
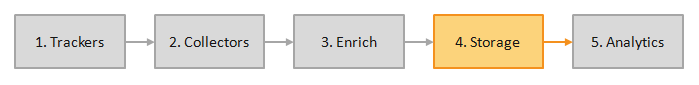

Storage documentation


The Enrichment process (module 3 in the diagram above) takes the raw Snowplow collector logs generated by module 2, tidies them up, enriches them (e.g. by adding Geo-IP data and performing referer parsing), and writes the output back to S3 as a cleaned-up set of Snowplow event files.
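The enrichment steps described above can be illustrated in a few lines. This is a minimal sketch, not the Scalding-based Enrichment Process itself: it assumes a simplified raw record of the form `timestamp<TAB>ip<TAB>querystring`, assumes the tracker querystring carries `e` (event type), `url` and `refr` parameters, and replaces the real Geo-IP database with a hard-coded, hypothetical lookup table. The output field names are illustrative only.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Hypothetical stand-in for a real Geo-IP database lookup.
GEO_LOOKUP = {"203.0.113.7": "GB"}

# Hypothetical table of search-engine hosts and the querystring key holding the search term.
SEARCH_ENGINES = {"www.google.com": "q", "www.bing.com": "q"}


@dataclass
class EnrichedEvent:
    collector_tstamp: str
    event: str
    user_ipaddress: str
    geo_country: Optional[str]
    page_url: Optional[str]
    refr_urlhost: Optional[str]
    refr_term: Optional[str]


def enrich(raw_line: str) -> Optional[EnrichedEvent]:
    """Tidy and enrich one simplified raw record; return None for lines that fail validation."""
    parts = raw_line.rstrip("\n").split("\t")
    if len(parts) != 3:
        return None  # tidy-up: drop malformed lines
    tstamp, ip, querystring = parts
    # parse_qs percent-decodes values; keep the first value of each parameter.
    params = {k: v[0] for k, v in parse_qs(querystring).items()}
    if "e" not in params:
        return None  # no event type, so not a valid tracker hit

    # Referer parsing: extract the referring host and, for known search engines, the search term.
    refr_host = refr_term = None
    if "refr" in params:
        refr = urlparse(params["refr"])
        refr_host = refr.hostname
        term_key = SEARCH_ENGINES.get(refr_host or "")
        if term_key:
            refr_term = parse_qs(refr.query).get(term_key, [None])[0]

    return EnrichedEvent(
        collector_tstamp=tstamp,
        event=params["e"],
        user_ipaddress=ip,
        geo_country=GEO_LOOKUP.get(ip),  # Geo-IP enrichment (stubbed)
        page_url=params.get("url"),
        refr_urlhost=refr_host,
        refr_term=refr_term,
    )
```

Returning `None` for invalid lines keeps the tidy-up step separate from enrichment; a production pipeline would more likely route such lines to an error bucket for inspection.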

The data in these files can be analysed directly by any big data tool that runs on Amazon EMR, such as Hive, Pig and Mahout.
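Because the event files are plain text, the kind of aggregation a Hive `GROUP BY ... COUNT(*)` query would run on EMR can be illustrated on a single machine. This toy sketch assumes the files are tab-separated; which column holds which field is an assumption you would take from the event model, and EMR would distribute this work across a cluster rather than loop over lines.

```python
import csv
from collections import Counter
from typing import Iterable


def count_by_column(lines: Iterable[str], column: int) -> Counter:
    """Count tab-separated rows by the value in one column, like GROUP BY ... COUNT(*)."""
    counts: Counter = Counter()
    # QUOTE_NONE: treat quote characters as ordinary data in tab-separated files.
    for row in csv.reader(lines, delimiter="\t", quoting=csv.QUOTE_NONE):
        if len(row) > column:  # skip blank or short rows instead of failing
            counts[row[column]] += 1
    return counts
```

An open file object can be passed directly as `lines`, since iterating over it yields one line at a time.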

In addition, the StorageLoader Ruby app can copy Snowplow data from those event files into Amazon Redshift, where it can be analysed using any tool that can connect to PostgreSQL. (This covers almost every analytics tool, including R, Tableau and Excel.)
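The load into Redshift comes down to a bulk `COPY` from S3. The sketch below builds such a statement for tab-separated event files under an S3 prefix. It is not the StorageLoader's actual code: the table name, bucket and the choice of the `EMPTYASNULL` option are illustrative assumptions.

```python
def build_copy_statement(table: str, s3_path: str, access_key_id: str, secret_access_key: str) -> str:
    """Build a Redshift COPY statement that loads tab-separated files under an S3 prefix."""
    if not s3_path.startswith("s3://"):
        raise ValueError("s3_path must be an s3:// URL")
    # Values are interpolated directly into SQL, so reject quotes rather than attempt escaping.
    for value in (table, s3_path, access_key_id, secret_access_key):
        if "'" in value:
            raise ValueError("quotes are not allowed in COPY arguments")
    credentials = f"aws_access_key_id={access_key_id};aws_secret_access_key={secret_access_key}"
    return (
        f"COPY {table} FROM '{s3_path}' "
        f"CREDENTIALS '{credentials}' "
        # '\t' (backslash-t) tells Redshift the files are tab-delimited;
        # EMPTYASNULL loads empty fields as NULL.
        "DELIMITER '\\t' EMPTYASNULL;"
    )
```

The resulting statement would be executed over an ordinary PostgreSQL connection. After the load, the same connection type serves the analytics tools mentioned above.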

Snowplow users can therefore store their data in a number of different storage modules, each suited to analysis in different tools:

- S3, for analysis in EMR

- Amazon Redshift, for analysis using a wide range of analytics tools

- PostgreSQL: a useful alternative for companies that do not need Redshift's ability to scale to petabytes of data

- Neo4J (coming soon): a graph database that enables more efficient and very detailed path analytics

- SkyDB (coming soon): an event database

- Infobright: an open source columnar database. This was supported in earlier versions of Snowplow (pre-0.8.0) but is not supported by the most recent version. We plan to restore support for Infobright at a later date.

This guide also covers:

- The [Snowplow Canonical Event Model](canonical-event-model). This covers the structure of Snowplow data as stored in S3, Amazon Redshift and PostgreSQL.

- The StorageLoader. This Ruby application is responsible for automating the regular movement of data from S3 into Redshift (and, eventually, into PostgreSQL, SkyDB, Neo4J, Infobright, etc.).
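To make the idea of a canonical event model concrete, the sketch below maps one stored row onto a fixed, named set of fields. The fields shown are a small illustrative subset, and both their names and their column order are assumptions here; the Canonical Event Model page defines the real structure.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class Event:
    # Illustrative subset only: the real model defines many more fields,
    # and this column order is assumed, not taken from the specification.
    app_id: Optional[str]
    platform: Optional[str]
    collector_tstamp: Optional[str]
    event: Optional[str]
    event_id: Optional[str]
    user_id: Optional[str]
    page_url: Optional[str]


def parse_event(line: str) -> Event:
    """Map one tab-separated row onto Event, treating empty strings as missing (NULL)."""
    names = [f.name for f in fields(Event)]
    values: List[str] = line.rstrip("\n").split("\t")
    if len(values) != len(names):
        raise ValueError(f"expected {len(names)} columns, got {len(values)}")
    return Event(**{n: (v if v != "" else None) for n, v in zip(names, values)})
```

Because every storage target shares the same field list, the same row can be loaded into S3-based tools, Redshift or PostgreSQL without reshaping.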

Copyright © 2012-2013 Snowplow Analytics Ltd
