Can a machine draw sketches like humans do? The paper A Neural Representation of Sketch Drawings presents a generative RNN capable of producing sketches of common objects, with the goal of training a machine to draw and generalize abstract concepts in a manner similar to humans. This repository presents examples ranging from simple sketches (e.g., a ladder) to harder ones (e.g., a cat).

The implementation is ported from the official TensorFlow implementation released by the authors under Project Magenta.

While there is already a large body of work on generative modelling of images using neural networks, most of it focuses on raster images represented as a 2D grid of pixels. This project instead uses a lower-dimensional, vector-based representation inspired by how people draw.

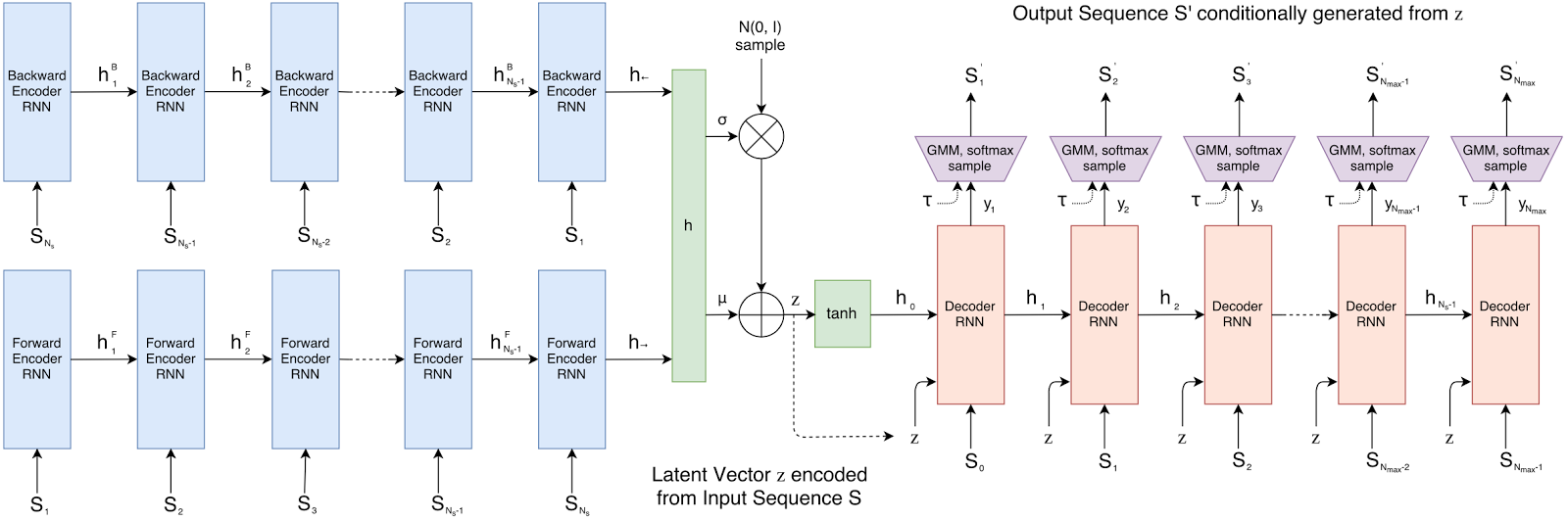

This project is based on a Sequence-to-Sequence Variational Autoencoder (Seq2SeqVAE), in which we encode the strokes of a sketch into a latent vector space using a bidirectional LSTM as the encoder. The latent representation can then be decoded back into a series of strokes.

The goal of a seq2seq autoencoder is to train a network to encode an input sequence into a vector of floating-point numbers, called a latent vector, and to reconstruct from this latent vector an output sequence that replicates the input as closely as possible. Because both the encoding and the decoder's sampling mechanism are stochastic, the reconstructed sketches differ from one run to the next, even for the same input.
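The stochastic encoding step can be illustrated in a few lines of plain Python. This is only a minimal sketch, assuming the parameterization used in the paper (the encoder outputs a mean `mu` and a log-variance-like term `sigma_hat`, and the latent vector is `mu + exp(sigma_hat / 2) * eps`). The function names `sample_latent` and `kl_loss` are hypothetical and are not taken from this repository.

```python
import math
import random


def sample_latent(mu, sigma_hat, rng=random):
    """Draw z = mu + exp(sigma_hat / 2) * eps per dimension, with eps ~ N(0, 1)."""
    return [m + math.exp(s / 2.0) * rng.gauss(0.0, 1.0)
            for m, s in zip(mu, sigma_hat)]


def kl_loss(mu, sigma_hat):
    """KL divergence between N(mu, exp(sigma_hat)) and N(0, I), averaged over dimensions."""
    n_z = len(mu)
    total = sum(1.0 + s - m * m - math.exp(s) for m, s in zip(mu, sigma_hat))
    return -0.5 * total / n_z


if __name__ == "__main__":
    mu = [0.5, -1.0, 0.0]
    sigma_hat = [0.0, -2.0, 1.0]
    # Two draws from the same encoder output give two different latent vectors.
    print(sample_latent(mu, sigma_hat))
    print(sample_latent(mu, sigma_hat))
    print(kl_loss(mu, sigma_hat))
```

Sampling twice from the same `mu` and `sigma_hat` yields two different latent vectors, which is one reason the same input sketch decodes to different reconstructions.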

- TensorFlow

- Python 3.8

- Ubuntu

- Google Colab

The datasets required to train the model are available on Google Cloud Platform. The data was collected from the game Quick, Draw!. Sketches are stored in stroke-3 format: each point consists of x and y offsets from the previous point, plus a ‘0’ or ‘1’ denoting pen down or pen up.
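To make the stroke-3 format concrete, here is a minimal sketch that converts a drawing given as polylines of absolute points into stroke-3 rows and back. It assumes each row is `[dx, dy, pen_lifted]`, where `dx` and `dy` are offsets from the previous point, `pen_lifted = 1` marks the last point of a stroke, and the drawing starts at the origin. The helper names are hypothetical and not part of this repository.

```python
def lines_to_stroke3(lines):
    """Convert polylines of absolute (x, y) points into [dx, dy, pen_lifted] rows."""
    strokes = []
    prev_x, prev_y = 0, 0  # assumption: the pen starts at the origin
    for line in lines:
        for i, (x, y) in enumerate(line):
            # The pen is lifted after the last point of each polyline.
            pen_lifted = 1 if i == len(line) - 1 else 0
            strokes.append([x - prev_x, y - prev_y, pen_lifted])
            prev_x, prev_y = x, y
    return strokes


def stroke3_to_lines(strokes):
    """Rebuild polylines of absolute points from [dx, dy, pen_lifted] rows."""
    lines, current = [], []
    x, y = 0, 0
    for dx, dy, pen_lifted in strokes:
        x, y = x + dx, y + dy
        current.append((x, y))
        if pen_lifted == 1:
            lines.append(current)
            current = []
    if current:  # keep a trailing stroke that was never closed
        lines.append(current)
    return lines


if __name__ == "__main__":
    # Two vertical rails of a ladder, drawn as separate strokes.
    rails = [[(0, 0), (0, 10)], [(5, 0), (5, 10)]]
    print(lines_to_stroke3(rails))
```

Storing offsets rather than absolute coordinates keeps the values small and makes the representation independent of where on the canvas the sketch was drawn.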

The model can be trained directly from SketchGen.ipynb, or run locally in the terminal using the command below:

$ python3 seq2seqVAE_train.py <optional arguments>

--data_dir = datasets

--experiment_dir = experiments

--epochs = 20

NOTE: There are many more hyperparameters available in the file seq2seqVAE.py which can be modified.

You can use SketchGen.ipynb, after providing the appropriate paths to all files, to explore the sketches in depth. It contains examples of encoding and decoding sketches, interpolating in latent space, sampling under different temperature values, and more.
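Two of these ideas, temperature and latent-space interpolation, can be sketched independently of the notebook. The example below is illustrative only. It assumes temperature is applied by dividing the logits of a categorical distribution (such as the mixture weights or pen states) by the temperature before the softmax, as described in the paper, and it uses simple linear interpolation between two latent vectors. The function names are hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Softmax over logits / temperature; lower temperature gives a sharper distribution."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def lerp(z0, z1, t):
    """Linearly interpolate between latent vectors z0 (t = 0) and z1 (t = 1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.0]
    for tau in (0.1, 0.5, 1.0):
        print(tau, softmax_with_temperature(logits, tau))

    # Five evenly spaced latent vectors between two (made-up) encodings.
    z_a, z_b = [0.0, 0.0], [2.0, 4.0]
    for step in range(5):
        print(lerp(z_a, z_b, step / 4))
```

Decoding each interpolated vector in turn would give a sequence of sketches that gradually changes from the first drawing to the second. Low temperatures make the decoder pick its most likely strokes, while temperatures near 1 produce more varied output.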

The repo contains 4 pre-trained models, each trained with Encoder_Size=256, Decoder_Size=512, and latent_vector_space=128:

- Cat (20 Epochs)

- Ladder (20 Epochs)

- Sun (20 Epochs)

- Leaf (20 Epochs)

NOTE [June, 2021]: Datasets and Pre-trained models have been removed.

- David Ha and Douglas Eck, A Neural Representation of Sketch Drawings, 2017

- David Ha, Teaching Machines to Draw, 2017

- Official TensorFlow Implementation released under Project Magenta