Welcome to the BYOL (Bootstrap Your Own Latent) repository! It contains a from-scratch implementation of BYOL, a self-supervised representation learning algorithm that learns image features without labels and without the negative pairs used by contrastive methods.

Before running the code, ensure you have the following dependencies:

- Python 3: The language used for implementation.

- PyTorch: The deep learning framework powering our model training and evaluation.

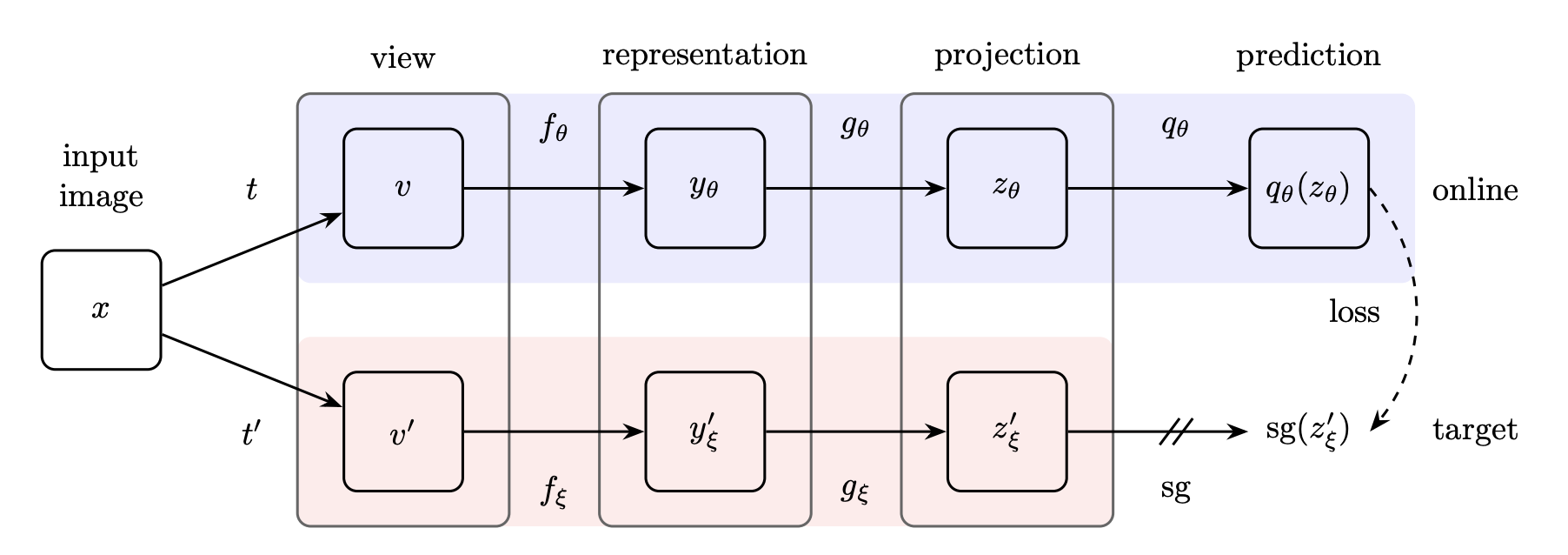

Unlike contrastive methods, which rely on negative samples, BYOL trains only on positive pairs: two differently augmented views of the same image. An online network learns to predict the output of a slowly updated target network for the other view. This simplifies training and avoids the cost of collecting and comparing against large sets of negatives.

- No Negative Samples Required: Efficient training by focusing exclusively on positive pairs.

- Strong Benchmark Results: The original BYOL paper reports results that were state of the art at publication on ImageNet linear evaluation and on several transfer benchmarks.
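As a rough illustration of training on positive pairs only, the sketch below shows the two pieces at the core of BYOL: a regression loss between the online prediction and the target projection, and an exponential moving average (EMA) update of the target network. The names `byol_loss` and `ema_update`, the toy `nn.Linear` networks, and the decay `tau=0.99` are assumptions made for this example and are not taken from the repository's code.

```python
import copy

import torch
import torch.nn as nn
import torch.nn.functional as F


def byol_loss(online_prediction: torch.Tensor, target_projection: torch.Tensor) -> torch.Tensor:
    """Per-sample BYOL regression loss: 2 - 2 * cosine similarity."""
    p = F.normalize(online_prediction, dim=-1)
    # Stop-gradient: the target branch is never updated by backpropagation.
    z = F.normalize(target_projection.detach(), dim=-1)
    return 2.0 - 2.0 * (p * z).sum(dim=-1)


@torch.no_grad()
def ema_update(online: nn.Module, target: nn.Module, tau: float = 0.99) -> None:
    """In-place update: target = tau * target + (1 - tau) * online."""
    for p_online, p_target in zip(online.parameters(), target.parameters()):
        p_target.mul_(tau).add_(p_online, alpha=1.0 - tau)


# Toy usage with stand-in networks (hypothetical sizes, not the real encoder).
online_net = nn.Linear(8, 4)
target_net = copy.deepcopy(online_net)
for param in target_net.parameters():
    param.requires_grad_(False)
predictor = nn.Linear(4, 4)  # the predictor exists only on the online branch

view_1, view_2 = torch.randn(16, 8), torch.randn(16, 8)

# Symmetrized loss: each view is predicted from the other.
loss = (
    byol_loss(predictor(online_net(view_1)), target_net(view_2))
    + byol_loss(predictor(online_net(view_2)), target_net(view_1))
).mean()
loss.backward()

# After the optimizer step on the online network, the target follows via EMA.
ema_update(online_net, target_net, tau=0.99)
```

No negative samples appear anywhere in the loss. The stop-gradient on the target branch and the asymmetric predictor are the elements the BYOL paper identifies as preventing the representation from collapsing to a constant.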

We use the STL10 dataset to evaluate our BYOL implementation. This dataset is tailored for developing and testing unsupervised feature learning and self-supervised learning models.

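- Overview: Contains 10 classes, each with 500 labeled training images and 800 test images, plus 100,000 unlabeled images intended for unsupervised learning. All images are 96x96 RGB.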

- Source: STL10 Dataset

Our experiments highlight the impact of BYOL pretraining on the STL10 dataset:

- Without BYOL Pretraining: Baseline accuracy of 84.58%.

- With BYOL Pretraining: Accuracy improved to 87.61% after 10 epochs, a gain of 3.03 percentage points over the baseline.

This repository features a complete, from-scratch implementation of BYOL. For our experiments, the encoder is a ResNet18 initialized with ImageNet-pretrained weights, so BYOL pretraining on STL10 starts from ImageNet features rather than from random initialization.