Simply copy both files, tbp_main.py and tbp_lib.py, into a folder; open the main file in an editor, add the path info, and change the parameters. I might make a version with flags later, but I like to see the possible options. You will need to install the dependencies numpy and PIL (available as the Pillow package).

This is a project I have been working on, on and off, for more than a decade (the earliest version I have on my drive is from 2008 and was started in Processing, but I let it rest for quite a while). The basic idea is to conceive of a photograph as a function over the time of a video file. While a photograph is a projection of a point in time onto a static image, time-based photography, as I understand it, compresses a temporal sequence into such a static image.

The project works in two ways:

For each frame of a video file, a single vertical strip of a predetermined width is extracted; these strips are concatenated in order and returned as an image file.

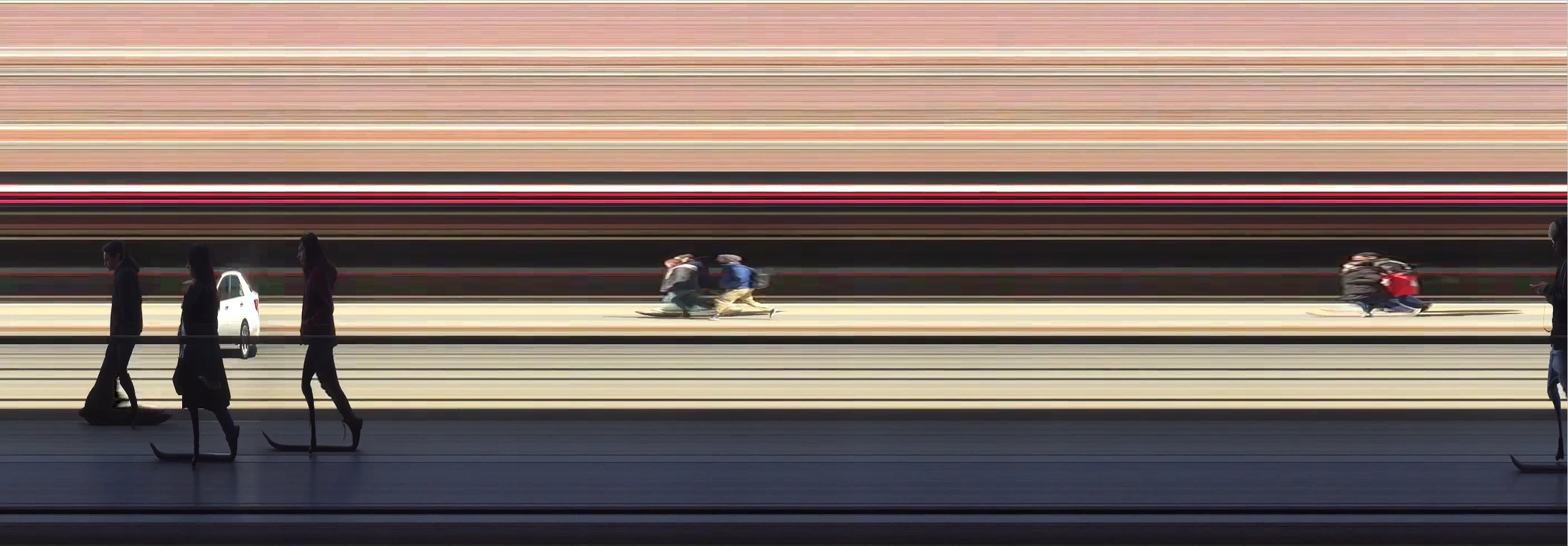
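The strip extraction described above can be sketched in a few lines of numpy. This is a minimal illustration, not the code in tbp_lib.py: the function name `slit_scan`, its parameters, and the synthetic frames are all assumptions made for the example, and it assumes the frames have already been decoded into arrays.

```python
import numpy as np


def slit_scan(frames, x, strip_width=1):
    """Concatenate one vertical strip per frame into a single image array.

    frames: iterable of H x W x 3 uint8 arrays, one per video frame.
    x: left edge of the strip; strip_width: its width in pixels.
    (Hypothetical helper, not part of the project's library.)
    """
    strips = [frame[:, x:x + strip_width] for frame in frames]
    # Strips are joined side by side, so the x axis of the result is time.
    return np.concatenate(strips, axis=1)


# Synthetic stand-in for a decoded video: 200 frames of 120 x 160 RGB noise.
rng = np.random.default_rng(0)
frames = [
    rng.integers(0, 256, size=(120, 160, 3), dtype=np.uint8)
    for _ in range(200)
]

# Take the center column of every frame.
result = slit_scan(frames, x=80, strip_width=1)
print(result.shape)  # (height, number of frames * strip_width, channels)
```

The resulting array could then be turned into an image file with PIL, for instance via `Image.fromarray(result).save(...)`.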

Here is an example of a person walking past my window.

**Input video (recorded in slow motion):**

window.mp4

**Output image:**

The output image is a function of the time of the input video; as the camera is static, only the differential movements are captured in the image. Notice that this translates into interesting distortions, where some parts (the feet) are stretched more than others, and into a reversal of directions: Even though the car is passing by from right to left in the video, in the image, it appears to drive from left to right. The same goes for the people, who walk in different directions but in the image appear to face the same way. The reason for this is that all movements are mapped continuously onto the image. This video helps to understand how it works:

window_composite.mp4
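Why everything ends up facing the same way can also be checked with a toy simulation. The sketch below is an illustration under stated assumptions, not the project's code: `moving_bar_video` and `slit_scan` are hypothetical helpers, the "object" is a gray bar with a bright leading edge, and strips are assumed to be appended left to right in time order (if the script appends them the other way round, the shared direction is simply mirrored).

```python
import numpy as np


def moving_bar_video(direction, n_frames=60, width=40, height=4, bar=8):
    """Grayscale clip (frames, height, width) of a bar crossing the frame.

    The bar's body has value 100 and its leading edge has value 255.
    direction: "right" (moves left to right) or "left" (right to left).
    """
    video = np.zeros((n_frames, height, width), dtype=np.uint8)
    for t in range(n_frames):
        if direction == "right":
            left = t - bar          # bar enters from the left border
            lead = left + bar - 1   # leading edge is the bar's right side
        else:
            left = width - t        # bar enters from the right border
            lead = left             # leading edge is the bar's left side
        for col in range(left, left + bar):
            if 0 <= col < width:
                video[t, :, col] = 255 if col == lead else 100
    return video


def slit_scan(video, x):
    """One 1-pixel column per frame; output column t comes from frame t."""
    return video[:, :, x].T


for direction in ("right", "left"):
    row = slit_scan(moving_bar_video(direction), x=20)[0]
    hit = np.flatnonzero(row)
    # In both cases the bright leading edge is the first (leftmost) pixel.
    print(direction, row[hit].tolist())
```

Whatever crosses the slit first is written first, so the leading edge of every object lands on the same side of the output regardless of its direction of travel in the video.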

Here, it is best to use slow-motion footage so that ideally each slice of the output image covers the movement of one pixel in the video. The movement mapping and its distortion are especially interesting for moving bodies. This is, for example, how I made my profile image:

Input video:

turn.mov

Output image:

(The video was longer, I turned more than once. Notice how I sometimes stray too far from my axis so that, for instance, my mouth appears twice, hanging in mid-air.)
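The earlier recommendation to use slow-motion footage comes down to simple arithmetic: the higher the frame rate, the fewer pixels a subject moves between two frames, and the closer a one-pixel slice comes to covering one pixel of movement. The numbers below are purely hypothetical (a subject crossing a 1920-pixel-wide frame in four seconds), and `pixels_per_frame` is a helper invented for this example.

```python
def pixels_per_frame(speed_px_per_s, fps):
    """How far a subject moves between two consecutive frames, in pixels."""
    return speed_px_per_s / fps


# Hypothetical subject: crosses a 1920 px wide frame in 4 seconds.
speed = 1920 / 4.0  # pixels per second

for fps in (30, 120, 240):
    # A slice roughly this wide keeps the subject's proportions intact.
    print(fps, "fps ->", pixels_per_frame(speed, fps), "px per frame")
```

At the lower frame rate in this made-up case, slices would have to be many pixels wide to avoid squashing the subject, which makes the output blockier.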

Another approach is to move the camera so as to avoid the background repetition. Here, the effect is similar to the "panorama" feature on many cell phones. Since here we don't have a single vanishing point, this is also the reduction of perspectival space in a static image, in effect producing an isomorphic image (or at least an image with more than one vanishing point).

Here is a video of my old stop at 125th St in New York turned into an image. (This time, the "slices" are wider than one pixel as this was taken in, I think, 2013 before the iPhone allowed slo-mo.)

Input video:

125th-trim.mov

Output image:

Here is another, smoother image from a slo-mo video.

Input video:

trainride.mp4

Output image:

You can see how objects moving in the foreground move faster and are thus compressed, while those in the background, moving slower, are extended. Notice also how the straight bridge becomes curved due to the differential movement. (Panofsky would have liked this.)

## 2) Image(time) -> Video(perspective)

Operation 1) is repeated for every possible vertical "slice".

For each of the images above, I had to decide which position on the x axis to use for the "slice." For instance, for the very first image, I chose the center slice (the vertical line equidistant from the first and the last column of pixels in the video frame; you can see this well in the composite video, where I had to cut the video in half to show how it maps onto the image).

But for a video with a width of n pixels, there are also n possible positions for these "slices." The outputs differ because time passes while something, be it the camera or an object, travels from the first position to the last; also, especially for videos with a lot of depth, there is a slight difference in perspective between the first and the last "slice."

For example, this is the output for the video from above of the 125th St stop, taken at different "slice" positions (the video was 1080 pixels wide):

Very first column of pixels (n=0):

Center column of pixels (n=540):

Last column of pixels (n=1079):

This makes intuitive sense if you remember that this is taken from a moving train: The corner of the building in the middle would move in and out of frame, first only as an edge blocking the view into the street, then lining up with the perspective of the street, and finally showing the facades of the street. Since the last column of pixels captures the first phase and the first column (as the camera is moving from left to right) the last phase of that movement (time having passed), the perspectives are different.

Now, since this can be done for all n columns of pixels of a video, one can turn the total of all these slightly different images into a video again. The result is something like a derivative of the video's time function.
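If the whole clip fits in memory as one array and the slices are one pixel wide, repeating operation 1) for every column amounts to swapping the time axis and the x axis of the video. The sketch below illustrates this under those assumptions; `slice_video` and the random test clip are made up for the example and are not taken from the project's scripts.

```python
import numpy as np


def slice_video(video):
    """Swap the time and x axes of a clip.

    video: array of shape (frames, height, width, channels).
    Returns an array of shape (width, height, frames, channels), so that
    output frame x is the slit-scan image taken at column x.
    (Hypothetical helper; assumes 1-pixel slices.)
    """
    return np.transpose(video, (2, 1, 0, 3))


# Synthetic stand-in for a decoded clip: 50 frames of 12 x 16 RGB noise.
rng = np.random.default_rng(1)
video = rng.integers(0, 256, size=(50, 12, 16, 3), dtype=np.uint8)

out = slice_video(video)

# Cross-check one output frame against the strip-by-strip construction.
x = 8
single = np.concatenate([frame[:, x:x + 1] for frame in video], axis=1)
print(out.shape, np.array_equal(out[x], single))
```

The output clip therefore has as many frames as the input is wide, and each of its frames is as wide as the input is long.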

Here it is for the 125th St stop (still a bit blocky):

125th.mp4

(This was an early attempt; I made a mistake in the script so the last few slices are repeated.)

And here is the "derivative" for the other train video:

trainride_derivative.mp4

I like how the fact that the background in the video moves slower than the foreground translates into the reverse: Now it is the background that moves while the foreground remains static - it is as if the perspective had been flipped in some way I don't quite have a name for yet.

This works also for videos where the camera remains static. Here is a similar shot of my old street as above:

Untitled.mp4

Two things are interesting here: First, the slow black flicker in the bottom half of the screen comes from the vertical bars of the fence you see in the first video. And second, you can see how the people in the background appear to go backwards - they are still flipped but now move in the correct direction!

And here is a similar shot as before with me rotating:

rotate.mp4

Recently, I have been combining the static and the moving approach by mounting my phone on a slowly rotating tripod. This way, you get both the background and the moving objects without either of them blurring. The results can be absolutely freaky, as in this street scene from Berlin:

Input video:

prenzlauer_berg_orig.mp4

**Output video:**

prenzlauer_berg_output.mp4

To me, this looks insane - the combination of both approaches makes them look like they are floating, and the perspectival stretching/compressing makes the jogger smaller than the lamppost he is jogging in front of.