diff --git a/README.md b/README.md

index b236c6d7..a8c64248 100644

--- a/README.md

+++ b/README.md

@@ -59,6 +59,8 @@ Geochemistry π was selected for featuring as an Editor’s Highlight in EOS

Eos Website: https://eos.org/editor-highlights/machine-learning-for-geochemists-who-dont-want-to-code.

+

+

## Quick Installation

Our software is well tested on **macOS** and **Windows** system with **Python 3.9**. Other systems and Python version are not guranteed.

diff --git a/docs/source/For Developer/Add New Model To Framework.md b/docs/source/For Developer/Add New Model To Framework.md

index 3b3eb0c2..d2276ea9 100644

--- a/docs/source/For Developer/Add New Model To Framework.md

+++ b/docs/source/For Developer/Add New Model To Framework.md

@@ -1,276 +1,230 @@

# Add New Model To Framework

+## Table of Contents

+- [1. Framework - Design Pattern and Hierarchical Pipeline Architecture](#1-design-pattern)

+- [2. Understand Machine Learning Algorithm](#2-understand-ml)

+- [3. Construct Model Workflow Class](#3-construct-model)

+ - [3.1 Add Basic Elements](#3-1-add-basic-element)

+ - [3.1.1 Find File](#3-1-1-find-file)

+ - [3.1.2 Define Class Attributes and Constructor](#3-1-2-define-class-attributes-and-constructors)

+ - [3.2 Add Manual Hyperparameter Tuning Functionality](#3-2-add-manual)

+ - [3.2.1 Define manual_hyper_parameters Method](#3-2-1-define-manaul-method)

+ - [3.2.2 Create _algorithm.py File](#3-2-2-create-file)

+ - [3.3 Add Automated Hyperparameter Tuning (AutoML) Functionality](#3-3-add-automl)

+ - [3.3.1 Add AutoML Code to Model Workflow Class](#3-3-1-add-automl-code)

+ - [3.4 Add Application Function to Model Workflow Class](#3-4-add-application-function)

+ - [3.4.1 Add Common Application Functions and common_components Method](#3-4-1-add-common-function)

+ - [3.4.2 Add Special Application Functions and special_components Method](#3-4-2-add-special-function)

+ - [3.4.3 Add @dispatch() to Component Method](#3-4-3-add-dispatch)

+ - [3.5 Storage Mechanism](#3-5-storage-mechanism)

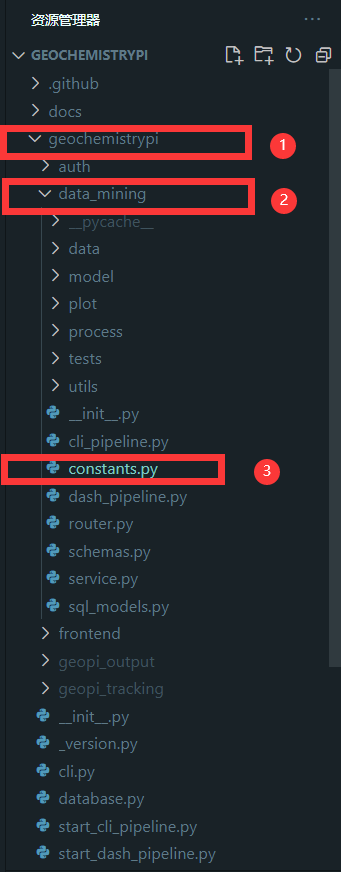

+- [4. Instantiate Model Workflow Class](#4-instantiate-model-workflow-class)

+ - [4.1 Find File](#4-1-find-file)

+ - [4.2 Import Module](#4-2-import-module)

+ - [4.3 Define activate Method](#4-3-define-activate-method)

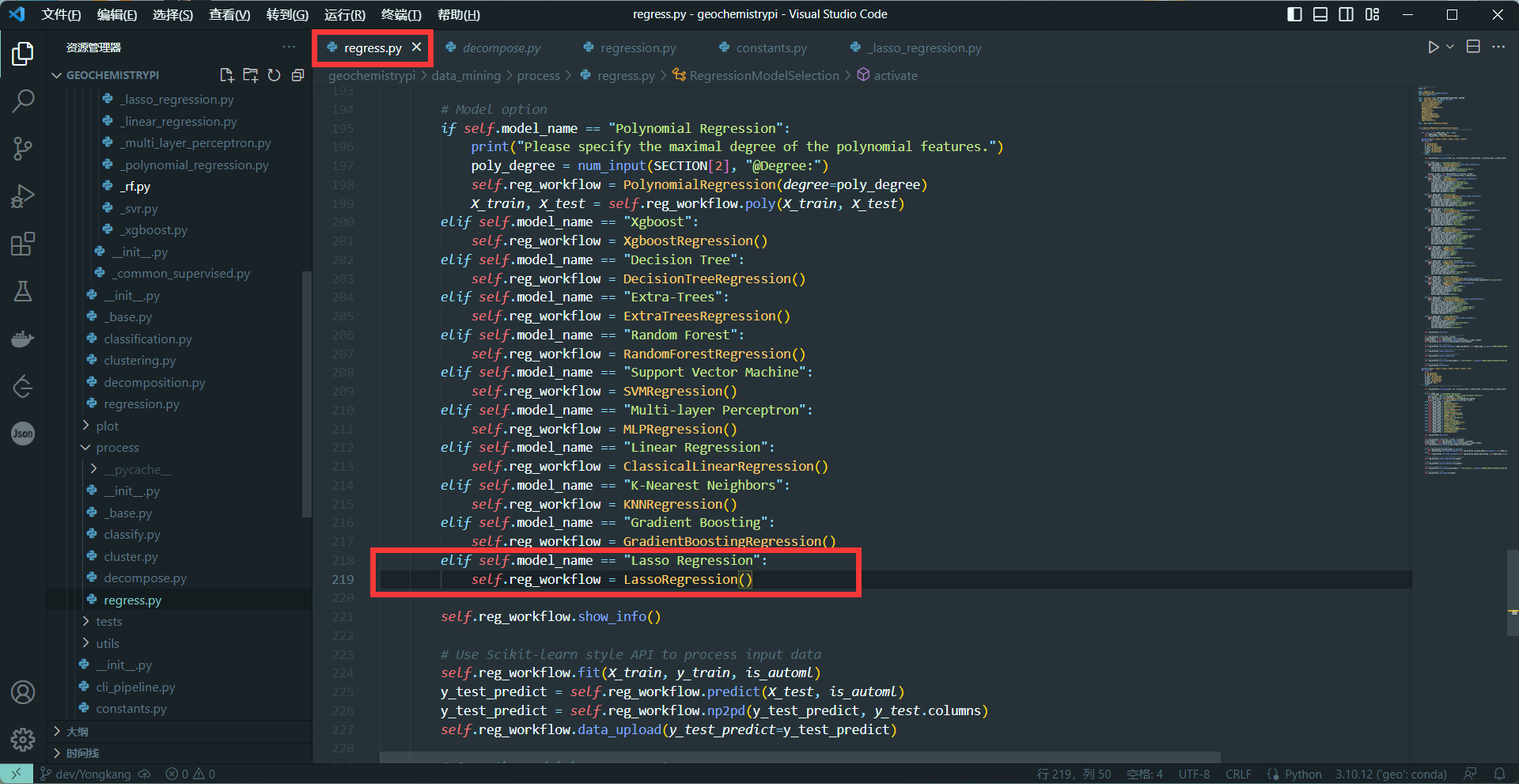

+ - [4.4 Create Model Workflow Object](#4-4-create-model-workflow-object)

+ - [4.5 Invoke Other Methods in Scikit-learn API Style](#4-5-invoke-other-methods)

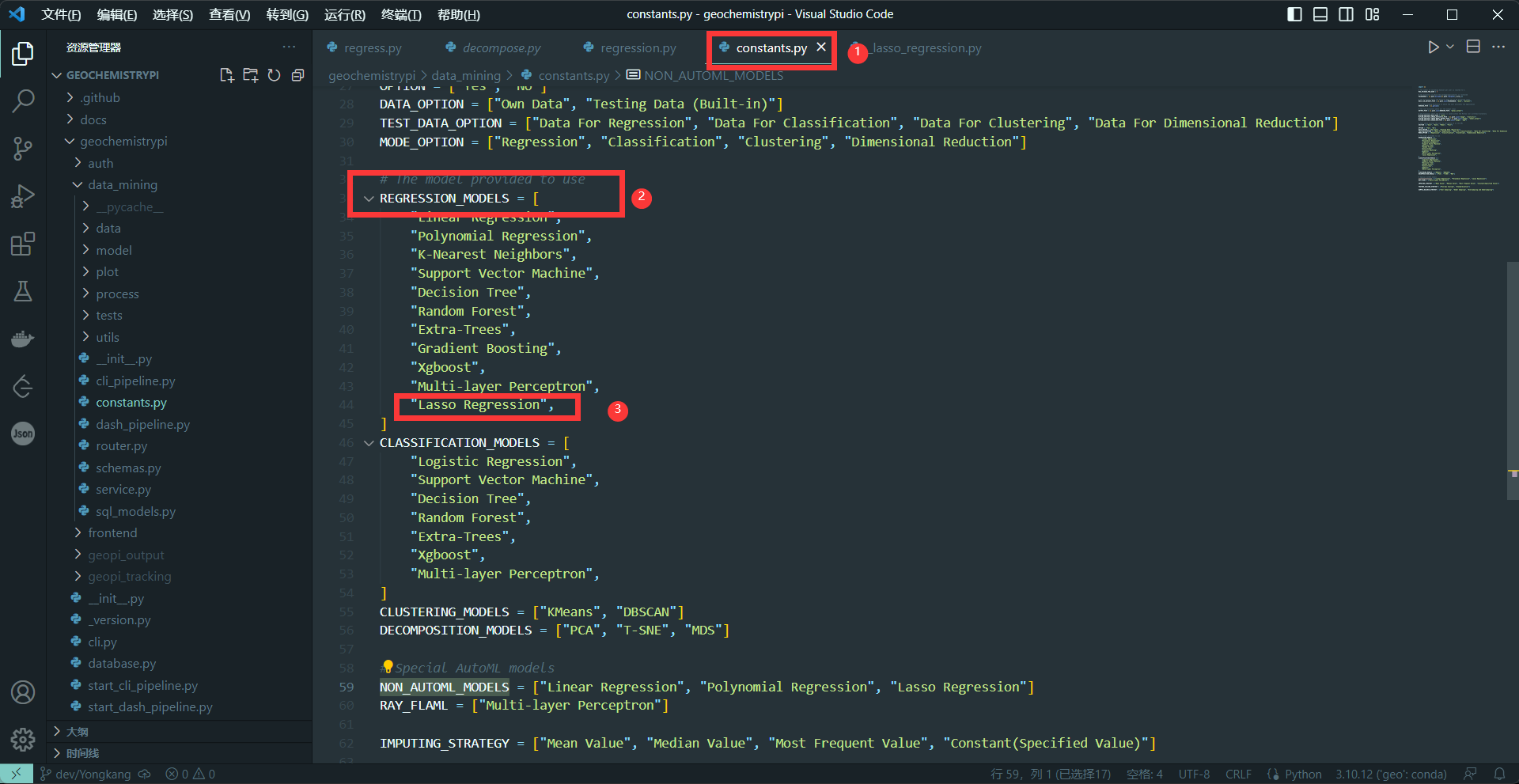

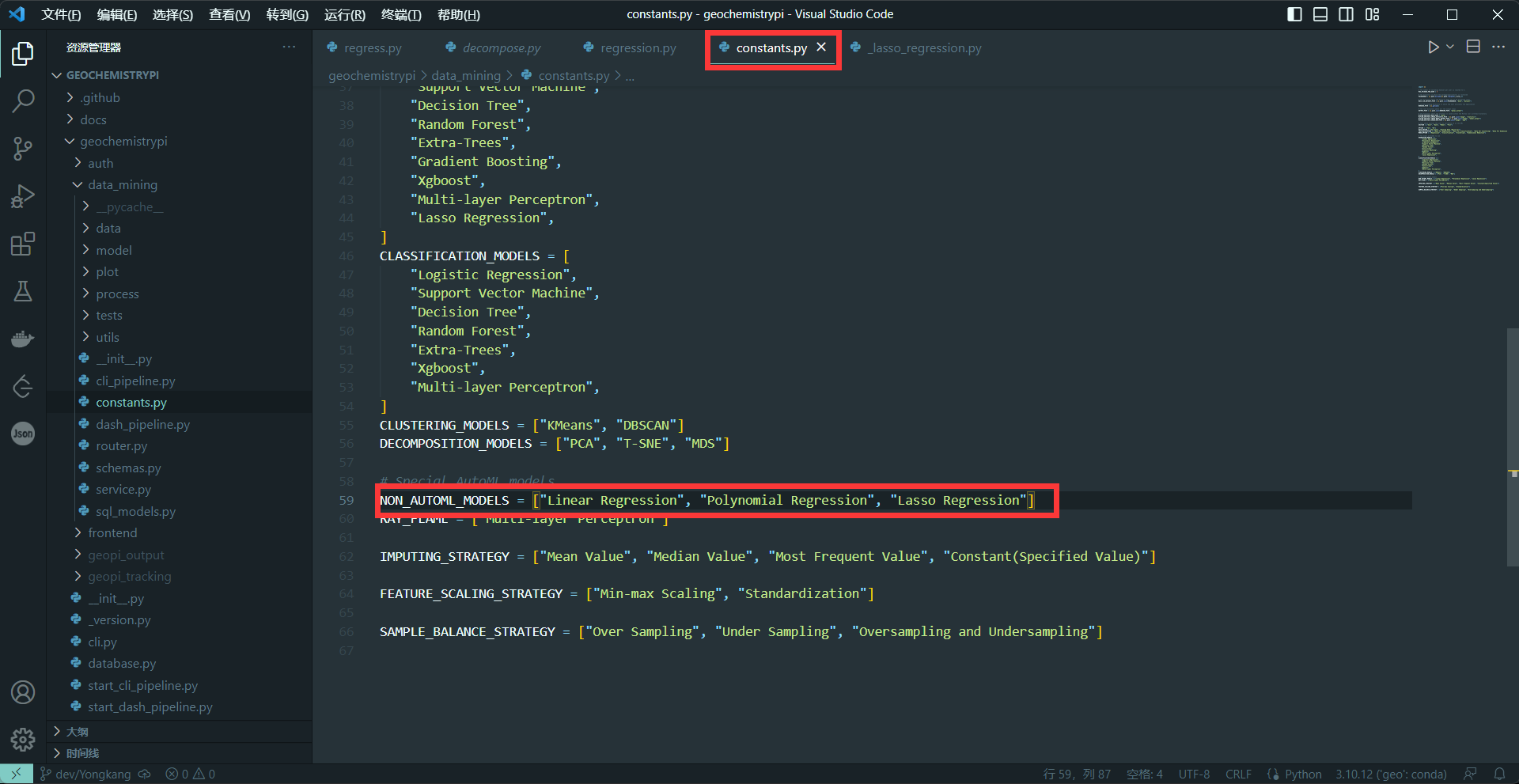

+ - [4.6 Add model_name to MODE_MODELS or NON_AUTOML_MODELS](#4-6-add-model-name)

+- [5. Test Model Workflow Class](#5-test-model)

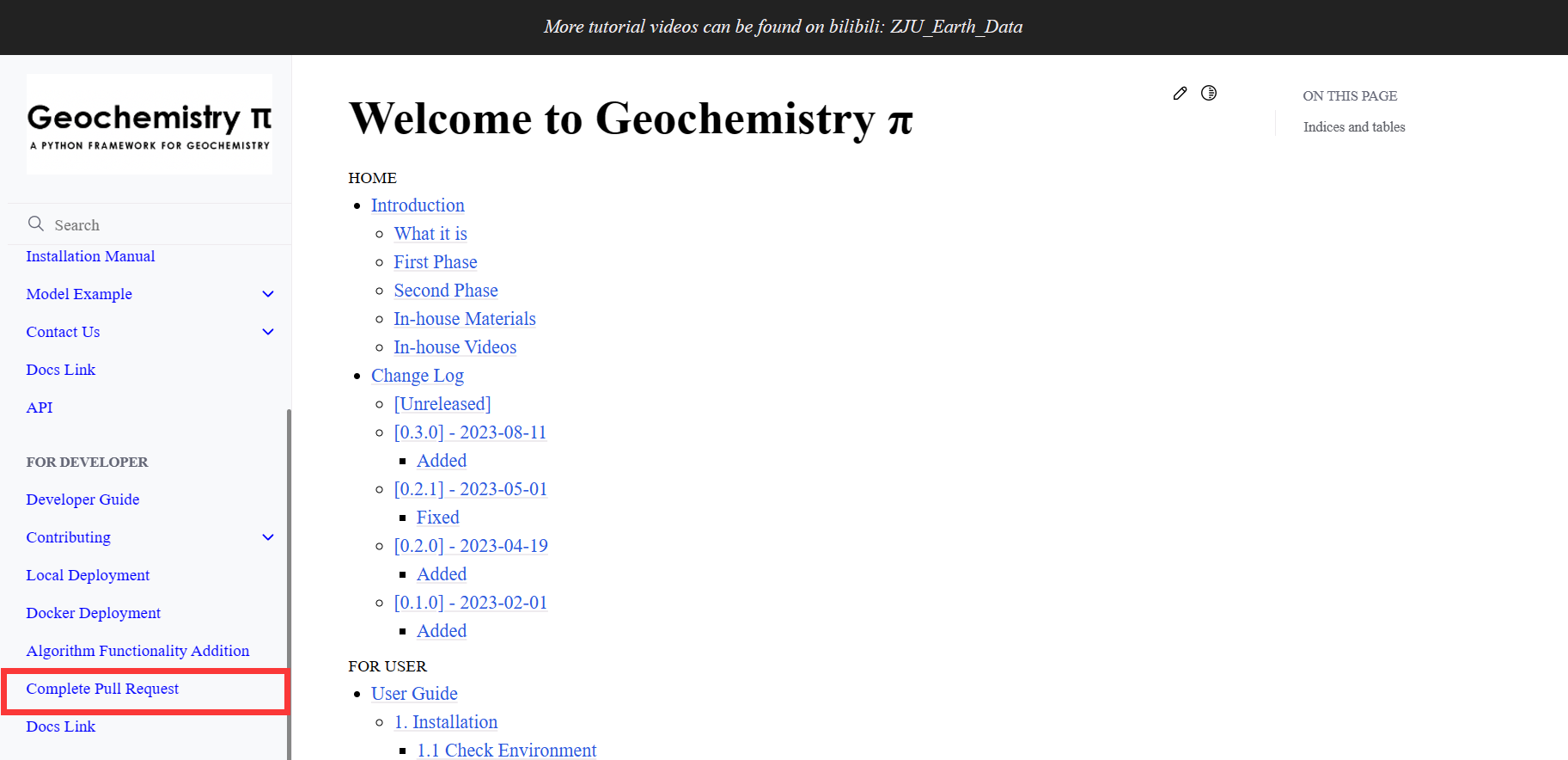

+- [6. Completed Pull Request](#6-completed-pull-request)

+- [7. Precautions](#7-precautions)

-## Table of Contents

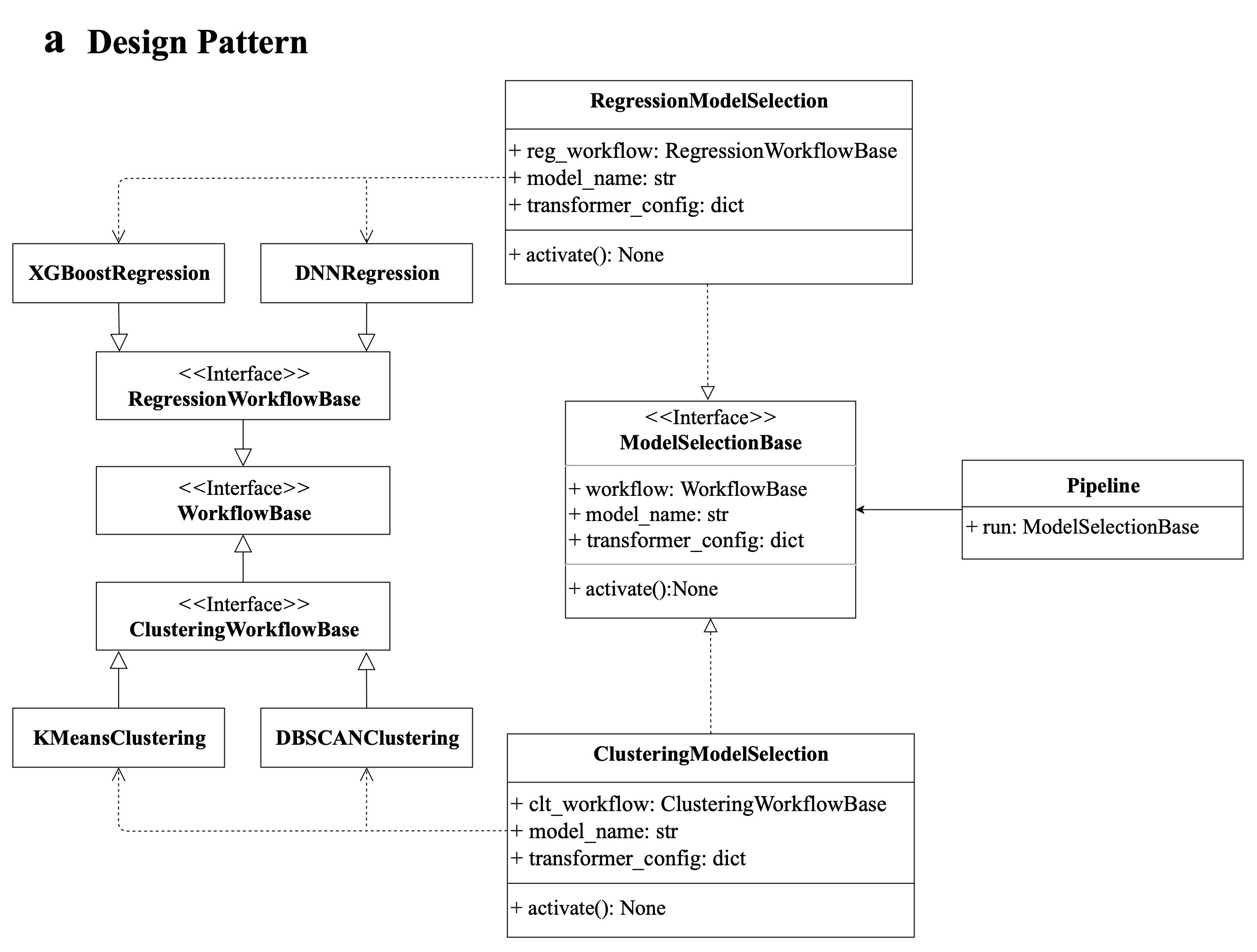

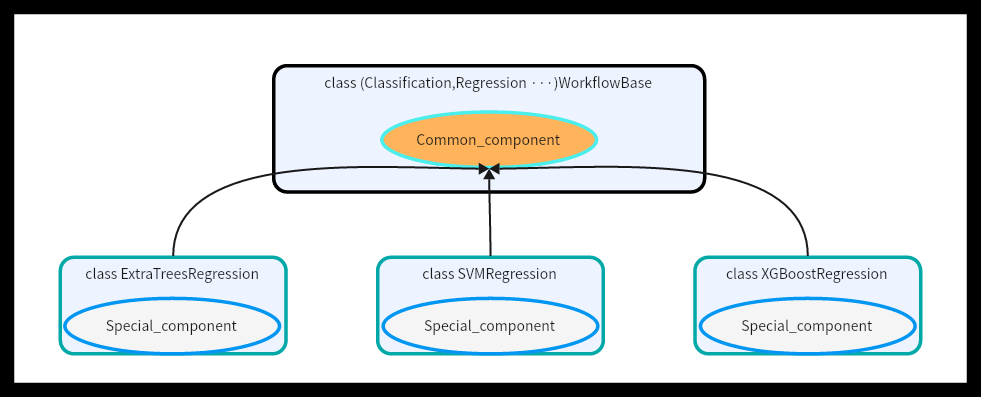

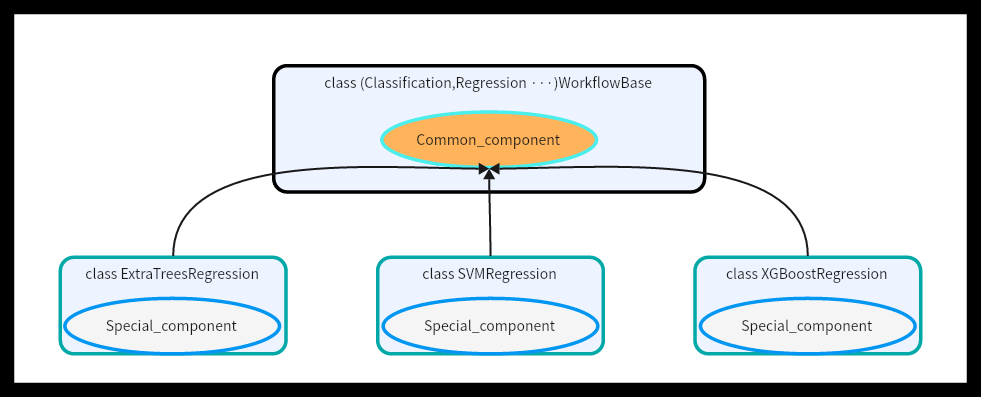

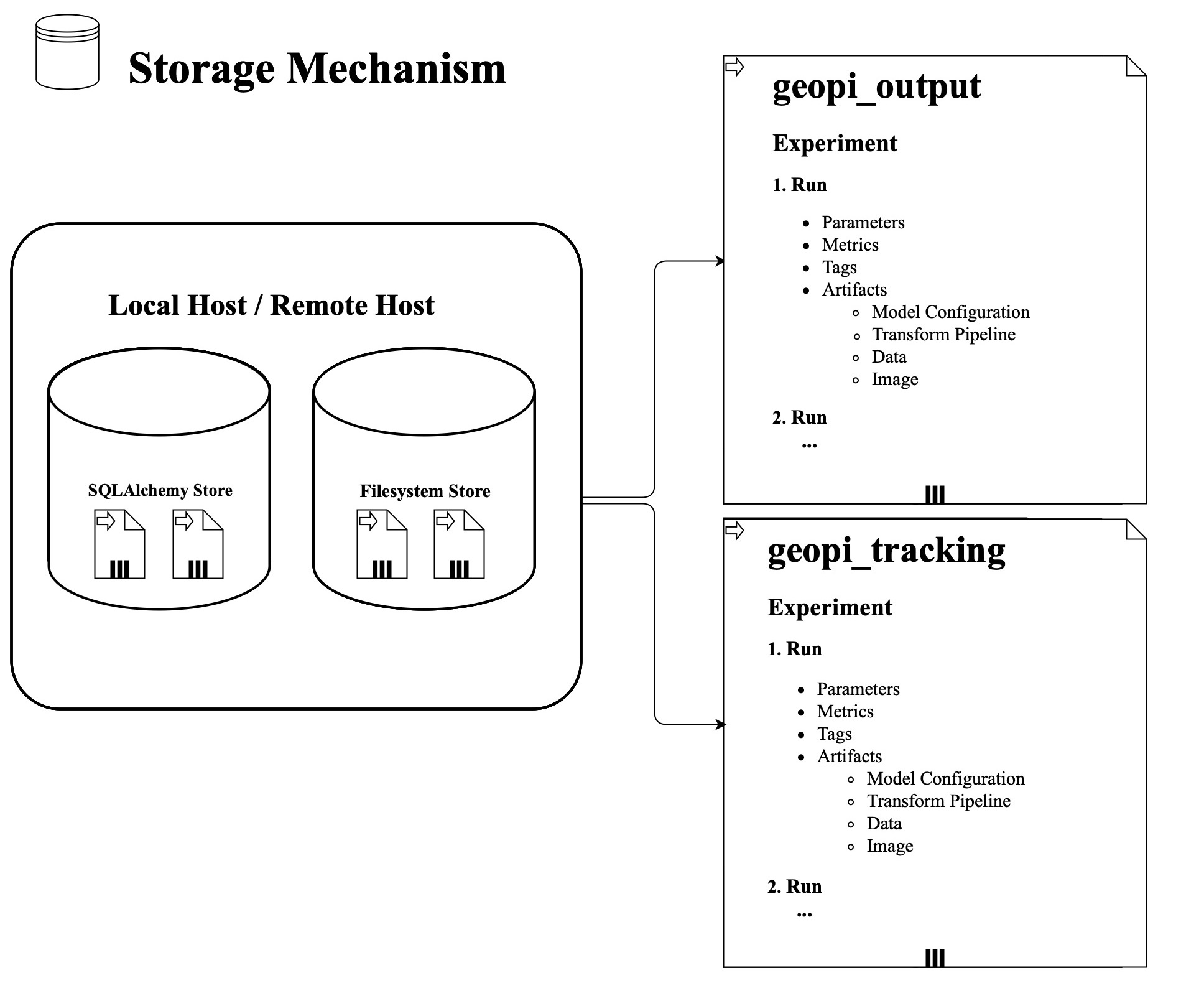

+## 1. Framework - Design Pattern and Hierarchical Pipeline Architecture

+

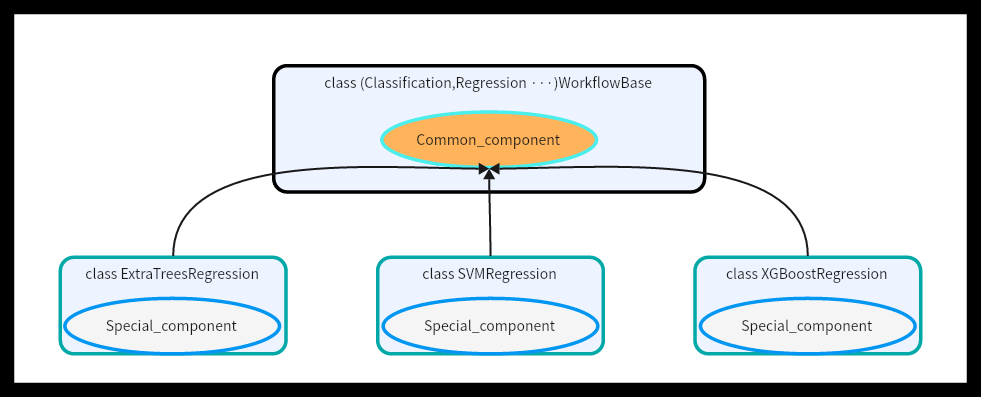

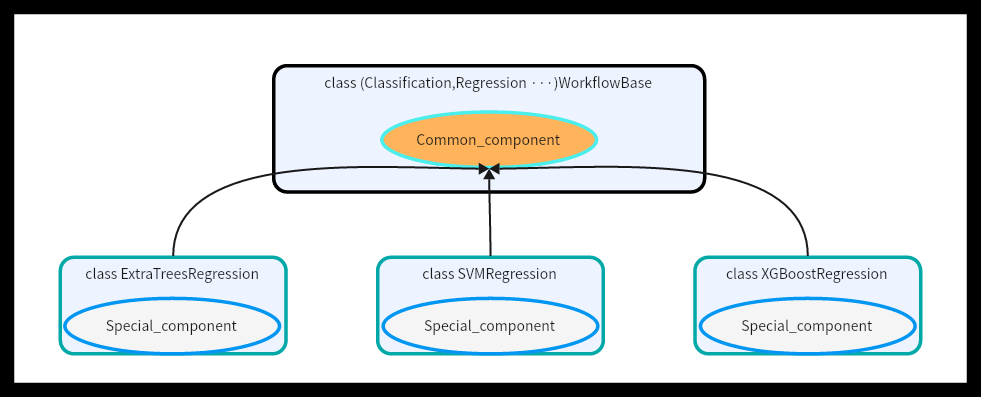

+Geochemistry π refers to the software design pattern "Abstract Factory", serving as the foundational framework upon which our advanced automated ML capabilities are built.

+

+

+

-- [1. Understand the model](#1-understand-the-model)

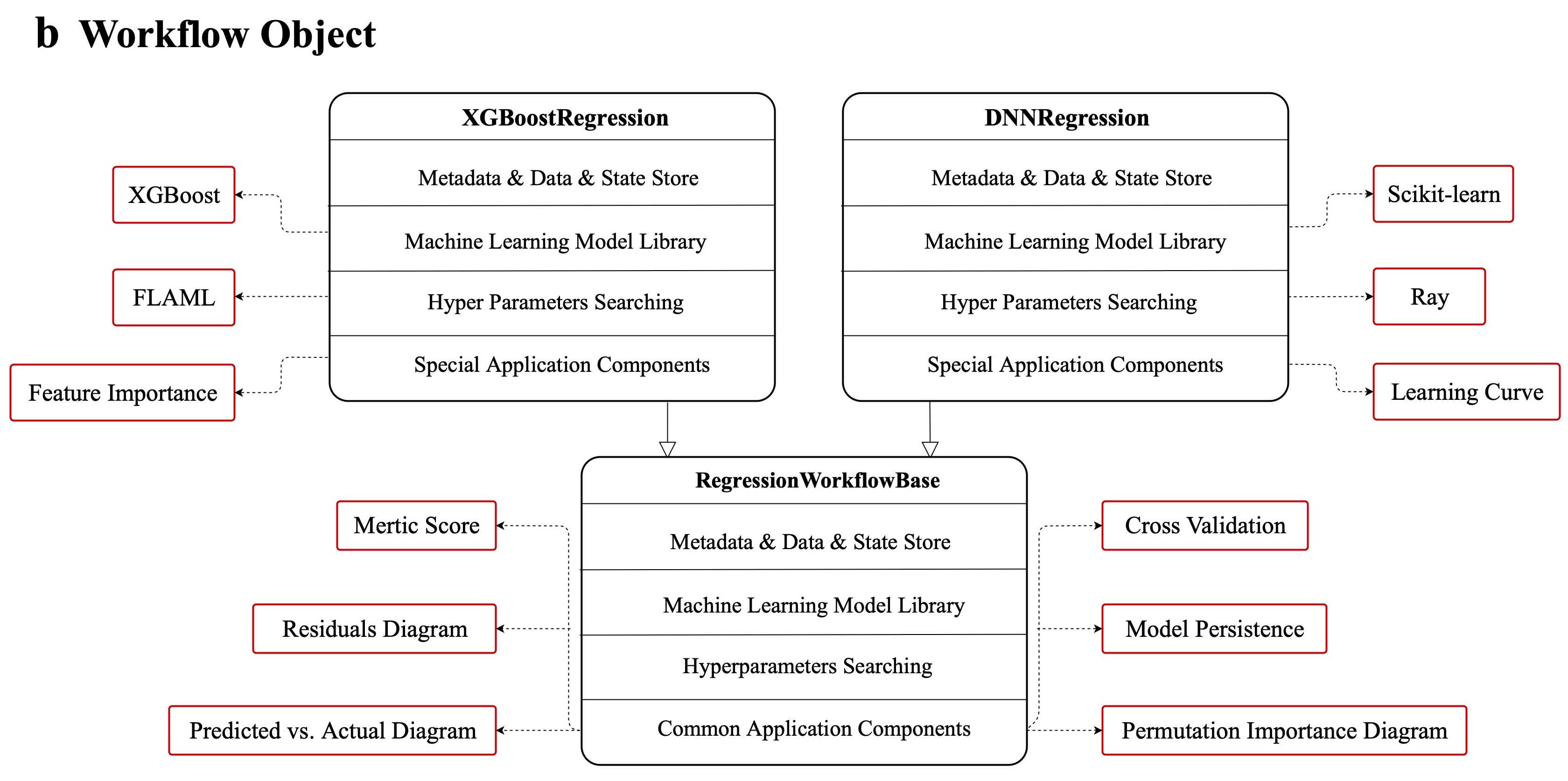

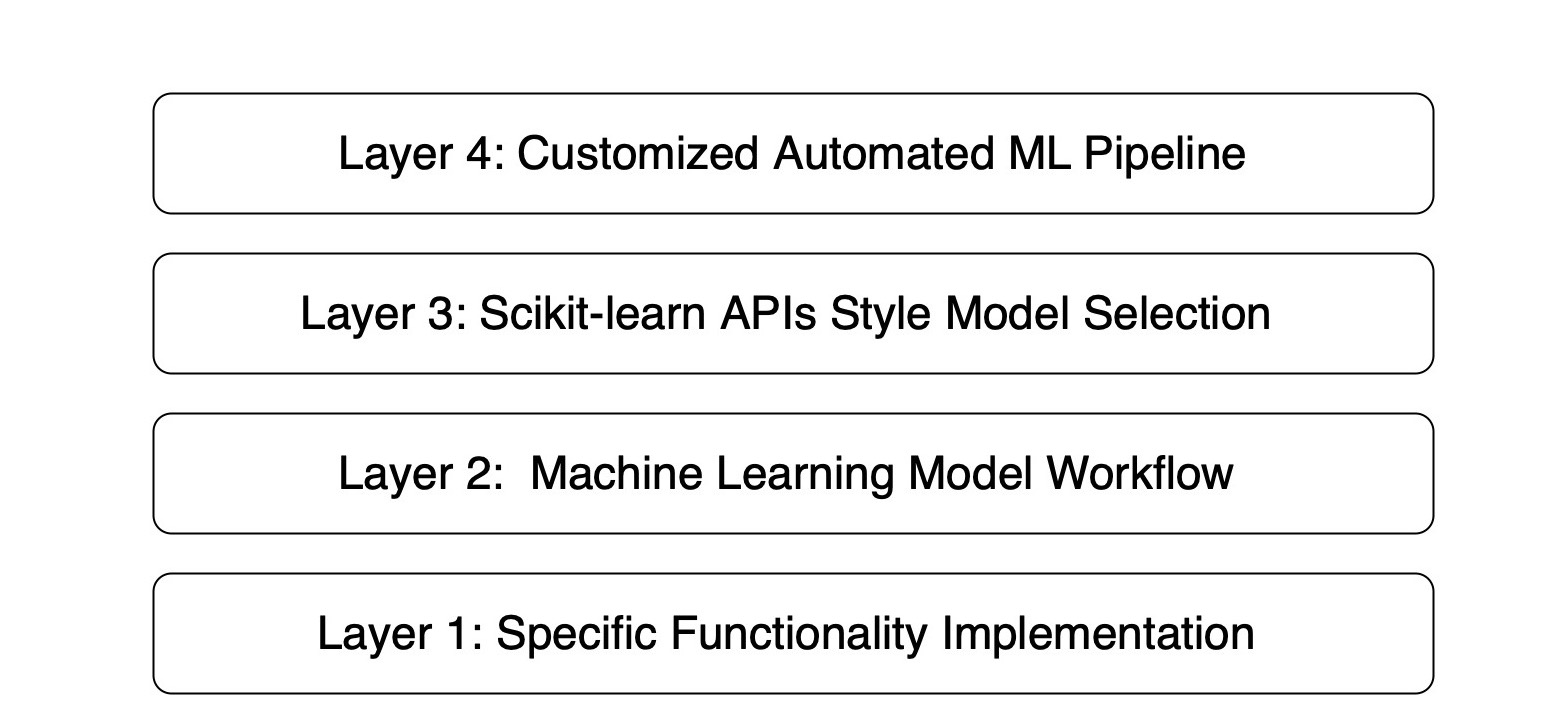

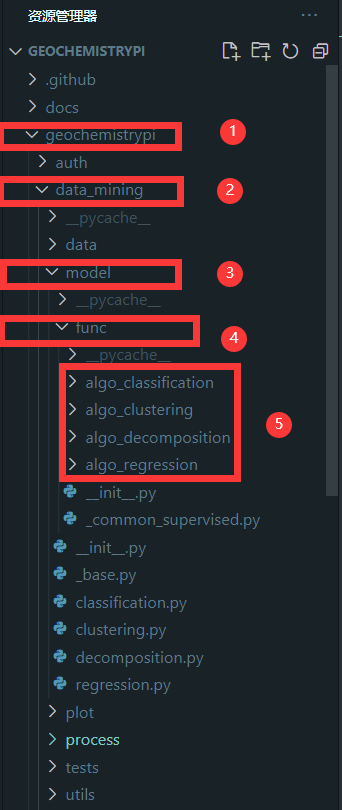

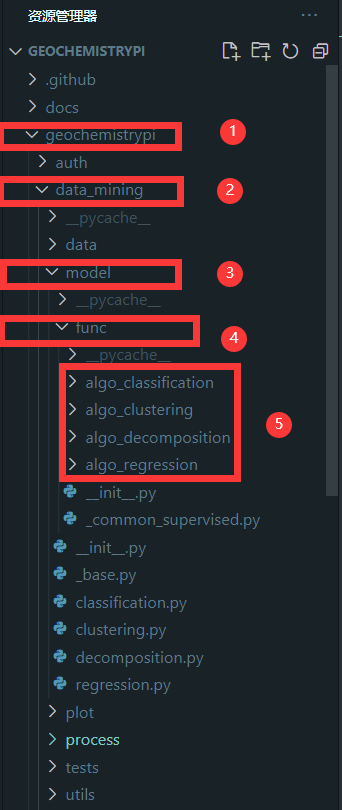

+The framework is a four-layer hierarchical pipeline architecture that promotes the creation of workflow obiects through a set of model selection interfaces. The critical layers of this architecture are, as follows:

-- [2. Add Model](#2-add-model)

- - [2.1 Add The Model Class](#21-add-the-model-class)

- - [2.1.1 Find Add File](#211-find-add-file)

- - [2.1.2 Define class properties and constructors, etc.](#212-define-class-properties-and-constructors-etc)

- - [2.1.3 Define manual\_hyper\_parameters](#213-define-manual_hyper_parameters)

- - [2.1.4 Define special\_components](#214-define-special_components)

+1. Layer 1: the realization of ML model-associated functionalities with specific dependencies or libraries.

+2. Layer 2: the abstract components of the ML model workflow class include regression, classification, clustering, and decomposition.

+3. Layer 3: the scikit-learn API-style model selection interface implements the creation of ML model workflow objects.

+4. Layer 4: the customized automated ML pipeline operated at the command line or through a web interface with a complete data-mining process.

- - [2.2 Add AutoML](#22-add-automl)

- - [2.2.1 Add AutoML code to class](#221-add-automl-code-to-class)

+

+  +

+

- - [2.3 Get the hyperparameter value through interactive methods](#23-get-the-hyperparameter-value-through-interactive-methods)

- - [2.3.1 Find file](#231-find-file)

- - [2.3.2 Create the .py file and add content](#232-create-the-py-file-and-add-content)

- - [2.3.3 Import in the file that defines the model class](#233-import-in-the-file-that-defines-the-model-class)

+This pattern-driven architecture offers developers a standardized and intuitive way to create a ML model workflow class in Layer 2 by using a unified and consistent approach to object creation in Layer 3. Furthermore, it ensures the interchangeability of different model applications, allowing for seamless transitions between methodologies in Layer 1.

- - [2.4 Call Model](#24-call-model)

- - [2.4.1 Find file](#241-find-file)

- - [2.4.2 Import module](#242-import-module)

- - [2.4.3 Call model](#243-call-model)

+ - - [2.5 Add the algorithm list and set NON\_AUTOML\_MODELS](#25-add-the-algorithm-list-and-set-non_automl_models)

- - [2.5.1 Find file](#251-find-file)

+The code of each layer lies as shown above.

- - [2.6 Add Functionality](#26-add-functionality)

+**Notice**: in our framework, a **model workflow class** refers to an **algorithm workflow class** and a **mode** includes multiple model workflow classes.

- - [2.6.1 Model Research](#261-model-research)

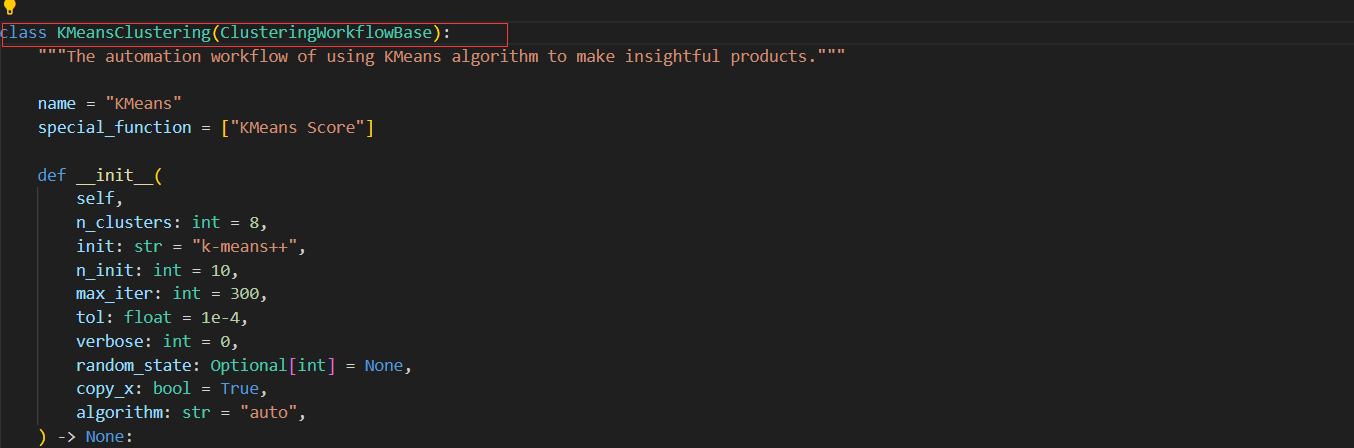

+Now, we will take KMeans algorithm as an example to illustrate the connection between each layer. Don't get too hung up on ths part. Once you finish reading the whole article, you can come back to here again.

- - [2.6.2 Add Common_component](#262-add-common_component)

+After reading this article, you are recommended to refer to this publication also for more details on the whole scope of our framework:

- - [2.6.3 Add Special_component](#263-add-special_component)

+https://agupubs.onlinelibrary.wiley.com/doi/10.1029/2023GC011324

-- [3. Test model](#3-test-model)

-- [4. Completed Pull Request](#4-completed-pull-request)

+## 2. Understand Machine Learning Algorithm

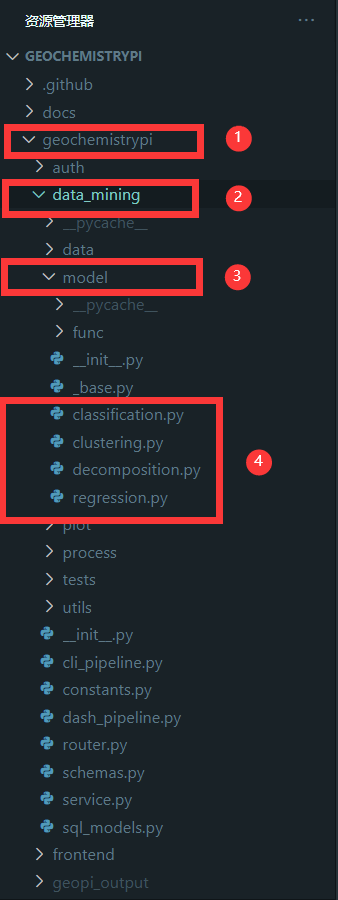

+You need to understand the general meaning of the machine learning algorithm you are responsible for. Then you encapsultate it as an algorithm workflow in our framework and put it under the directory `geochemistrypi/data_mining/model`. Then you need to determine which **mode** this algorithm belongs to and the role of each parameter. For example, linear regression algorithm belongs to regression mode in our framework.

-- [5. Precautions](#5-precautions)

++ When learning the ML algorithm, you can refer to the relevant knowledge on the [scikit-learn official website](https://scikit-learn.org/stable/index.html).

-## 1. Understand the model

-You need to understand the general meaning of the model, determine which algorithm the model belongs to and the role of each parameter.

-+ You can choose to learn about the relevant knowledge on the [scikit-learn official website](https://scikit-learn.org/stable/index.html).

+## 3. Construct Model Workflow Class

+**Noted**: You can reference any existing model workflow classes in our framework to implement your own model workflow class.

-## 2. Add Model

-### 2.1 Add The Model Class

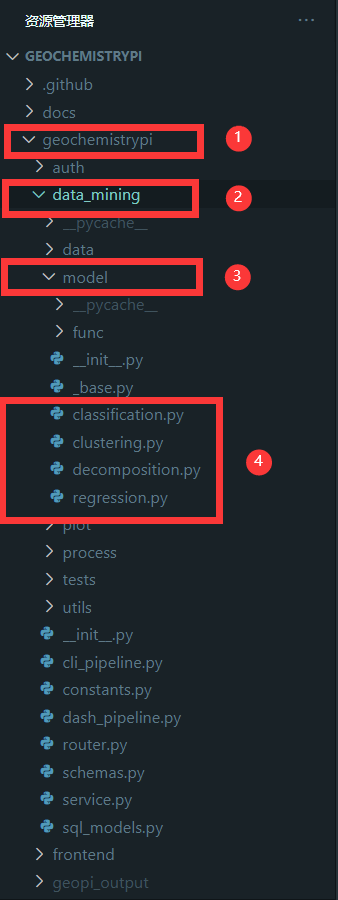

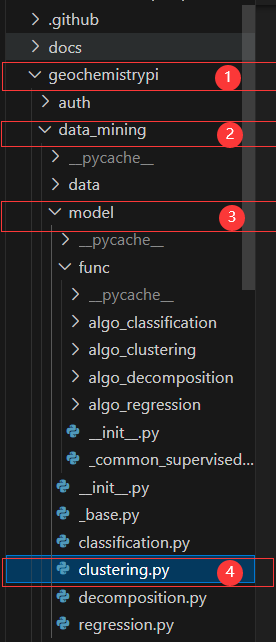

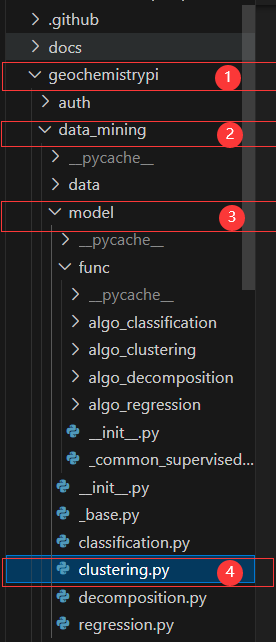

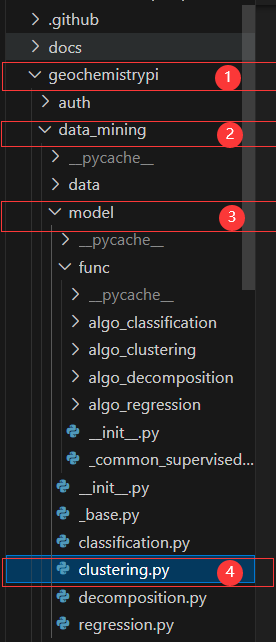

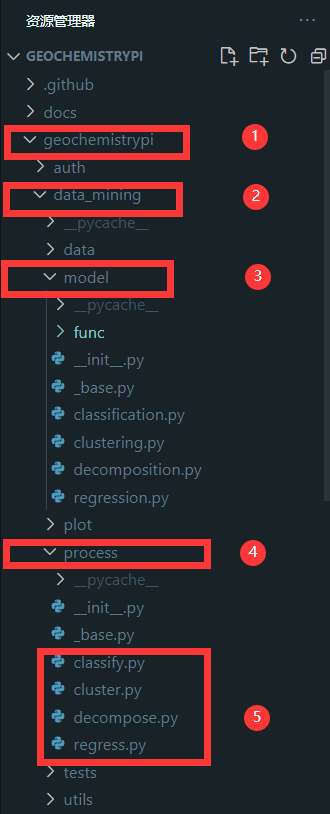

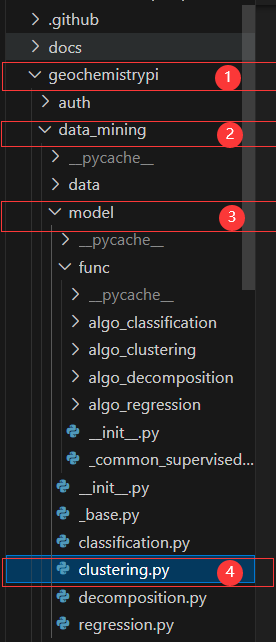

-#### 2.1.1 Find Add File

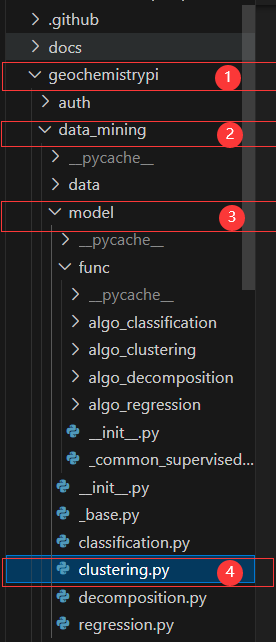

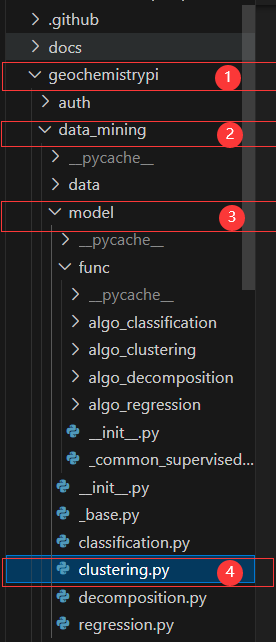

-First, you need to define the model class that you need to complete in the corresponding algorithm file. The corresponding algorithm file is in the `model` folder in the `data_mining` folder in the `geochemistrypi` folder.

+### 3.1 Add Basic Elements

+

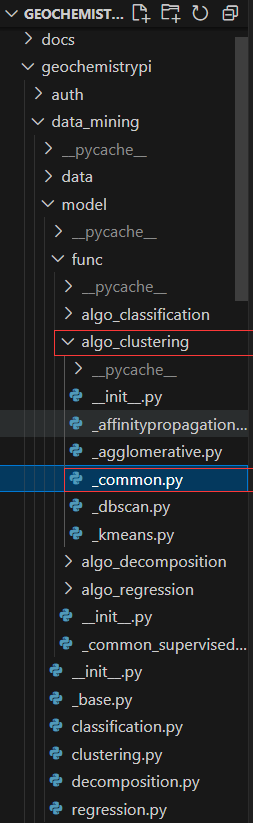

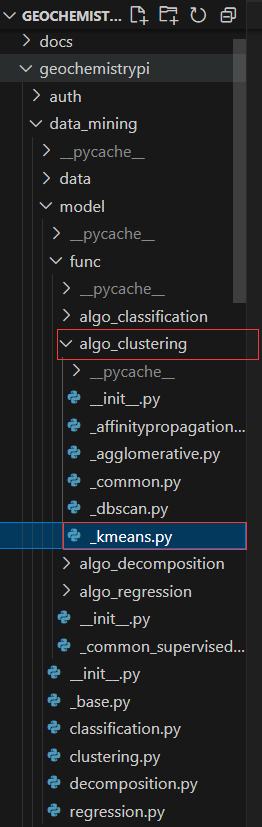

+#### 3.1.1 Find File

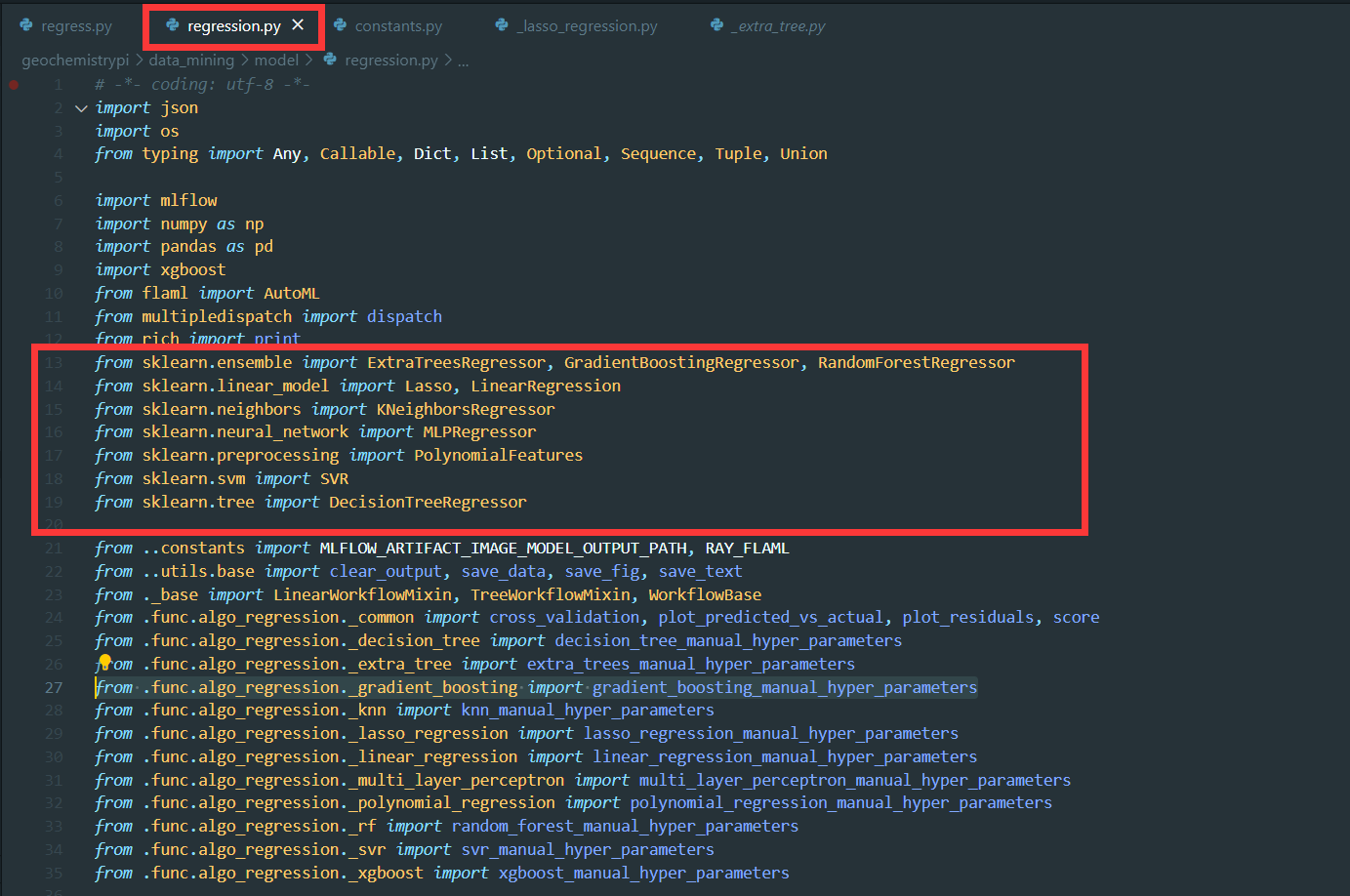

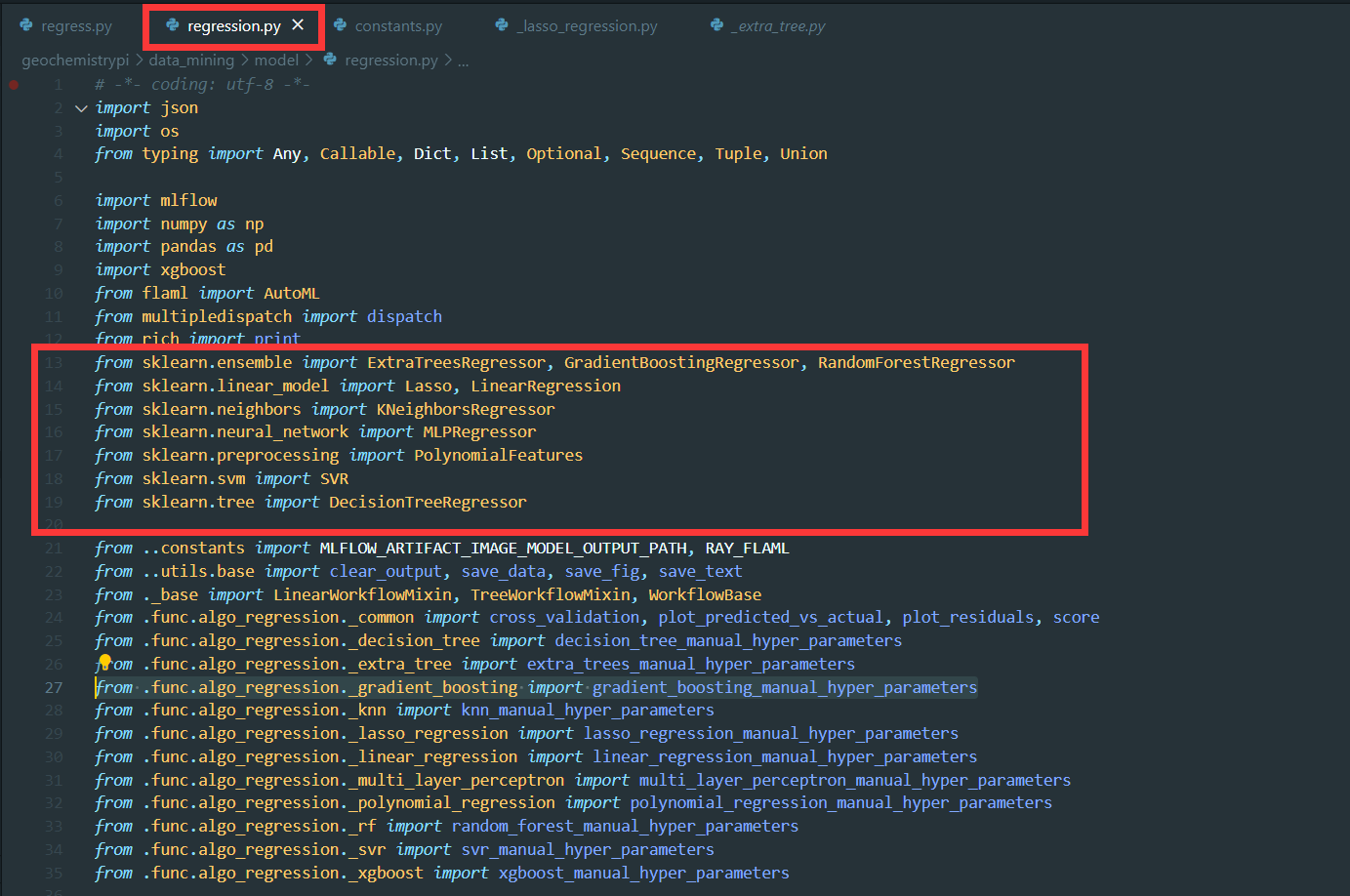

+First, you need to construct the algorithm workflow class in the corresponding model file. The corresponding model file locates under the path `geochemistrypi/data_mining/model`.

-**eg:** If you want to add a model to the regression algorithm, you need to add it in the `regression.py` file.

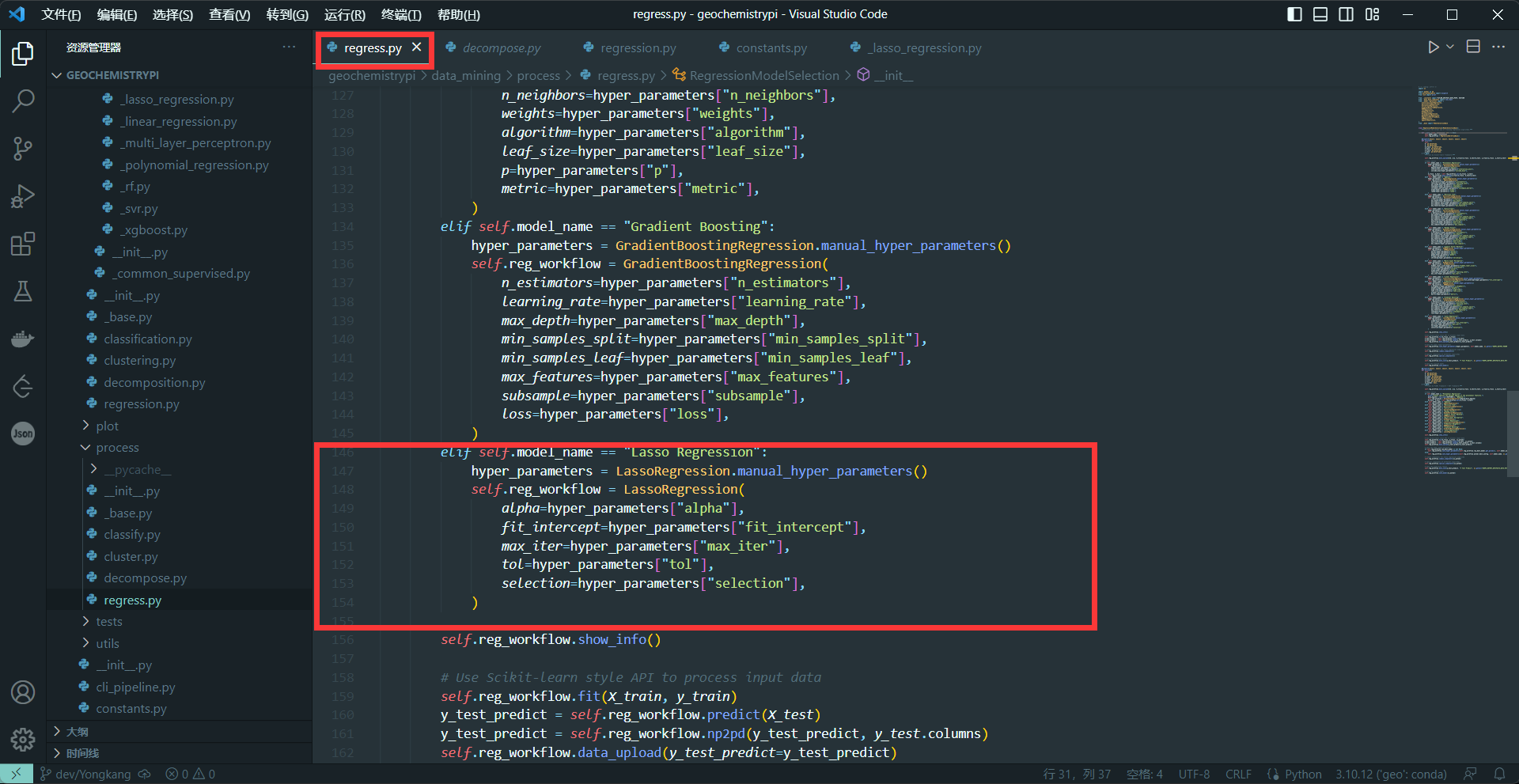

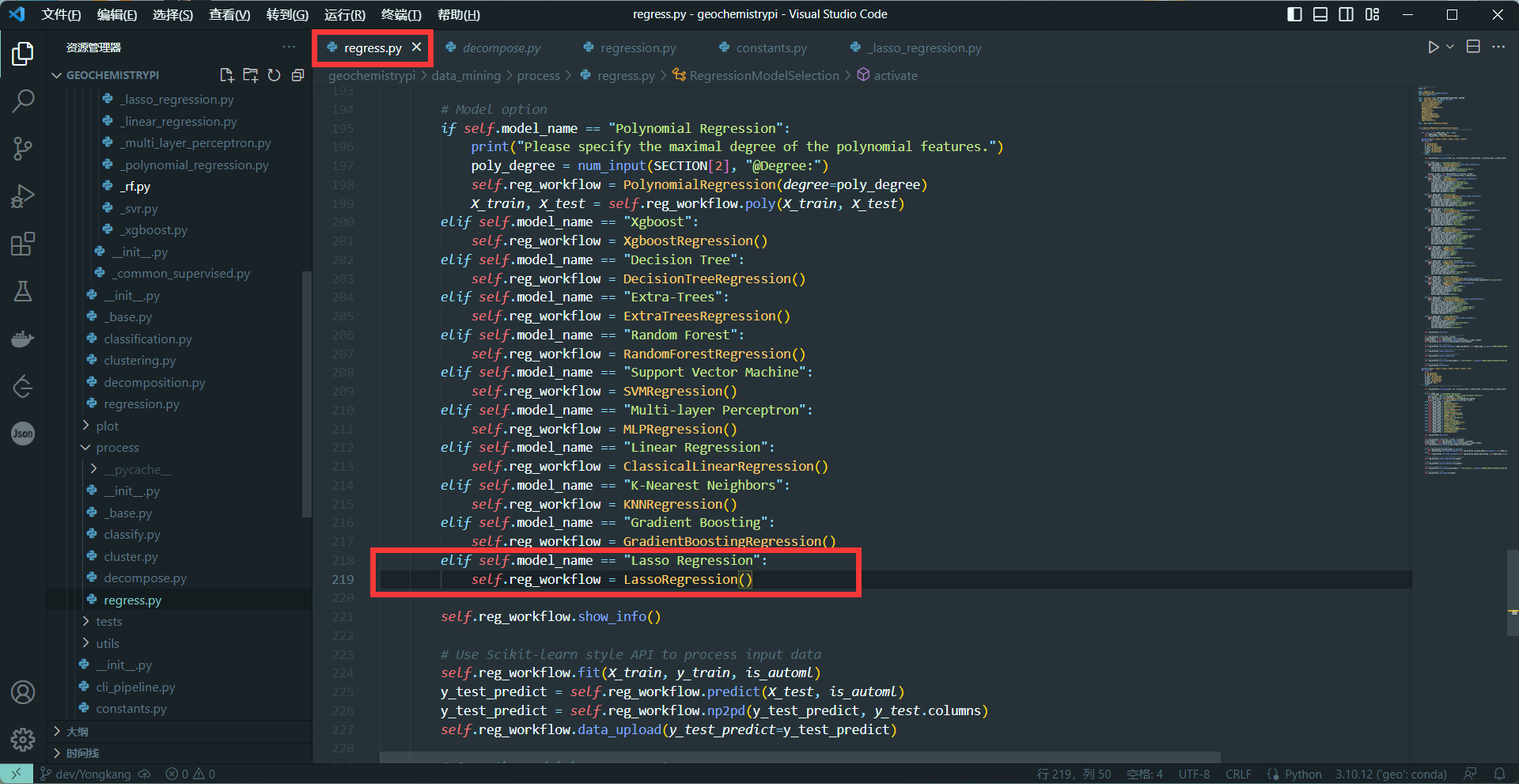

+**E.g.,** If you want to add a model for the regression mode, you need to add it in the `regression.py` file.

+

+#### 3.1.2 Define Class Attributes and Constructor

+

+(1) Define the algorithm workflow class and its base class

-#### 2.1.2 Define class properties and constructors, etc.

-(1) Define the class and the required Base class

```

-class NAME (Base class):

+class ModelWorkflowClassName(BaseModelWorkflowClassName):

```

-+ NAME is the name of the algorithm, you can refer to the NAME of other models, the format needs to be consistent.

-+ Base class needs to be selected according to the actual algorithm requirements.

++ You can refer to the ModelName of other models, the format (Upper case and with the suffix 'Corresponding Mode') needs to be consistent. E.g., `XGBoostRegression`.

++ Base class needs to be inherited according to the mode the model belongs to.

```

-"""The automation workflow of using "Name" to make insightful products."""

+"""The automation workflow of using "ModelWorkflowClassName" algorithm to make insightful products."""

```

-+ Class explanation, you can refer to other classes.

++ Class docstring, you can refer to other classes. The template is shown above.

-(2) Define the name and the special_function

+(2) Define the class attributes `name`

```

-name = "name"

+name = "algorithm terminology"

```

-+ Define name, different from NMAE.

-+ This name needs to be added to the _`constants.py`_ file and the corresponding algorithm file in the `process` folder. Note that the names are consistent.

++ The class attributes `name` is different from ModelWorkflowClassName. E.g., the name `XGBoost` in `XGBoostRegression` model workflow class.

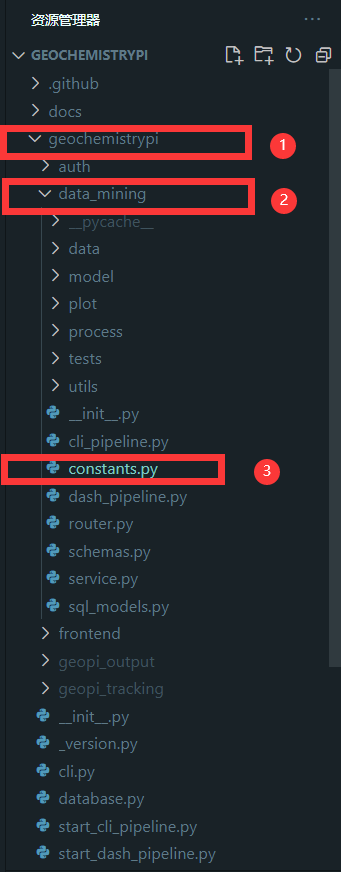

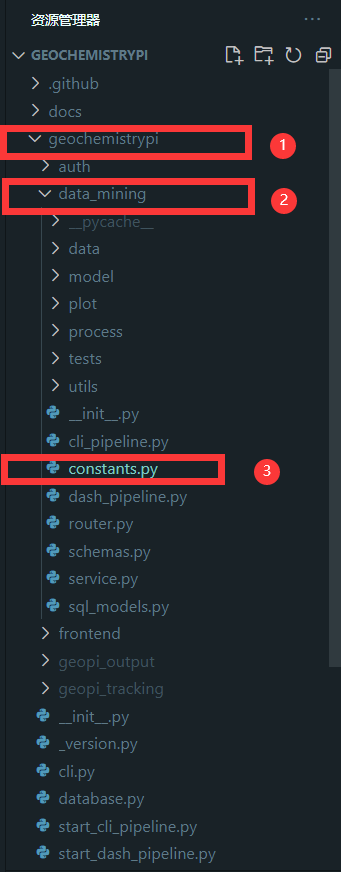

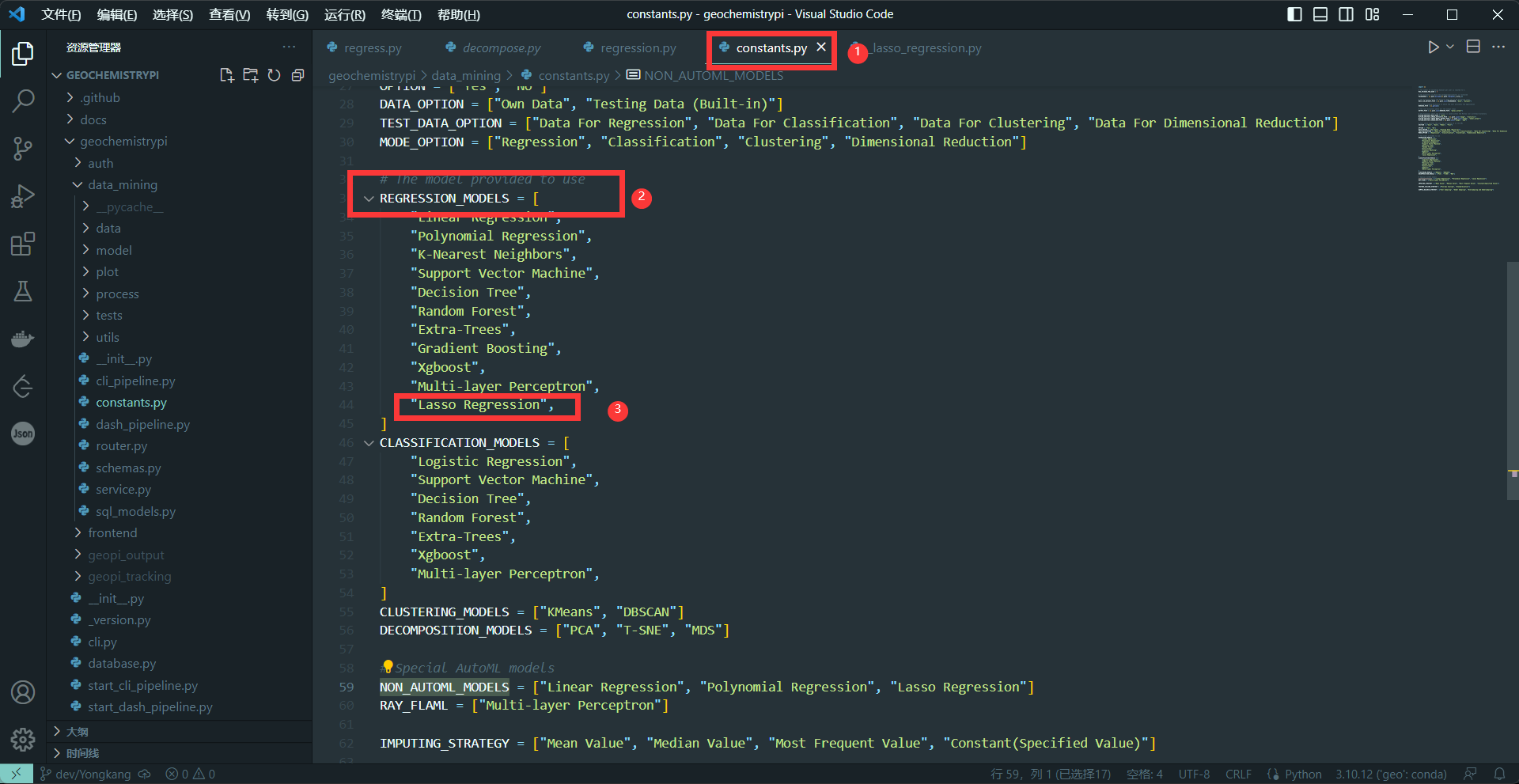

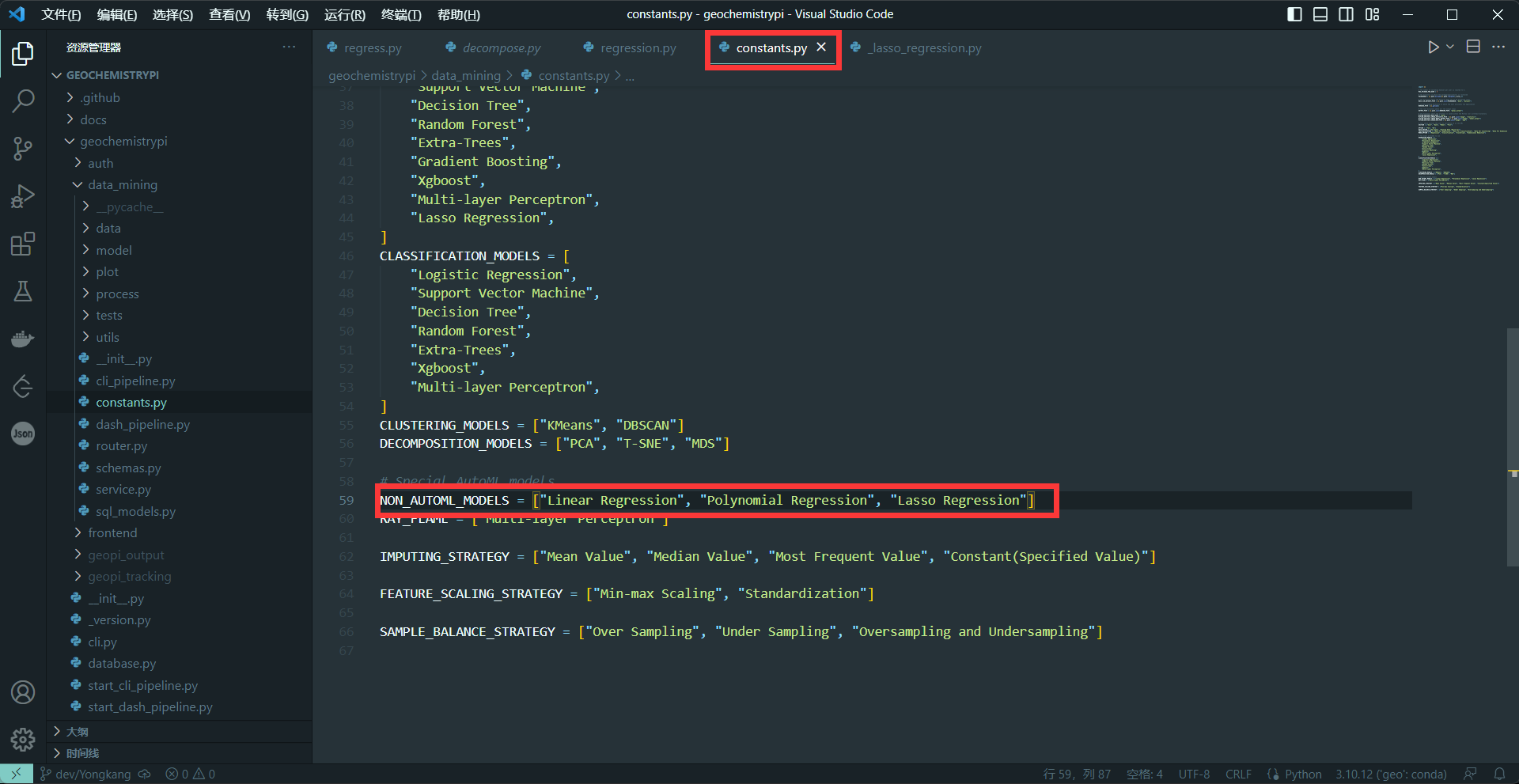

++ This name needs to be added to the corresponding constant variable in `geochemistrypi/data_mining/constants.py` file and the corresponding mode processing file under the `geochemistrypi/data_mining/process` folder. Note that those name value should be identical. It will be further explained in later section.

++ For example, the name value `XGBoost` should be included in the constant varible `REGRESSION_MODELS` in `geochemistrypi/data_mining/constants.py` file and it will be use in `geochemistrypi/data_mining/process/regress.py`.

+

+(3) Define the class attrbutes `common_functiion` or `special_function`

+

+If this model workflow class is a base class, you need to define the class attrbutes `common_functiion`. For example, the class attrbutes `common_functiion` in the base workflow class `RegressionWorkflowBase`.

+

+The values of `common_functiion` are the description of the functionalities of the models belonging to the same mode. It means the children class (all regession models) can share the same common functionalies as well.

+

+```

+common_functiion = []

+```

+

+If this model workflow class is a specific model workflow class, you need to define the class attrbutes `special_function`. For example, the class attrbutes `special_function` in the model workflow class `XGBoostRegression`.

+

+The values of `special_function` are the description of the owned functionalities of that specific model. Those special functions cannot be reused by other models.

+

```

special_function = []

```

-+ special_function is added according to the specific situation of the model, you can refer to other similar models.

-(3) Define constructor

+More detail will be explained in the later section.

+

+(4) Define the signature of the constructor

```

def __init__(

self,

- parameter:type=Default parameter value,

+ parameter: type = Default parameter value,

) -> None:

```

-+ All parameters in the corresponding model function need to be written out.

-+ Default parameter value needs to be set according to official documents.

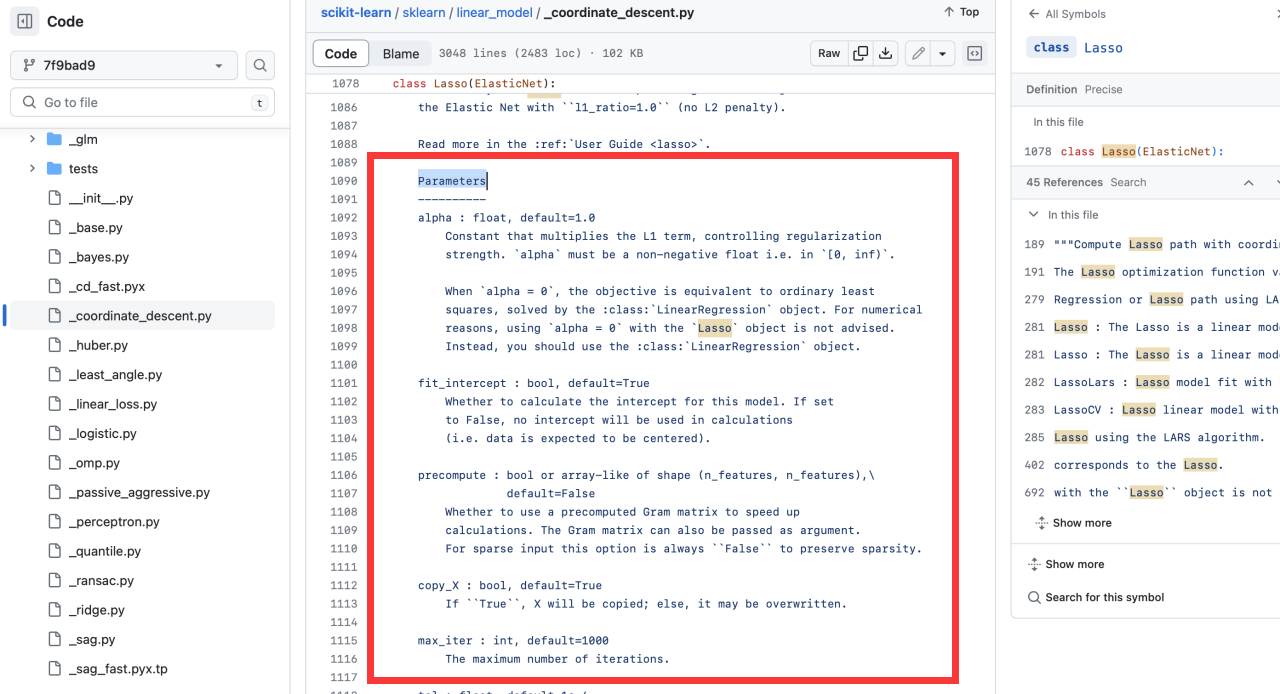

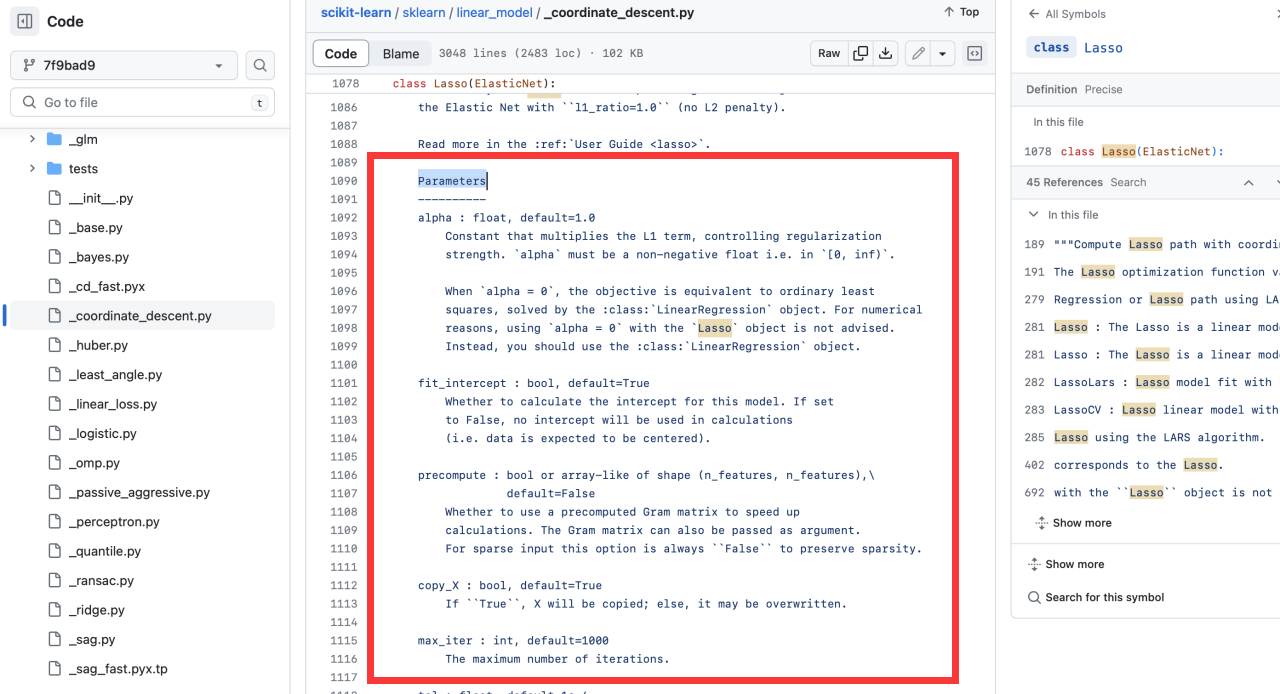

++ The parameters in the constructor is from the algorithm library you depend on. For example, you use **Lasso** algorithm from Sckit-learn library. You can reference its introduction ([Lasso](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.Lasso.html)) in Scikit-learn website.

++ Default parameter value needs to be set according to scikit-learn official documents also.

+

+

+

```

- """

+"""

Parameters

----------

-parameter:type,default = Dedault

+parameter: type,default = Dedault

References

----------

Scikit-learn API: sklearn.model.name

https://scikit-learn.org/......

```

-+ Parameters is in the source of the corresponding model on the official website of sklearn

-

-**eg:** Take the [Lasso algorithm](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.Lasso.html) as a column.

-

-

++ Parameters docstring are in the source code of the corresponding algorithm on the official website of sklearn.

-+ References is your model's official website.

-(4) The constructor of Base class is called

+(5) The constructor of Base class is called

```

super().__init__()

```

-(5) Initializes the instance's state by assigning the parameter values passed to the constructor to the instance's properties.

+

+(6) Initializes the instance's state by assigning the parameter values passed to the constructor to the instance's attributes.

```

self.parameter=parameter

```

-(6) Create the model and assign

+

+(7) Instantiate the algorithm class you depend on and assign. For example, `Lasso` from the library `sklearn.linear_model`.

```

-self.model=modelname(

+self.model = modelname(

parameter=self.parameter

)

```

-**Note:** Don't forget to import Model from scikit-learn

+**Note:** Don't forget to import the class from scikit-learn library

-(7) Define other class properties

+(8) Define the instance attribute `naming`

```

-self.properties=...

-```

-

-#### 2.1.3 Define manual_hyper_parameters

-manual_hyper_parameters gets the hyperparameter value by calling the manual hyperparameter function, and returns hyper_parameters.

+self.naming = Class.name

```

-hyper_parameters = name_manual_hyper_parameters()

-```

-+ This function calls the corresponding function in the `func` folder (needs to be written, see 2.2.2) to get the hyperparameter value.

-

-+ This function is called in the corresponding file of the `Process` folder (need to be written, see 2.3).

-+ Can be written with reference to similar classes

-

+This one will be use to print the name of the class and to activate the AutoML functionality. E.g, `self.naming = LassoRegression.name`. Further explaination is in section 2.2.

-#### 2.1.4 Define special_components

-Its purpose is to Invoke all special application functions for this algorithms by Scikit-learn framework.

-**Note:** The content of this part needs to be selected according to the actual situation of your own model.Can refer to similar classes.

-

-```

-GEOPI_OUTPUT_ARTIFACTS_IMAGE_MODEL_OUTPUT_PATH = os.getenv("GEOPI_OUTPUT_ARTIFACTS_IMAGE_MODEL_OUTPUT_PATH")

-```

-+ This line of code gets the image model output path from the environment variable.

-```

-GEOPI_OUTPUT_ARTIFACTS_PATH = os.getenv("GEOPI_OUTPUT_ARTIFACTS_PATH")

+(9) Define the instance attribute `customized` and `customized_name`

```

-+ This line of code takes the general output artifact path from the environment variable.

-**Note:** You need to choose to add the corresponding path according to the usage in the following functions.

-

-### 2.2 Add AutoML

-#### 2.2.1 Add AutoML code to class

-(1) Set AutoML related parameters

-```

- @property

- def settings(self) -> Dict:

- """The configuration of your model to implement AutoML by FLAML framework."""

- configuration = {

- "time_budget": '...'

- "metric": '...',

- "estimator_list": '...'

- "task": '...'

- }

- return configuration

+self.customized = True

+self.customized_name = "Algorithm Name"

```

-+ "time_budget" represents total running time in seconds

-+ "metric" represents Running metric

-+ "estimator_list" represents list of ML learners

-+ "task" represents task type

-**Note:** You can keep the parameters consistent, or you can modify them to make the AutoML better.

+These will be use to leverage the customization of AutlML functionality. E.g,`self.customized_name = "Lasso"`. Further explaination is in section 2.3.

-(2) Add parameters that need to be AutoML

-You can add the parameter tuning code according to the following code:

-```

- @property

- def customization(self) -> object:

- """The customized 'Your model' of FLAML framework."""

- from flaml import tune

- from flaml.data import 'TPYE'

- from flaml.model import SKLearnEstimator

- from sklearn.ensemble import 'model_name'

-

- class 'Model_Name'(SKLearnEstimator):

- def __init__(self, task=type, n_jobs=None, **config):

- super().__init__(task, **config)

- if task in 'TOYE':

- self.estimator_class = 'model_name'

-

- @classmethod

- def search_space(cls, data_size, task):

- space = {

- "'parameters1'": {"domain": tune.uniform(lower='...', upper='...'), "init_value": '...'},

- "'parameters2'": {"domain": tune.choice([True, False])},

- "'parameters3'": {"domain": tune.randint(lower='...', upper='...'), "init_value": '...'},

- }

- return space

-

- return "Model_Name"

-```

-**Note1:** The content in ' ' needs to be modified according to your specific code

-**Note2:**

+(10) Define other instance attributes

```

- space = {

- "'parameters1'": {"domain": tune.uniform(lower='...', upper='...'), "init_value": '...'},

- "'parameters2'": {"domain": tune.choice([True, False])},

- "'parameters3'": {"domain": tune.randint(lower='...', upper='...'), "init_value": '...'},

- }

+self.attributes=...

```

-+ tune.Uniform represents float

-+ tune.choice represents bool

-+ tune.randint represents int

-+ lower represents the minimum value of the range, upper represents the maximum value of the range, and init_value represents the initial value

-**Note:** You need to select parameters based on the actual situation of the model

-(3) Define special_components(FLAML)

-This part is the same as 2.1.4 as a whole, and can be modified with reference to it, but only the following two points need to be noted:

-a.The multi-dispatch function is different

-Scikit-learn framework:@dispatch()

-FLAML framework:@dispatch(bool)

+### 3.2 Add Manual Hyperparameter Tuning Functionality

-b.Added 'is_automl: bool' to the def

-**eg:**

-```

-Scikit-learn framework:

-def special_components(self, **kwargs) -> None:

+Our framework provides the user to set the algorithm hyperparameter manually or automiacally. In this part, we implement the manual functionality.

-FLAML framework:

-def special_components(self, is_automl: bool, **kwargs) -> None:

-```

-c.self.model has a different name

-**eg:**

-```

-Scikit-learn framework:

-coefficient=self.model.coefficient

-

-FLAML framework:

-coefficient=self.auto_model.coefficient

-```

+Sometimes the users want to input the hyperparameter values for model training manually, so you need to establish an interaction way to get the user's input.

-**Note:** You can refer to other similar codes to complete your code.

+#### 3.2.1 Define manual_hyper_parameters Method

-### 2.3 Get the hyperparameter value through interactive methods

-Sometimes the user wants to modify the hyperparameter values for model training, so you need to establish an interaction to get the user's modifications.

+The manual operation is control by the **manual_hyper_parameters** method. Inside this method, we encapsulate a lower level application function called algorithm_manual_hyper_parameters().

+```

+@classmethod

+def manual_hyper_parameters(cls) -> Dict:

+ """Manual hyper-parameters specification."""

+ print(f"-*-*- {cls.name} - Hyper-parameters Specification -*-*-")

+ hyper_parameters = algorithm_manual_hyper_parameters()

+ clear_output()

+ return hyper_parameters

+```

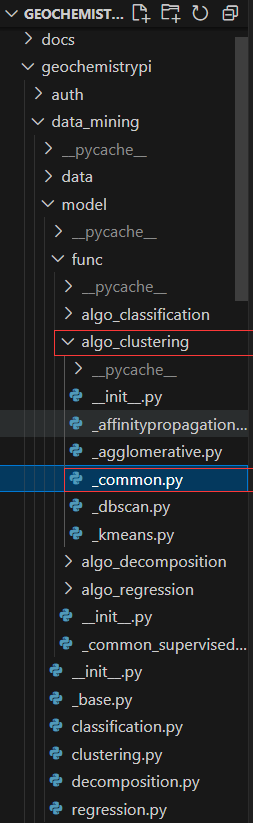

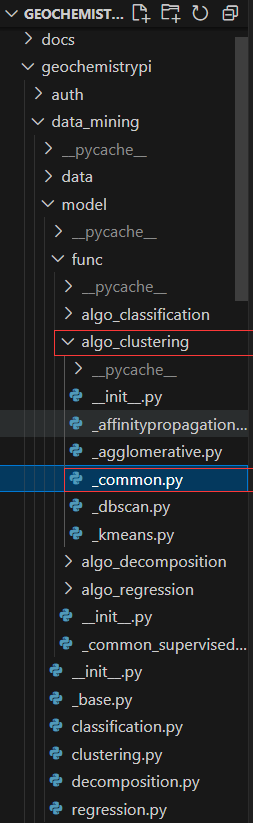

-#### 2.3.1 Find file

-You need to find the corresponding folder for model. The corresponding algorithm file is in the `func` folder in the model folder in the `data_mining` folder in the `geochemistrypi` folder.

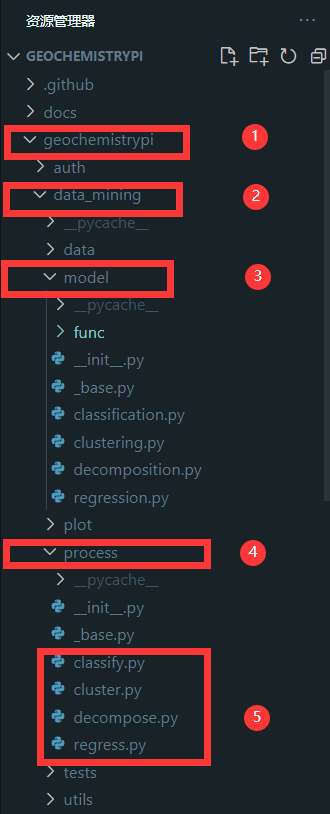

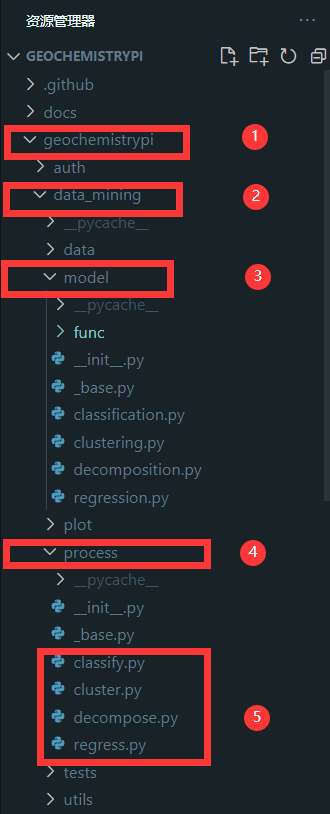

++ The **manual_hyper_parameters** method is called in the corresponding mode operation file under the `geochemistrypi/data_mining/process` folder.

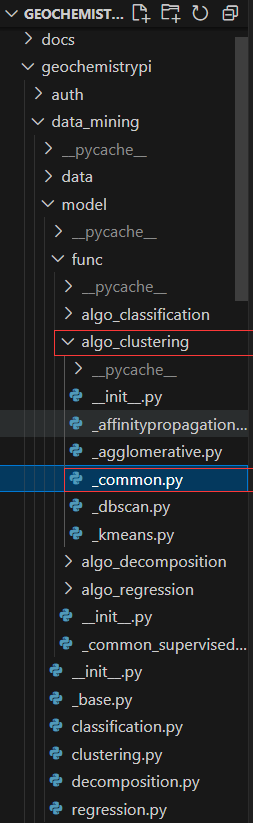

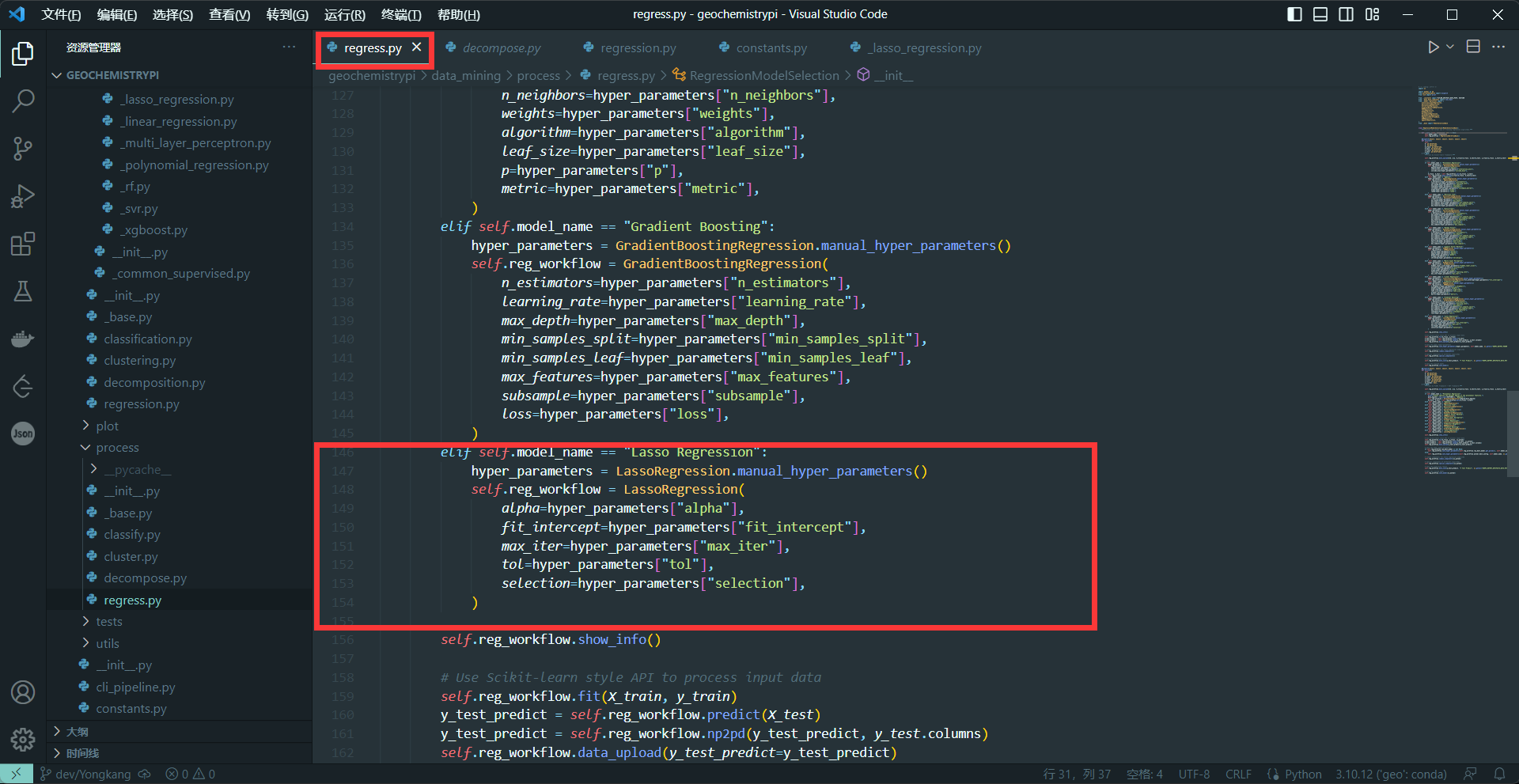

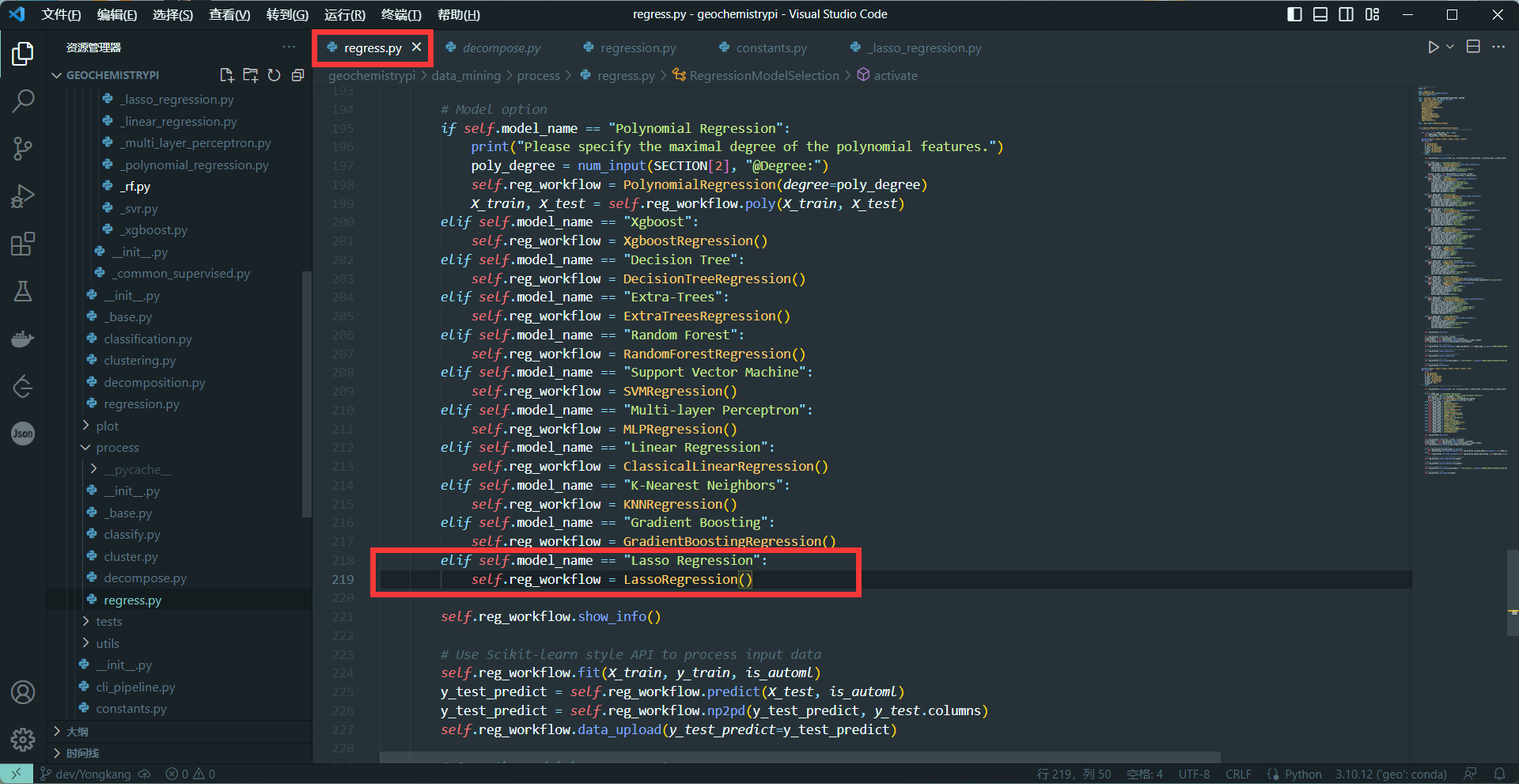

++ This lower level application function locates in the `geochemistrypi/data_mining/model/func/specific_mode` folder which limits the hyperparameters the user can set manually. E.g., If the model workflow class `LassoRegression` belongs to the regression mode, you need to add the `_lasso_regression.py` file under the folder `geochemistrypi/data_mining/model/func/algo_regression`. Here, `_lasso_regression.py` contains all encapsulated application functions specific to lasso algorithm.

-**eg:** If your model belongs to the regression, you need to add the `corresponding.py` file in the `alog_regression` folder.

+#### 3.2.2 Create `_algorithm.py` File

+(1) Create a _algorithm.py file

-#### 2.3.2 Create the .py file and add content

-(1) Create a .py file

**Note:** Keep name format consistent.

(2) Import module

@@ -283,395 +237,644 @@ from ....constants import SECTION

```

from ....data.data_readiness import bool_input, float_input, num_input

```

-+ This needs to choose the appropriate import according to the hyperparameter type of model interaction.

++ You needs to choose the appropriate common utility functions according to the input type of hyperparameter.

-(3) Define the function

+(3) Define the application function

```

-def name_manual_hyper_parameters() -> Dict:

+def algorithm_manual_hyper_parameters() -> Dict:

```

-**Note:** The name needs to be consistent with that in 2.1.3.

(4) Interactive format

```

-print("Hyperparameters: Role")

-print("Recommended value")

-Hyperparameters = type_input(Recommended value, SECTION[2], "@Hyperparameters: ")

+print("Hyperparameters: Explaination")

+print("A good starting value ...")

+Hyperparameters = type_input(Default Value, SECTION[2], "@Hyperparameters: ")

```

-**Note:** The recommended value needs to be the default value of the corresponding package.

+**Note:** You can query ChatGPT for the recommended good starting value. The default value can come from that one in the imported library. For example, check the default value of the specific parameter for `Lasso` algorithm in [Scikit-learn Website](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.Lasso.html).

(5) Integrate all hyperparameters into a dictionary type and return.

```

hyper_parameters = {

- "Hyperparameters1": Hyperparameters1,

- "Hyperparameters": Hyperparameters2,

+ "Hyperparameters1": Hyperparameters1,

+ "Hyperparameters": Hyperparameters2,

}

retuen hyper_parameters

```

-#### 2.3.3 Import in the file that defines the model class

+#### 3.2.3 Import in The Model Workflow Class File

```

-from .func.algo_regression.Name import name_manual_hyper_parameters

+from .func.algo_mode._algorithm.py import algorithm_manual_hyper_parameters

```

-**eg:**

-### 2.4 Call Model

-#### 2.4.1 Find file

-Call the model in the corresponding file in the `process` folder. The corresponding algorithm file is in the `process` folder in the` model` folder in the `data_mining` folder in the `geochemistrypi` folder.

+### 3.3 Add Automated Hyperparameter Tuning (AutoML) Functionality

-

+#### 3.3.1 Add AutoML Code to Model Workflow Class

-**eg:** If your model belongs to the regression,you need to call it in the regress.py file.

+Currently, only supervised learning modes (regression and classification) support AutoML. Hence, only the algorithm belonging to these two modes need to implment AutoML functionality.

-#### 2.4.2 Import module

-You need to add your model in the from ..model.regression import().

-```

-from ..model.regression import(

- ...

- NAME,

-)

-```

-**Note:** NAME needs to be the same as the NAME when defining the class in step 2.1.2.

-**eg:**

+Our framework leverages FLAML + Ray to build the AutoML functionality. For some algorithms, FLAML has encapsulated them. Hence, it is easy to operate with those built-in algorithm. However, some algorithms without encapsulation needs our customization on our own.

-

+There are three cases in total:

++ C1: Encapsulated -> FLAML (Good example: `XGBoostRegression` in `regression.py`)

++ C2: Unencapsulated -> FLAML (Good example: `SVMRegression` in `regression.py`)

++ C3: Unencapsulated -> FLAML + RAY (Good example: `MLPRegression` in `regression.py`)

-#### 2.4.3 Call model

-There are two activate methods defined in the Regression and Classification algorithms, the first method uses the Scikit-learn framework, and the second method uses the FLAML and RAY frameworks. Decomposition and Clustering algorithms only use the Scikit-learn framework. Therefore, in the call, Regression and Classification need to add related codes to implement the call in both methods, and only one time is needed in Clustering and Decomposition.

+Here, we only talk about 2 cases, C1 and C2. C3 is a special case and it is only implemented in MLP algorithm.

-(1) Call model in the first activate method(Including Classification, Regression,Decomposition,Clustering)

-```

-elif self.model_name == "name":

- hyper_parameters = NAME.manual_hyper_parameters()

- self.dcp_workflow = NAME(

- Hyperparameters1=hyper_parameters["Hyperparameters2"],

- Hyperparameters1=hyper_parameters["Hyperparameters2"],

- ...

- )

-```

-+ The name needs to be the same as the name in 2.4

-+ The hyperparameters in NAME() are the hyperparameters obtained interactively in 2.2

-**eg:**

-

+Noted:

-(2)Call model in the second activate method(Including Classification, Regression)

++ The calling method **fit** is defined in the base class, hence, no need to define it again in the specific model workflow class. You can refrence the **fit** method of `RegressionWorkflowBase` in `regression.py`

+

+The following two steps is needed to implement AutoML functionality in the model workflow class. But for C1 it only requires the first step while C2 needs two step both.

+

+(1) Create `settings` method

```

-elif self.model_name == "name":

- self.reg_workflow = NAME()

+@property

+def settings(self) -> Dict:

+ """The configuration of your model to implement AutoML by FLAML framework."""

+ configuration = {

+ "time_budget": '...'

+ "metric": '...',

+ "estimator_list": '...'

+ "task": '...'

+ }

+ return configuration

```

-+ The name needs to be the same as the name in 2.4

-**eg:**

-

++ "time_budget" represents total running time in seconds

++ "metric" represents Running metric

++ "estimator_list" represents list of ML learners

++ "task" represents task type

-### 2.5 Add the algorithm list and set NON_AUTOML_MODELS

+For C1, the value of "estimator_list" should come from the specified name in [FLAML library](https://microsoft.github.io/FLAML/docs/Use-Cases/Task-Oriented-AutoML). For example, the specified name `xgboost` in the model workflow class `XGBoostRegression`. Also we need to put this specified value inside a list.

-#### 2.5.1 Find file

-Find the constants file to add the model name,The constants file is in the `data_mining` folder in the `geochemistrypi` folder.

+

- - [2.5 Add the algorithm list and set NON\_AUTOML\_MODELS](#25-add-the-algorithm-list-and-set-non_automl_models)

- - [2.5.1 Find file](#251-find-file)

+The code of each layer lies as shown above.

- - [2.6 Add Functionality](#26-add-functionality)

+**Notice**: in our framework, a **model workflow class** refers to an **algorithm workflow class** and a **mode** includes multiple model workflow classes.

- - [2.6.1 Model Research](#261-model-research)

+Now, we will take KMeans algorithm as an example to illustrate the connection between each layer. Don't get too hung up on ths part. Once you finish reading the whole article, you can come back to here again.

- - [2.6.2 Add Common_component](#262-add-common_component)

+After reading this article, you are recommended to refer to this publication also for more details on the whole scope of our framework:

- - [2.6.3 Add Special_component](#263-add-special_component)

+https://agupubs.onlinelibrary.wiley.com/doi/10.1029/2023GC011324

-- [3. Test model](#3-test-model)

-- [4. Completed Pull Request](#4-completed-pull-request)

+## 2. Understand Machine Learning Algorithm

+You need to understand the general meaning of the machine learning algorithm you are responsible for. Then you encapsultate it as an algorithm workflow in our framework and put it under the directory `geochemistrypi/data_mining/model`. Then you need to determine which **mode** this algorithm belongs to and the role of each parameter. For example, linear regression algorithm belongs to regression mode in our framework.

-- [5. Precautions](#5-precautions)

++ When learning the ML algorithm, you can refer to the relevant knowledge on the [scikit-learn official website](https://scikit-learn.org/stable/index.html).

-## 1. Understand the model

-You need to understand the general meaning of the model, determine which algorithm the model belongs to and the role of each parameter.

-+ You can choose to learn about the relevant knowledge on the [scikit-learn official website](https://scikit-learn.org/stable/index.html).

+## 3. Construct Model Workflow Class

+**Noted**: You can reference any existing model workflow classes in our framework to implement your own model workflow class.

-## 2. Add Model

-### 2.1 Add The Model Class

-#### 2.1.1 Find Add File

-First, you need to define the model class that you need to complete in the corresponding algorithm file. The corresponding algorithm file is in the `model` folder in the `data_mining` folder in the `geochemistrypi` folder.

+### 3.1 Add Basic Elements

+

+#### 3.1.1 Find File

+First, you need to construct the algorithm workflow class in the corresponding model file. The corresponding model file locates under the path `geochemistrypi/data_mining/model`.

-**eg:** If you want to add a model to the regression algorithm, you need to add it in the `regression.py` file.

+**E.g.,** If you want to add a model for the regression mode, you need to add it in the `regression.py` file.

+

+#### 3.1.2 Define Class Attributes and Constructor

+

+(1) Define the algorithm workflow class and its base class

-#### 2.1.2 Define class properties and constructors, etc.

-(1) Define the class and the required Base class

```

-class NAME (Base class):

+class ModelWorkflowClassName(BaseModelWorkflowClassName):

```

-+ NAME is the name of the algorithm, you can refer to the NAME of other models, the format needs to be consistent.

-+ Base class needs to be selected according to the actual algorithm requirements.

++ You can refer to the ModelName of other models, the format (Upper case and with the suffix 'Corresponding Mode') needs to be consistent. E.g., `XGBoostRegression`.

++ Base class needs to be inherited according to the mode the model belongs to.

```

-"""The automation workflow of using "Name" to make insightful products."""

+"""The automation workflow of using "ModelWorkflowClassName" algorithm to make insightful products."""

```

-+ Class explanation, you can refer to other classes.

++ Class docstring, you can refer to other classes. The template is shown above.

-(2) Define the name and the special_function

+(2) Define the class attributes `name`

```

-name = "name"

+name = "algorithm terminology"

```

-+ Define name, different from NMAE.

-+ This name needs to be added to the _`constants.py`_ file and the corresponding algorithm file in the `process` folder. Note that the names are consistent.

++ The class attributes `name` is different from ModelWorkflowClassName. E.g., the name `XGBoost` in `XGBoostRegression` model workflow class.

++ This name needs to be added to the corresponding constant variable in `geochemistrypi/data_mining/constants.py` file and the corresponding mode processing file under the `geochemistrypi/data_mining/process` folder. Note that those name value should be identical. It will be further explained in later section.

++ For example, the name value `XGBoost` should be included in the constant varible `REGRESSION_MODELS` in `geochemistrypi/data_mining/constants.py` file and it will be use in `geochemistrypi/data_mining/process/regress.py`.

+

+(3) Define the class attrbutes `common_functiion` or `special_function`

+

+If this model workflow class is a base class, you need to define the class attrbutes `common_functiion`. For example, the class attrbutes `common_functiion` in the base workflow class `RegressionWorkflowBase`.

+

+The values of `common_functiion` are the description of the functionalities of the models belonging to the same mode. It means the children class (all regession models) can share the same common functionalies as well.

+

+```

+common_functiion = []

+```

+

+If this model workflow class is a specific model workflow class, you need to define the class attrbutes `special_function`. For example, the class attrbutes `special_function` in the model workflow class `XGBoostRegression`.

+

+The values of `special_function` are the description of the owned functionalities of that specific model. Those special functions cannot be reused by other models.

+

```

special_function = []

```

-+ special_function is added according to the specific situation of the model, you can refer to other similar models.

-(3) Define constructor

+More detail will be explained in the later section.

+

+(4) Define the signature of the constructor

```

def __init__(

self,

- parameter:type=Default parameter value,

+ parameter: type = Default parameter value,

) -> None:

```

-+ All parameters in the corresponding model function need to be written out.

-+ Default parameter value needs to be set according to official documents.

++ The parameters in the constructor is from the algorithm library you depend on. For example, you use **Lasso** algorithm from Sckit-learn library. You can reference its introduction ([Lasso](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.Lasso.html)) in Scikit-learn website.

++ Default parameter value needs to be set according to scikit-learn official documents also.

+

+

+

```

- """

+"""

Parameters

----------

-parameter:type,default = Dedault

+parameter: type,default = Dedault

References

----------

Scikit-learn API: sklearn.model.name

https://scikit-learn.org/......

```

-+ Parameters is in the source of the corresponding model on the official website of sklearn

-

-**eg:** Take the [Lasso algorithm](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.Lasso.html) as a column.

-

-

++ Parameters docstring are in the source code of the corresponding algorithm on the official website of sklearn.

-+ References is your model's official website.

-(4) The constructor of Base class is called

+(5) The constructor of Base class is called

```

super().__init__()

```

-(5) Initializes the instance's state by assigning the parameter values passed to the constructor to the instance's properties.

+

+(6) Initializes the instance's state by assigning the parameter values passed to the constructor to the instance's attributes.

```

self.parameter=parameter

```

-(6) Create the model and assign

+

+(7) Instantiate the algorithm class you depend on and assign. For example, `Lasso` from the library `sklearn.linear_model`.

```

-self.model=modelname(

+self.model = modelname(

parameter=self.parameter

)

```

-**Note:** Don't forget to import Model from scikit-learn

+**Note:** Don't forget to import the class from scikit-learn library

-(7) Define other class properties

+(8) Define the instance attribute `naming`

```

-self.properties=...

-```

-

-#### 2.1.3 Define manual_hyper_parameters

-manual_hyper_parameters gets the hyperparameter value by calling the manual hyperparameter function, and returns hyper_parameters.

+self.naming = Class.name

```

-hyper_parameters = name_manual_hyper_parameters()

-```

-+ This function calls the corresponding function in the `func` folder (needs to be written, see 2.2.2) to get the hyperparameter value.

-

-+ This function is called in the corresponding file of the `Process` folder (need to be written, see 2.3).

-+ Can be written with reference to similar classes

-

+This one will be use to print the name of the class and to activate the AutoML functionality. E.g, `self.naming = LassoRegression.name`. Further explaination is in section 2.2.

-#### 2.1.4 Define special_components

-Its purpose is to Invoke all special application functions for this algorithms by Scikit-learn framework.

-**Note:** The content of this part needs to be selected according to the actual situation of your own model.Can refer to similar classes.

-

-```

-GEOPI_OUTPUT_ARTIFACTS_IMAGE_MODEL_OUTPUT_PATH = os.getenv("GEOPI_OUTPUT_ARTIFACTS_IMAGE_MODEL_OUTPUT_PATH")

-```

-+ This line of code gets the image model output path from the environment variable.

-```

-GEOPI_OUTPUT_ARTIFACTS_PATH = os.getenv("GEOPI_OUTPUT_ARTIFACTS_PATH")

+(9) Define the instance attribute `customized` and `customized_name`

```

-+ This line of code takes the general output artifact path from the environment variable.

-**Note:** You need to choose to add the corresponding path according to the usage in the following functions.

-

-### 2.2 Add AutoML

-#### 2.2.1 Add AutoML code to class

-(1) Set AutoML related parameters

-```

- @property

- def settings(self) -> Dict:

- """The configuration of your model to implement AutoML by FLAML framework."""

- configuration = {

- "time_budget": '...'

- "metric": '...',

- "estimator_list": '...'

- "task": '...'

- }

- return configuration

+self.customized = True

+self.customized_name = "Algorithm Name"

```

-+ "time_budget" represents total running time in seconds

-+ "metric" represents Running metric

-+ "estimator_list" represents list of ML learners

-+ "task" represents task type

-**Note:** You can keep the parameters consistent, or you can modify them to make the AutoML better.

+These will be use to leverage the customization of AutlML functionality. E.g,`self.customized_name = "Lasso"`. Further explaination is in section 2.3.

-(2) Add parameters that need to be AutoML

-You can add the parameter tuning code according to the following code:

-```

- @property

- def customization(self) -> object:

- """The customized 'Your model' of FLAML framework."""

- from flaml import tune

- from flaml.data import 'TPYE'

- from flaml.model import SKLearnEstimator

- from sklearn.ensemble import 'model_name'

-

- class 'Model_Name'(SKLearnEstimator):

- def __init__(self, task=type, n_jobs=None, **config):

- super().__init__(task, **config)

- if task in 'TOYE':

- self.estimator_class = 'model_name'

-

- @classmethod

- def search_space(cls, data_size, task):

- space = {

- "'parameters1'": {"domain": tune.uniform(lower='...', upper='...'), "init_value": '...'},

- "'parameters2'": {"domain": tune.choice([True, False])},

- "'parameters3'": {"domain": tune.randint(lower='...', upper='...'), "init_value": '...'},

- }

- return space

-

- return "Model_Name"

-```

-**Note1:** The content in ' ' needs to be modified according to your specific code

-**Note2:**

+(10) Define other instance attributes

```

- space = {

- "'parameters1'": {"domain": tune.uniform(lower='...', upper='...'), "init_value": '...'},

- "'parameters2'": {"domain": tune.choice([True, False])},

- "'parameters3'": {"domain": tune.randint(lower='...', upper='...'), "init_value": '...'},

- }

+self.attributes=...

```

-+ tune.Uniform represents float

-+ tune.choice represents bool

-+ tune.randint represents int

-+ lower represents the minimum value of the range, upper represents the maximum value of the range, and init_value represents the initial value

-**Note:** You need to select parameters based on the actual situation of the model

-(3) Define special_components(FLAML)

-This part is the same as 2.1.4 as a whole, and can be modified with reference to it, but only the following two points need to be noted:

-a.The multi-dispatch function is different

-Scikit-learn framework:@dispatch()

-FLAML framework:@dispatch(bool)

+### 3.2 Add Manual Hyperparameter Tuning Functionality

-b.Added 'is_automl: bool' to the def

-**eg:**

-```

-Scikit-learn framework:

-def special_components(self, **kwargs) -> None:

+Our framework provides the user to set the algorithm hyperparameter manually or automiacally. In this part, we implement the manual functionality.

-FLAML framework:

-def special_components(self, is_automl: bool, **kwargs) -> None:

-```

-c.self.model has a different name

-**eg:**

-```

-Scikit-learn framework:

-coefficient=self.model.coefficient

-

-FLAML framework:

-coefficient=self.auto_model.coefficient

-```

+Sometimes the users want to input the hyperparameter values for model training manually, so you need to establish an interaction way to get the user's input.

-**Note:** You can refer to other similar codes to complete your code.

+#### 3.2.1 Define manual_hyper_parameters Method

-### 2.3 Get the hyperparameter value through interactive methods

-Sometimes the user wants to modify the hyperparameter values for model training, so you need to establish an interaction to get the user's modifications.

+The manual operation is control by the **manual_hyper_parameters** method. Inside this method, we encapsulate a lower level application function called algorithm_manual_hyper_parameters().

+```

+@classmethod

+def manual_hyper_parameters(cls) -> Dict:

+ """Manual hyper-parameters specification."""

+ print(f"-*-*- {cls.name} - Hyper-parameters Specification -*-*-")

+ hyper_parameters = algorithm_manual_hyper_parameters()

+ clear_output()

+ return hyper_parameters

+```

-#### 2.3.1 Find file

-You need to find the corresponding folder for model. The corresponding algorithm file is in the `func` folder in the model folder in the `data_mining` folder in the `geochemistrypi` folder.

++ The **manual_hyper_parameters** method is called in the corresponding mode operation file under the `geochemistrypi/data_mining/process` folder.

++ This lower level application function locates in the `geochemistrypi/data_mining/model/func/specific_mode` folder which limits the hyperparameters the user can set manually. E.g., If the model workflow class `LassoRegression` belongs to the regression mode, you need to add the `_lasso_regression.py` file under the folder `geochemistrypi/data_mining/model/func/algo_regression`. Here, `_lasso_regression.py` contains all encapsulated application functions specific to lasso algorithm.

-**eg:** If your model belongs to the regression, you need to add the `corresponding.py` file in the `alog_regression` folder.

+#### 3.2.2 Create `_algorithm.py` File

+(1) Create a _algorithm.py file

-#### 2.3.2 Create the .py file and add content

-(1) Create a .py file

**Note:** Keep name format consistent.

(2) Import module

@@ -283,395 +237,644 @@ from ....constants import SECTION

```

from ....data.data_readiness import bool_input, float_input, num_input

```

-+ This needs to choose the appropriate import according to the hyperparameter type of model interaction.

++ You needs to choose the appropriate common utility functions according to the input type of hyperparameter.

-(3) Define the function

+(3) Define the application function

```

-def name_manual_hyper_parameters() -> Dict:

+def algorithm_manual_hyper_parameters() -> Dict:

```

-**Note:** The name needs to be consistent with that in 2.1.3.

(4) Interactive format

```

-print("Hyperparameters: Role")

-print("Recommended value")

-Hyperparameters = type_input(Recommended value, SECTION[2], "@Hyperparameters: ")

+print("Hyperparameters: Explaination")

+print("A good starting value ...")

+Hyperparameters = type_input(Default Value, SECTION[2], "@Hyperparameters: ")

```

-**Note:** The recommended value needs to be the default value of the corresponding package.

+**Note:** You can query ChatGPT for the recommended good starting value. The default value can come from that one in the imported library. For example, check the default value of the specific parameter for `Lasso` algorithm in [Scikit-learn Website](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.Lasso.html).

(5) Integrate all hyperparameters into a dictionary type and return.

```

hyper_parameters = {

- "Hyperparameters1": Hyperparameters1,

- "Hyperparameters": Hyperparameters2,

+ "Hyperparameters1": Hyperparameters1,

+ "Hyperparameters": Hyperparameters2,

}

retuen hyper_parameters

```

-#### 2.3.3 Import in the file that defines the model class

+#### 3.2.3 Import in The Model Workflow Class File

```

-from .func.algo_regression.Name import name_manual_hyper_parameters

+from .func.algo_mode._algorithm.py import algorithm_manual_hyper_parameters

```

-**eg:**

-### 2.4 Call Model

-#### 2.4.1 Find file

-Call the model in the corresponding file in the `process` folder. The corresponding algorithm file is in the `process` folder in the` model` folder in the `data_mining` folder in the `geochemistrypi` folder.

+### 3.3 Add Automated Hyperparameter Tuning (AutoML) Functionality

-

+#### 3.3.1 Add AutoML Code to Model Workflow Class

-**eg:** If your model belongs to the regression,you need to call it in the regress.py file.

+Currently, only supervised learning modes (regression and classification) support AutoML. Hence, only the algorithm belonging to these two modes need to implment AutoML functionality.

-#### 2.4.2 Import module

-You need to add your model in the from ..model.regression import().

-```

-from ..model.regression import(

- ...

- NAME,

-)

-```

-**Note:** NAME needs to be the same as the NAME when defining the class in step 2.1.2.

-**eg:**

+Our framework leverages FLAML + Ray to build the AutoML functionality. For some algorithms, FLAML has encapsulated them. Hence, it is easy to operate with those built-in algorithm. However, some algorithms without encapsulation needs our customization on our own.

-

+There are three cases in total:

++ C1: Encapsulated -> FLAML (Good example: `XGBoostRegression` in `regression.py`)

++ C2: Unencapsulated -> FLAML (Good example: `SVMRegression` in `regression.py`)

++ C3: Unencapsulated -> FLAML + RAY (Good example: `MLPRegression` in `regression.py`)

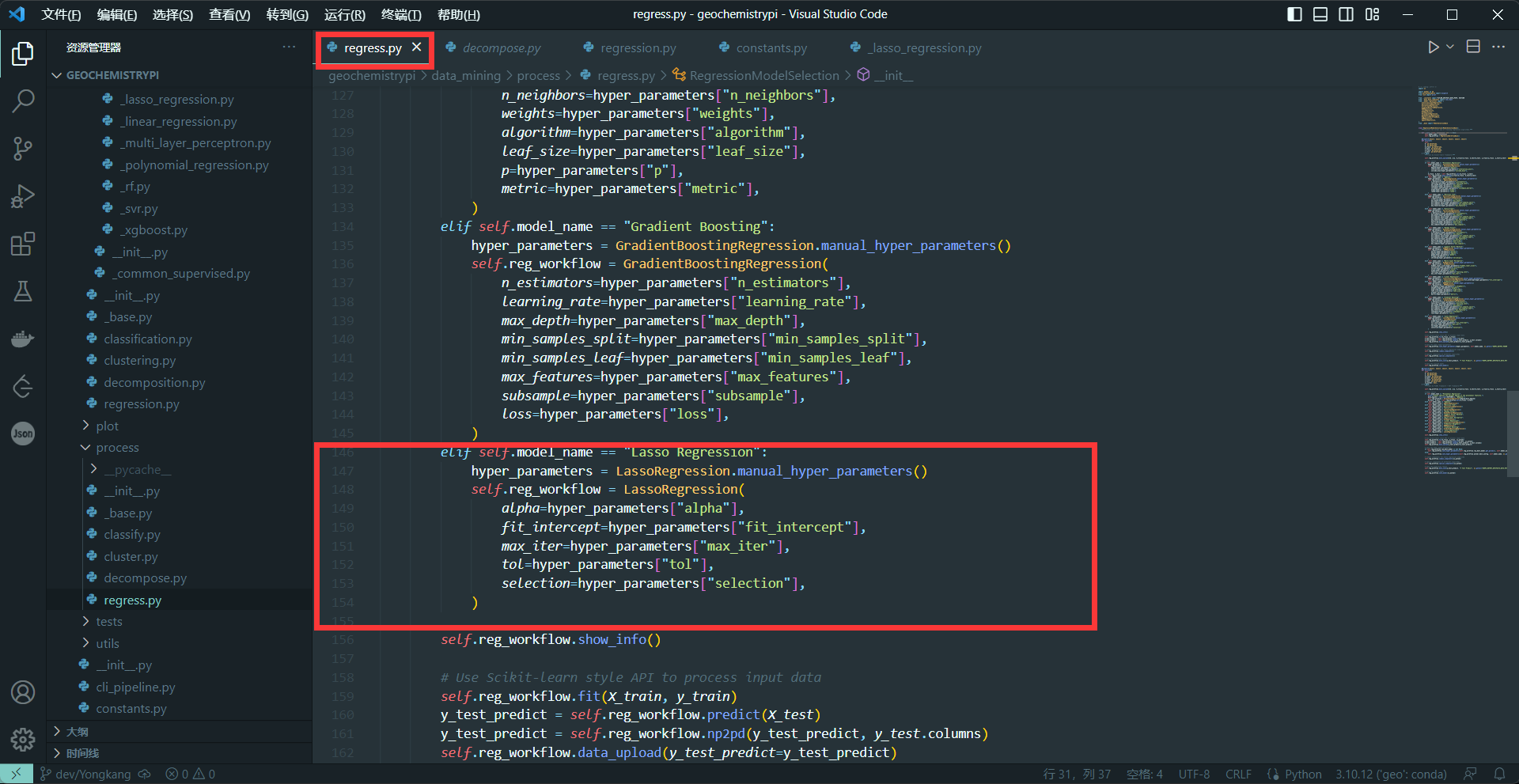

-#### 2.4.3 Call model

-There are two activate methods defined in the Regression and Classification algorithms, the first method uses the Scikit-learn framework, and the second method uses the FLAML and RAY frameworks. Decomposition and Clustering algorithms only use the Scikit-learn framework. Therefore, in the call, Regression and Classification need to add related codes to implement the call in both methods, and only one time is needed in Clustering and Decomposition.

+Here, we only talk about 2 cases, C1 and C2. C3 is a special case and it is only implemented in MLP algorithm.

-(1) Call model in the first activate method(Including Classification, Regression,Decomposition,Clustering)

-```

-elif self.model_name == "name":

- hyper_parameters = NAME.manual_hyper_parameters()

- self.dcp_workflow = NAME(

- Hyperparameters1=hyper_parameters["Hyperparameters2"],

- Hyperparameters1=hyper_parameters["Hyperparameters2"],

- ...

- )

-```

-+ The name needs to be the same as the name in 2.4

-+ The hyperparameters in NAME() are the hyperparameters obtained interactively in 2.2

-**eg:**

-

+Noted:

-(2)Call model in the second activate method(Including Classification, Regression)

++ The calling method **fit** is defined in the base class, hence, no need to define it again in the specific model workflow class. You can refrence the **fit** method of `RegressionWorkflowBase` in `regression.py`

+

+The following two steps is needed to implement AutoML functionality in the model workflow class. But for C1 it only requires the first step while C2 needs two step both.

+

+(1) Create `settings` method

```

-elif self.model_name == "name":

- self.reg_workflow = NAME()

+@property

+def settings(self) -> Dict:

+ """The configuration of your model to implement AutoML by FLAML framework."""

+ configuration = {

+ "time_budget": '...'

+ "metric": '...',

+ "estimator_list": '...'

+ "task": '...'

+ }

+ return configuration

```

-+ The name needs to be the same as the name in 2.4

-**eg:**

-

++ "time_budget" represents total running time in seconds

++ "metric" represents Running metric

++ "estimator_list" represents list of ML learners

++ "task" represents task type

-### 2.5 Add the algorithm list and set NON_AUTOML_MODELS

+For C1, the value of "estimator_list" should come from the specified name in [FLAML library](https://microsoft.github.io/FLAML/docs/Use-Cases/Task-Oriented-AutoML). For example, the specified name `xgboost` in the model workflow class `XGBoostRegression`. Also we need to put this specified value inside a list.

-#### 2.5.1 Find file

-Find the constants file to add the model name,The constants file is in the `data_mining` folder in the `geochemistrypi` folder.

+ -

+For C2, the value of "estimator_list" should be the instance attribute `self.customized_name`. For example, `self.customized_name = "SVR"` in the model workflow class `SVMRegression`. Also we need to put this specified value inside a list.

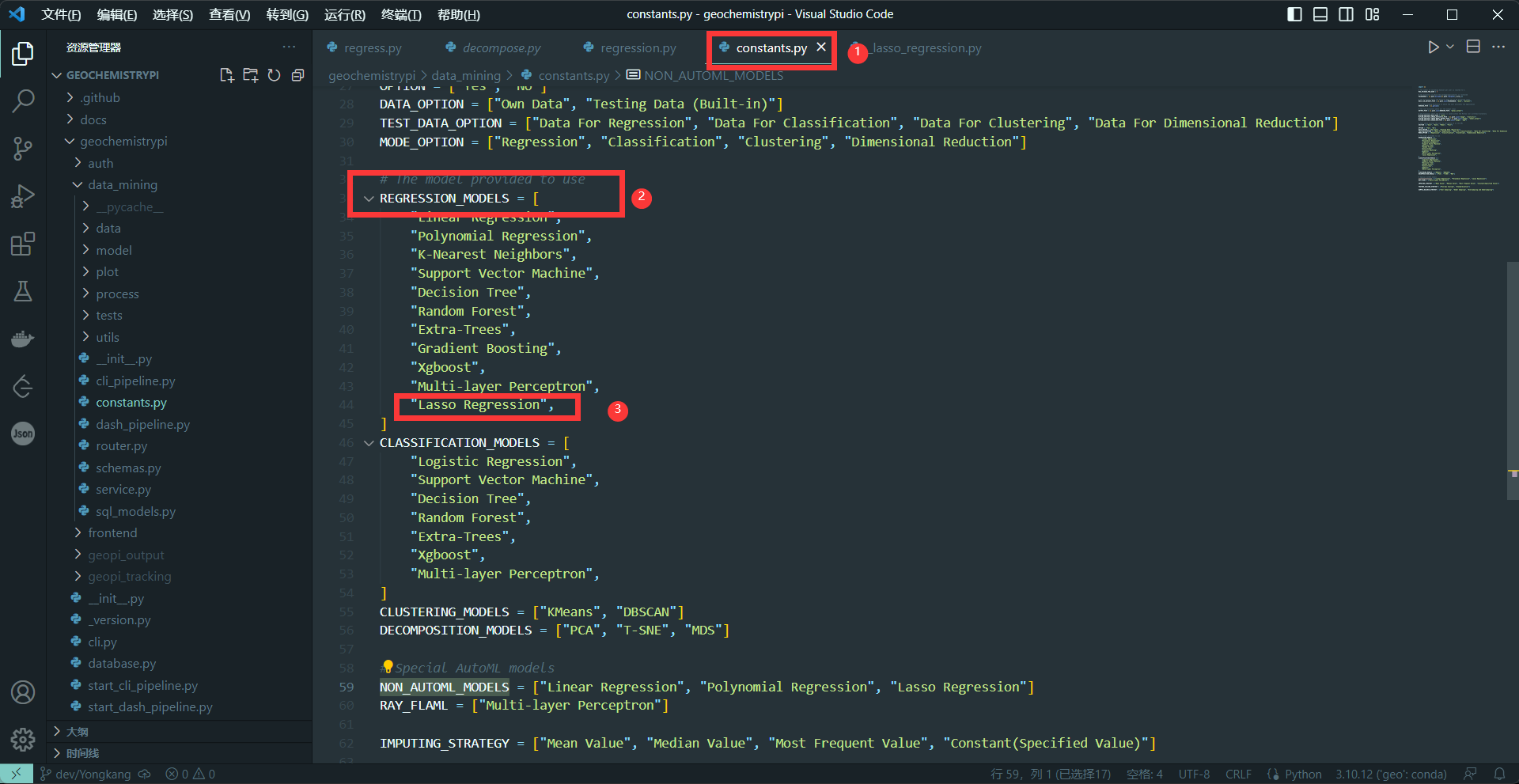

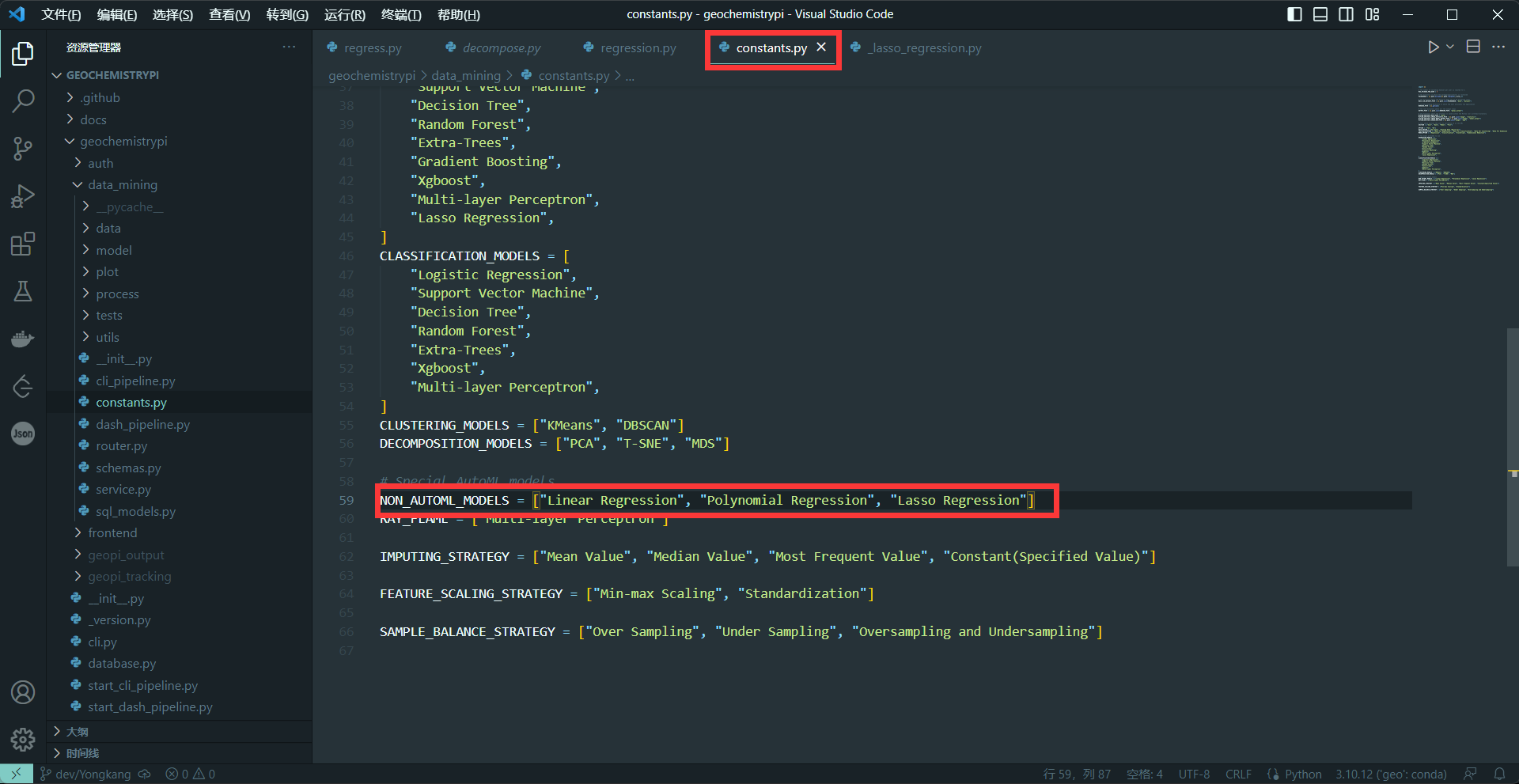

-(1) Add the model name

-Add model name to the algorithm list corresponding to the model in the constants file.

-**eg:** Add the name of the Lasso regression algorithm.

-

-

-(2)set NON_AUTOML_MODELS

-Because this is a tutorial without automatic parameters, you need to add the model name in the NON_AUTOML_MODELS.

-**eg:**

-

+**Note:** You can keep the other key-value pair consistent with other exited model workflow classes.

+(2) Create `customization` method

+You can add the parameter tuning code according to the following code:

+```

+@property

+def customization(self) -> object:

+ """The customized 'Your model' of FLAML framework."""

+ from flaml import tune

+ from flaml.data import 'TPYE'

+ from flaml.model import SKLearnEstimator

+ from 'sklearn' import 'model_name'

+

+ class 'Model_Name'(SKLearnEstimator):

+ def __init__(self, task=type, n_jobs=None, **config):

+ super().__init__(task, **config)

+ if task in 'TYPE':

+ self.estimator_class = 'model_name'

+

+ @classmethod

+ def search_space(cls, data_size, task):

+ space = {

+ "'parameters1'": {"domain": tune.uniform(lower='...', upper='...'), "init_value": '...'},

+ "'parameters2'": {"domain": tune.choice([True, False])},

+ "'parameters3'": {"domain": tune.randint(lower='...', upper='...'), "init_value": '...'},

+ }

+ return space

+

+ return "Model_Name"

+```

+**Note1:** The content in ' ' needs to be modified according to your specific code. You can reference that one in the model workflow class `SVMRegression`.

+**Note2:**

+```

+space = {

+ "'parameters1'": {"domain": tune.uniform(lower='...', upper='...'), "init_value": '...'},

+ "'parameters2'": {"domain": tune.choice([True, False])},

+ "'parameters3'": {"domain": tune.randint(lower='...', upper='...'), "init_value": '...'},

+}

+```

++ tune.uniform represents float

++ tune.choice represents bool

++ tune.randint represents int

++ lower represents the minimum value of the range, upper represents the maximum value of the range, and init_value represents the initial value

+**Note:** You need to select parameters based on the actual situation of the model

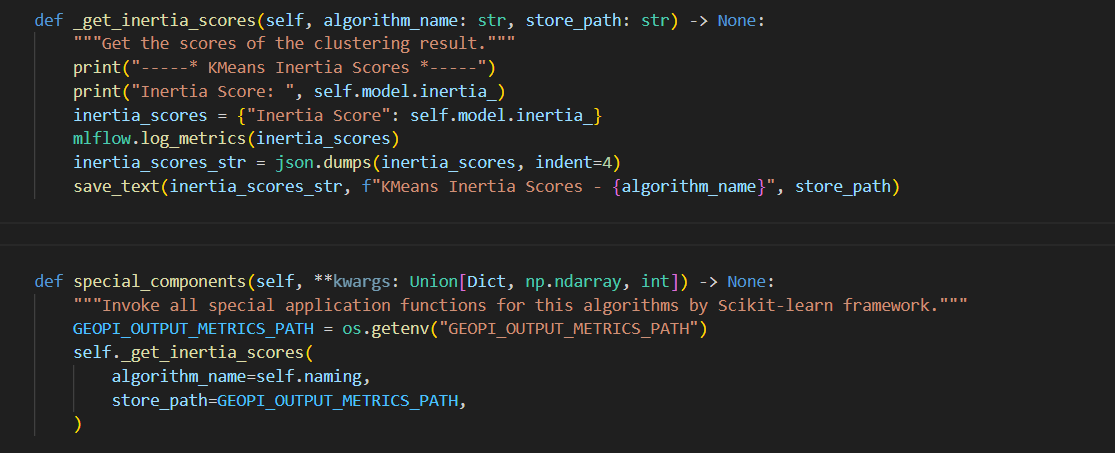

+### 3.4 Add Application Function to Model Workflow Class

-### 2.6 Add Functionality

+We treat the insightful outputs (index, scores) or diagrams to help to analyze and understand the algorithm as useful application. For example, XGBoost algorithm can produce feature importance score, hence, drawing feature importance diagram is an **application function** we can add to the model workflow class `XGBoostRegression`.

-#### 2.6.1 Model Research

+Conduct research on the corresponding model and look for its useful application functions that need to be added.

-Conduct research on the corresponding model and confirm the functions that need to be added.

++ You can confirm the functions that need to be added on the official website of the model (such as scikit learn), search engines (such as Google), chatGPT, etc.

-\+ You can confirm the functions that need to be added on the official website of the model (such as scikit learn), search engines (such as Google), chatGPT, etc.

+In our framework, we define two types of application function: **common application function** and **special application function**.

-(1) Common_component is a public function in a class, and all functions in each class can be used, so they need to be added in the parent class,Each of the parent classes can call Common_component.

+Common application function can be shared among the model workflow classes which belong to the same mode. It will be placed inside the base model workflow class. For example, `classification_report` is a common application function placed inside the base class `ClassificationWorkflowBase`. Notice that it is encapsulated in the **private** instance method `_classification_report`.

-(2) Special_component is unique to the model, so they need to be added in a specific model,Only they can use it.

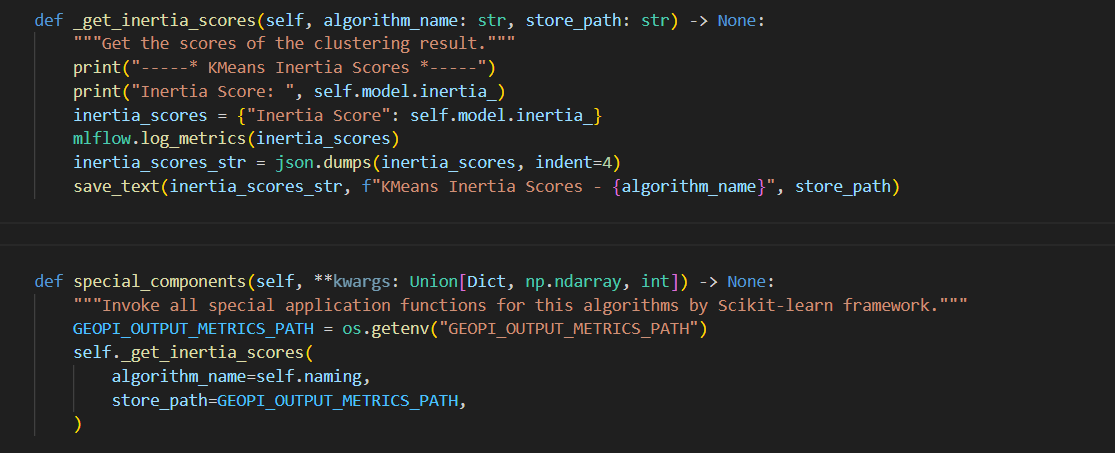

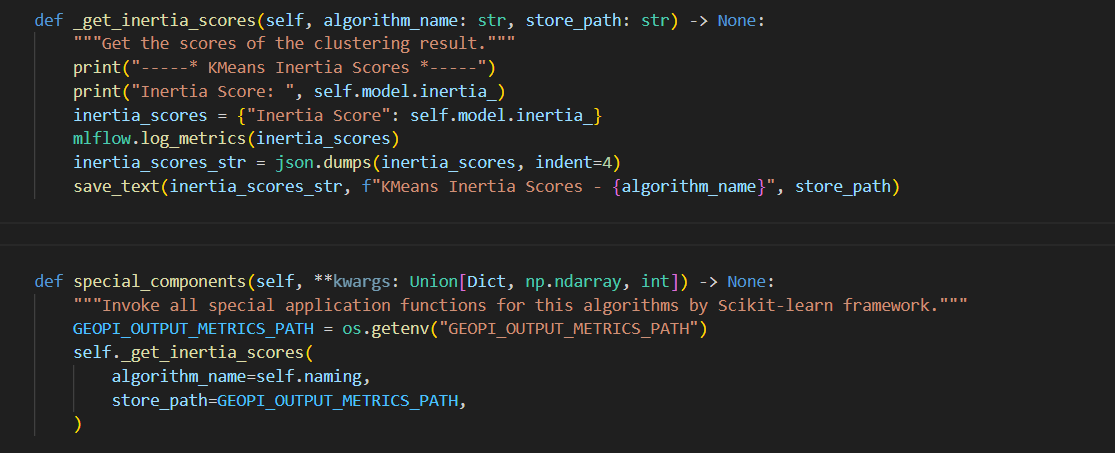

+Likewise, special application function is the special fucntionalities owned by the algorithm itself, hence it is placed inside a specific model workflow class. For example, for KMeans algorithm, we can get the inertia scores from it. Hence, inside the model workflow class `KMeansClustering`, we have a **private** instance method `_get_inertia_scores`.

-

+Now, the next question is how to invoke these application function in our framework.

+In fact, we put the invocation of the application function in the component method. Accordingly, we have two types of components:

+(1) `common_components` is a public method in the base class, and all common application functions will be invoked inside.

-#### 2.6.2 Add Common_component

+(2) `special_components` is unique to the algorithm, so they need to be added in a specific model workflow class. All special aaplication function related to this algorithm will be invoked inside.

-Common_component refer to functions that can be used by all internal submodels, so it is necessary to consider the situation of each submodel when adding them.

+

-***\*1. Add corresponding functionality to the parent class\****

+For more details, you can refer to the brief illustraion of the framework in section 1.

-Once you've identified the features you want to add, you can define the corresponding functions in the parent class.

+#### 3.4.1 Add Common Application Functions and `common_components` Method

-The code format is:

+`common_components` will invoke the common application functions used by all its children model workflow class, so it is necessary to consider the situation of each child model workflow class when adding a application function to it. The better way is to put the application function inside a specific child model workflow class firstly if you are not sure it can be classified as a common application function.

-(1) Define the function name and add the required parameters.

+**1. Add common application function to the base class**

-(2) Use annotations to describe function functionsUse annotations to describe function functions.

+Once you’ve identified the functionality you want to add, you can define the corresponding functions in the base class.

-(3) Referencing specific functions to implement functionality.

+The steps to implement are:

-(4) Change the format of data acquisition and save data or images.

+(1) Define the private function name and add the required parameters.

+(2) Use annotations to decorate the function.

+(3) Add the docstring to explain the use of this functionality.

+(4) Referencing specific libraries (e.g., Scikit-learn) to implement the functionality.

+(5) Change the format of data acquisition and save the produced data or images, etc.

+**2. Encapsulte the concrete code in Layer 1**

+Please refer to our framework's definition of **Layer 1** in section 1.

-***\*2. Define Common_component\****

+Some functions may use large code due to their complexity. To ensure the style and readability of the codebase, you need to put the specific function implementation into the corresponding `geochemistrypi/data_mining/model/func/mode/_common` files and call it.

-(1) Define the common_components in the parent class, its role is to set where the output is saved.

+The steps to implement are:

-(2) Set the parameter source for the added function.

+(1) Define the public function name, add the required parameters and proper decorator.

+(2) Add the docstring to explain the use of this functionality,the significance of each parameter and the related reference.

+(3) Implement functionality.

+(4) Returns the value used in **Layer 2**.

+**3. Define `common_components` Method**

+The steps to implement are:

-***\*3. Implement function functions\****

+(1) Define the path to store the data and images, etc.

+(2) Invoke the common application functions one by one.

-Some functions may use large code due to their complexity. To ensure the style and readability of the code, you need to put the specific function implementation into the corresponding `_common` files and call it.

+**4. Apeend The Name of Functionality in Class Attribute `common_function`**

-It includes:

+The steps to implement are:

-(1) Explain the significance of each parameter.

+(1) Create a class attribute `common_function` list in `ClusteringWorkflowBase`

+(2) Create a enum class to include the name of the functionality

+(3) Append the value of enum class into `common_function` list

-(2) Implement functionality.

+**Example**

-(3) Returns the required parameters.

+The following is the example of adding model evaluation score to the clustering base class.

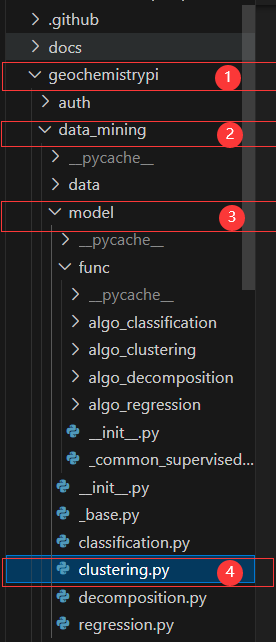

+First, you need to find the base class of clustering.

+

-***\*eg:\**** You want to add model evaluation to your clustering.

+

-

+For C2, the value of "estimator_list" should be the instance attribute `self.customized_name`. For example, `self.customized_name = "SVR"` in the model workflow class `SVMRegression`. Also we need to put this specified value inside a list.

-(1) Add the model name

-Add model name to the algorithm list corresponding to the model in the constants file.

-**eg:** Add the name of the Lasso regression algorithm.

-

-

-(2)set NON_AUTOML_MODELS

-Because this is a tutorial without automatic parameters, you need to add the model name in the NON_AUTOML_MODELS.

-**eg:**

-

+**Note:** You can keep the other key-value pair consistent with other exited model workflow classes.

+(2) Create `customization` method

+You can add the parameter tuning code according to the following code:

+```

+@property

+def customization(self) -> object:

+ """The customized 'Your model' of FLAML framework."""

+ from flaml import tune

+ from flaml.data import 'TPYE'

+ from flaml.model import SKLearnEstimator

+ from 'sklearn' import 'model_name'

+

+ class 'Model_Name'(SKLearnEstimator):

+ def __init__(self, task=type, n_jobs=None, **config):

+ super().__init__(task, **config)

+ if task in 'TYPE':

+ self.estimator_class = 'model_name'

+

+ @classmethod

+ def search_space(cls, data_size, task):

+ space = {

+ "'parameters1'": {"domain": tune.uniform(lower='...', upper='...'), "init_value": '...'},

+ "'parameters2'": {"domain": tune.choice([True, False])},

+ "'parameters3'": {"domain": tune.randint(lower='...', upper='...'), "init_value": '...'},

+ }

+ return space

+

+ return "Model_Name"

+```

+**Note1:** The content in ' ' needs to be modified according to your specific code. You can reference that one in the model workflow class `SVMRegression`.

+**Note2:**

+```

+space = {

+ "'parameters1'": {"domain": tune.uniform(lower='...', upper='...'), "init_value": '...'},

+ "'parameters2'": {"domain": tune.choice([True, False])},

+ "'parameters3'": {"domain": tune.randint(lower='...', upper='...'), "init_value": '...'},

+}

+```

++ tune.uniform represents float

++ tune.choice represents bool

++ tune.randint represents int

++ lower represents the minimum value of the range, upper represents the maximum value of the range, and init_value represents the initial value

+**Note:** You need to select parameters based on the actual situation of the model

+### 3.4 Add Application Function to Model Workflow Class

-### 2.6 Add Functionality

+We treat the insightful outputs (index, scores) or diagrams to help to analyze and understand the algorithm as useful application. For example, XGBoost algorithm can produce feature importance score, hence, drawing feature importance diagram is an **application function** we can add to the model workflow class `XGBoostRegression`.

-#### 2.6.1 Model Research

+Conduct research on the corresponding model and look for its useful application functions that need to be added.

-Conduct research on the corresponding model and confirm the functions that need to be added.

++ You can confirm the functions that need to be added on the official website of the model (such as scikit learn), search engines (such as Google), chatGPT, etc.

-\+ You can confirm the functions that need to be added on the official website of the model (such as scikit learn), search engines (such as Google), chatGPT, etc.

+In our framework, we define two types of application function: **common application function** and **special application function**.

-(1) Common_component is a public function in a class, and all functions in each class can be used, so they need to be added in the parent class,Each of the parent classes can call Common_component.

+Common application function can be shared among the model workflow classes which belong to the same mode. It will be placed inside the base model workflow class. For example, `classification_report` is a common application function placed inside the base class `ClassificationWorkflowBase`. Notice that it is encapsulated in the **private** instance method `_classification_report`.

-(2) Special_component is unique to the model, so they need to be added in a specific model,Only they can use it.

+Likewise, special application function is the special fucntionalities owned by the algorithm itself, hence it is placed inside a specific model workflow class. For example, for KMeans algorithm, we can get the inertia scores from it. Hence, inside the model workflow class `KMeansClustering`, we have a **private** instance method `_get_inertia_scores`.

-

+Now, the next question is how to invoke these application function in our framework.

+In fact, we put the invocation of the application function in the component method. Accordingly, we have two types of components:

+(1) `common_components` is a public method in the base class, and all common application functions will be invoked inside.

-#### 2.6.2 Add Common_component

+(2) `special_components` is unique to the algorithm, so they need to be added in a specific model workflow class. All special aaplication function related to this algorithm will be invoked inside.

-Common_component refer to functions that can be used by all internal submodels, so it is necessary to consider the situation of each submodel when adding them.

+

-***\*1. Add corresponding functionality to the parent class\****

+For more details, you can refer to the brief illustraion of the framework in section 1.

-Once you've identified the features you want to add, you can define the corresponding functions in the parent class.

+#### 3.4.1 Add Common Application Functions and `common_components` Method

-The code format is:

+`common_components` will invoke the common application functions used by all its children model workflow class, so it is necessary to consider the situation of each child model workflow class when adding a application function to it. The better way is to put the application function inside a specific child model workflow class firstly if you are not sure it can be classified as a common application function.

-(1) Define the function name and add the required parameters.

+**1. Add common application function to the base class**

-(2) Use annotations to describe function functionsUse annotations to describe function functions.

+Once you’ve identified the functionality you want to add, you can define the corresponding functions in the base class.

-(3) Referencing specific functions to implement functionality.

+The steps to implement are:

-(4) Change the format of data acquisition and save data or images.

+(1) Define the private function name and add the required parameters.

+(2) Use annotations to decorate the function.

+(3) Add the docstring to explain the use of this functionality.

+(4) Referencing specific libraries (e.g., Scikit-learn) to implement the functionality.

+(5) Change the format of data acquisition and save the produced data or images, etc.

+**2. Encapsulte the concrete code in Layer 1**

+Please refer to our framework's definition of **Layer 1** in section 1.

-***\*2. Define Common_component\****

+Some functions may use large code due to their complexity. To ensure the style and readability of the codebase, you need to put the specific function implementation into the corresponding `geochemistrypi/data_mining/model/func/mode/_common` files and call it.

-(1) Define the common_components in the parent class, its role is to set where the output is saved.

+The steps to implement are:

-(2) Set the parameter source for the added function.

+(1) Define the public function name, add the required parameters and proper decorator.

+(2) Add the docstring to explain the use of this functionality,the significance of each parameter and the related reference.

+(3) Implement functionality.

+(4) Returns the value used in **Layer 2**.

+**3. Define `common_components` Method**

+The steps to implement are:

-***\*3. Implement function functions\****

+(1) Define the path to store the data and images, etc.

+(2) Invoke the common application functions one by one.

-Some functions may use large code due to their complexity. To ensure the style and readability of the code, you need to put the specific function implementation into the corresponding `_common` files and call it.

+**4. Apeend The Name of Functionality in Class Attribute `common_function`**

-It includes:

+The steps to implement are:

-(1) Explain the significance of each parameter.

+(1) Create a class attribute `common_function` list in `ClusteringWorkflowBase`

+(2) Create a enum class to include the name of the functionality

+(3) Append the value of enum class into `common_function` list

-(2) Implement functionality.

+**Example**

-(3) Returns the required parameters.

+The following is the example of adding model evaluation score to the clustering base class.

+First, you need to find the base class of clustering.

+

-***\*eg:\**** You want to add model evaluation to your clustering.

+ -First, you need to find the parent class to clustering.

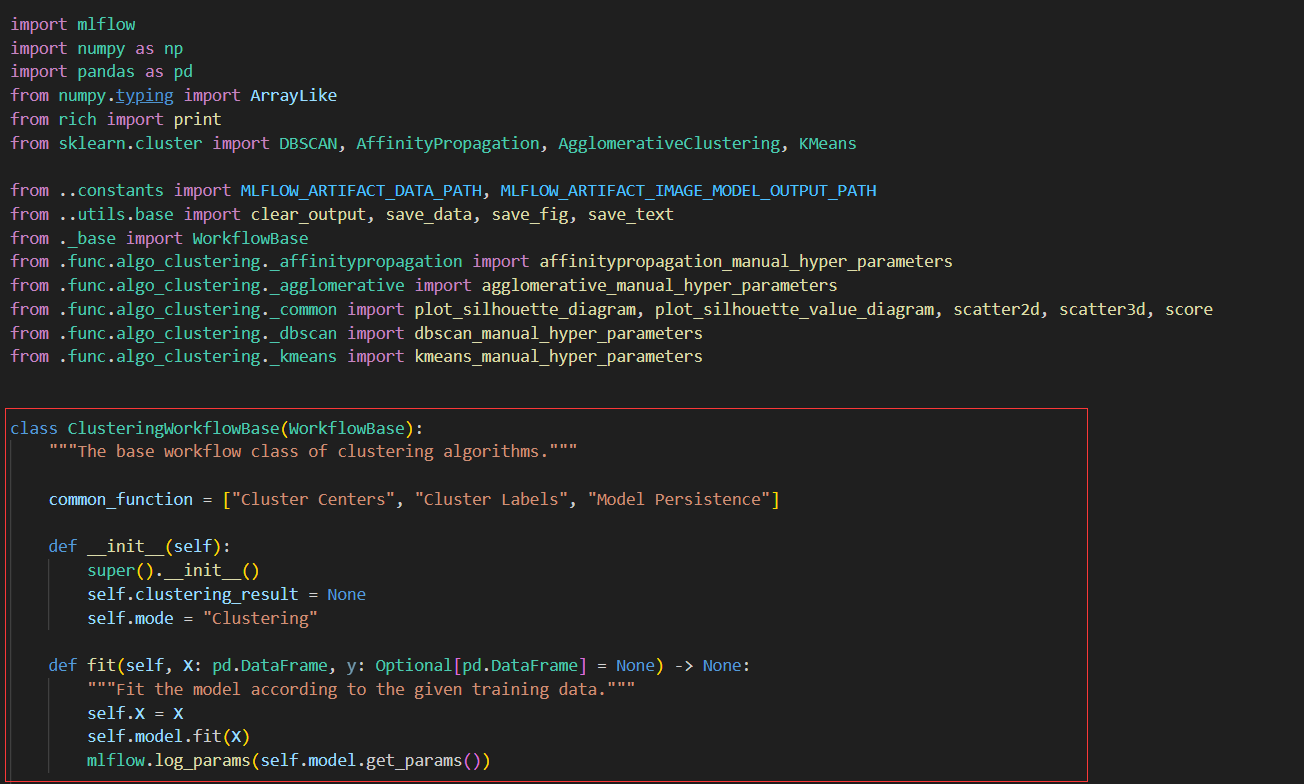

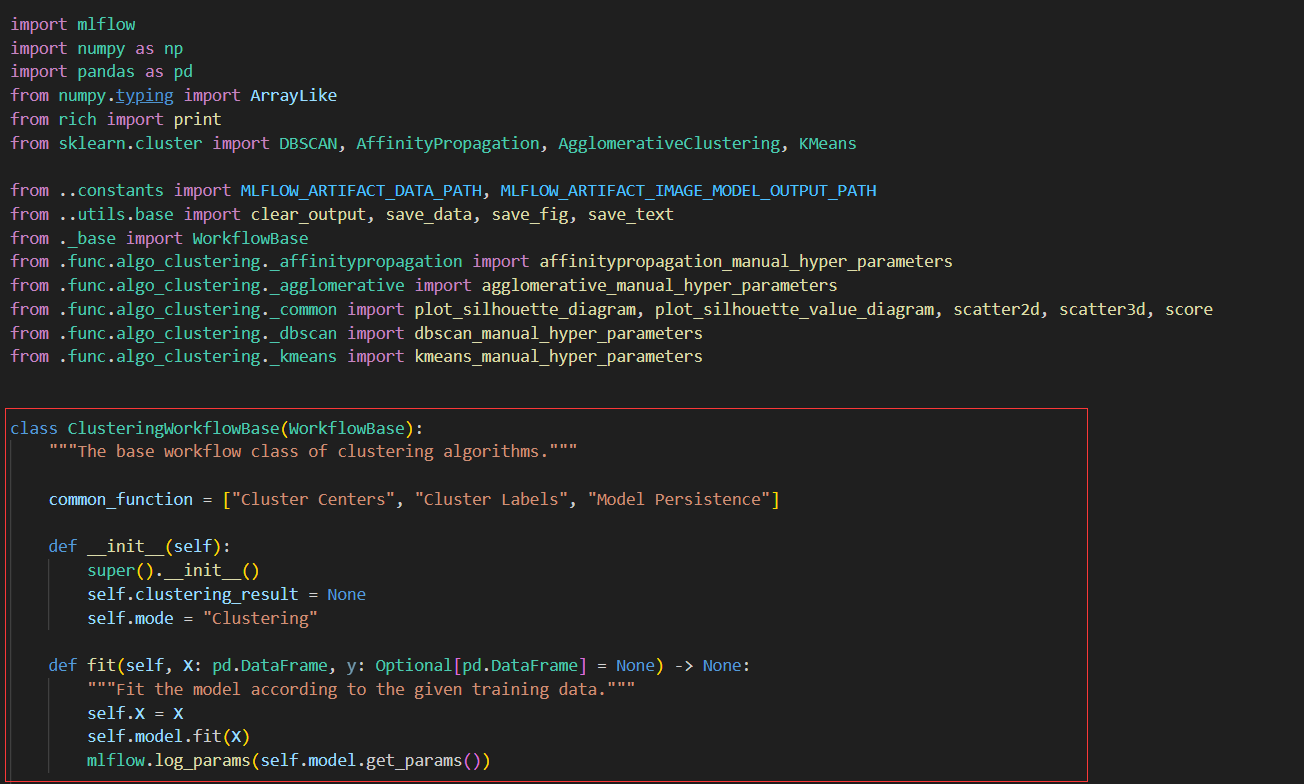

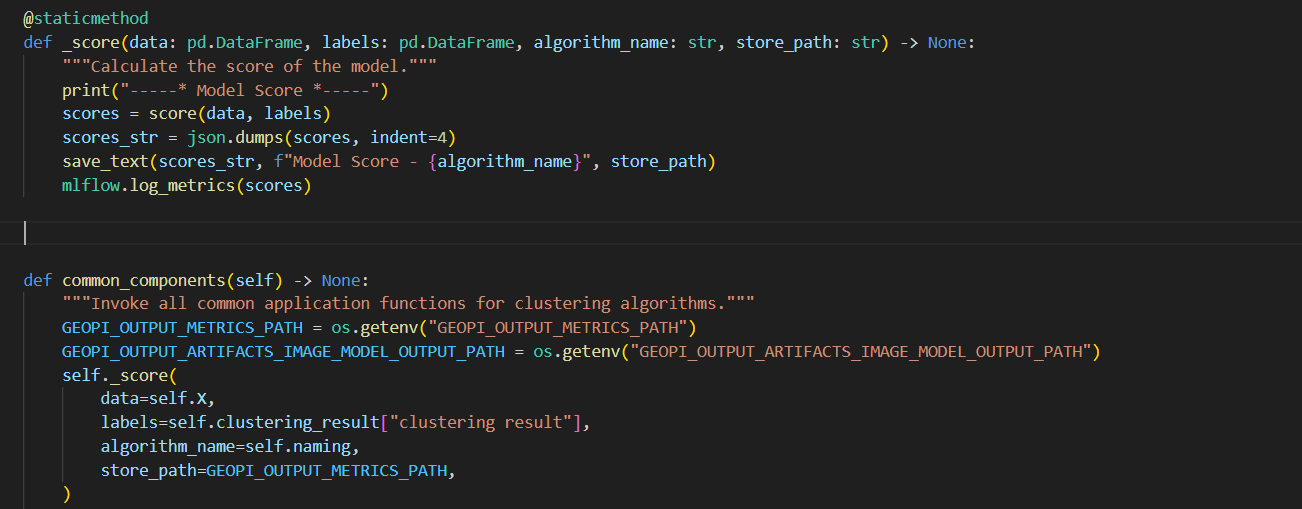

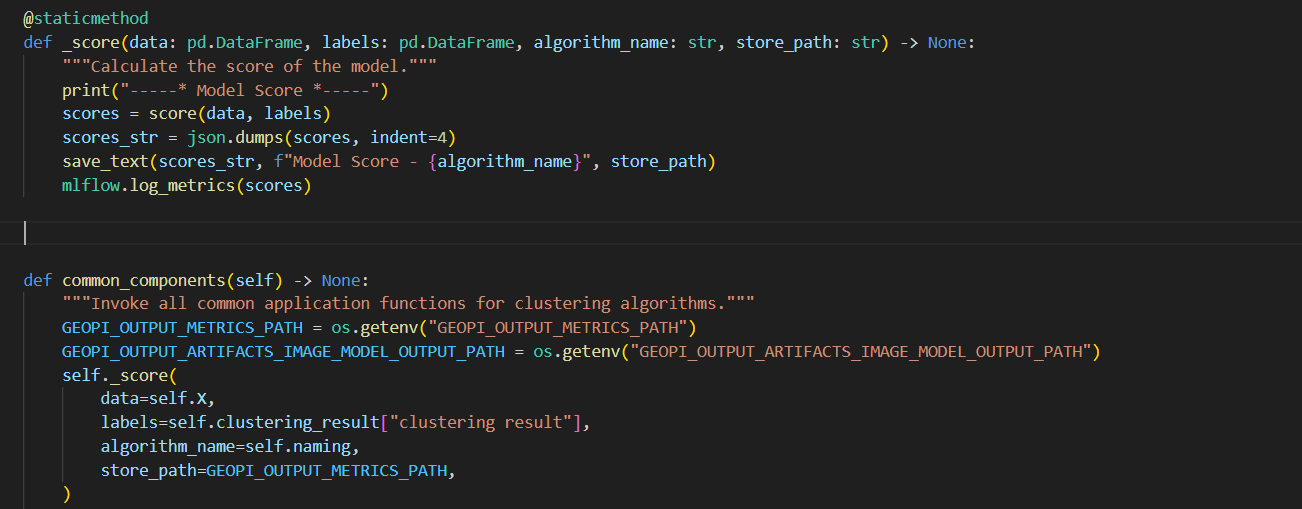

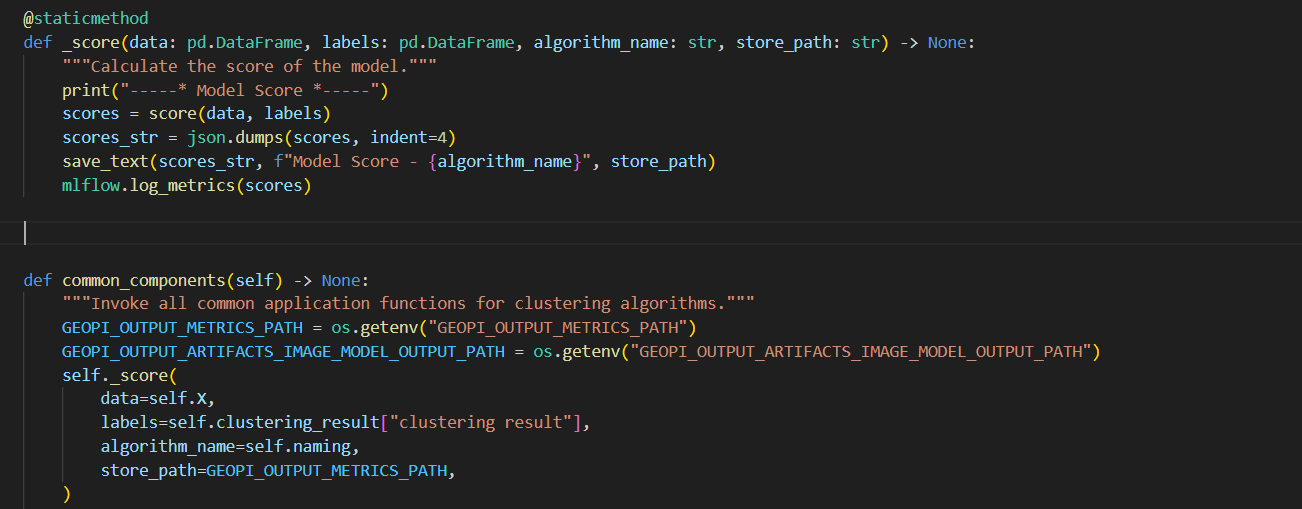

+**1. Add `_score` function in base class `ClusteringWorkflowBase(WorkflowBase)`**

-

+```python

+@staticmethod

+def _score(data: pd.DataFrame, labels: pd.DataFrame, func_name: str, algorithm_name: str, store_path: str) -> None:

+ """Calculate the score of the model."""

+ print(f"-----* {func_name} *-----")

+ scores = score(data, labels)

+ scores_str = json.dumps(scores, indent=4)

+ save_text(scores_str, f"{func_name}- {algorithm_name}", store_path)

+ mlflow.log_metrics(scores)

+```

-

+**2. Encapsulte the concrete code of `score` in Layer 1**

-***\*1. Add the clustering score function in class ClusteringWorkflowBase (WorkflowBase).\****

+You need to add the specific function implementation `score` to the corresponding `geochemistrypi/data_mining/model/func/algo_clustering/_common` file.

+

```python

+def score(data: pd.DataFrame, labels: pd.DataFrame) -> Dict:

+ """Calculate the scores of the clustering model.

-@staticmethod

-def _score(data: pd.DataFrame, labels: pd.DataFrame, algorithm_name: str, store_path: str) -> None:

+ Parameters

+ ----------

+ data : pd.DataFrame (n_samples, n_components)

+ The true values.

- """Calculate the score of the model."""

+ labels : pd.DataFrame (n_samples, n_components)

+ Labels of each point.

+

+ Returns

+ -------

+ scores : dict

+ The scores of the clustering model.

+ """

+ silhouette = silhouette_score(data, labels)

+ calinski_harabaz = calinski_harabasz_score(data, labels)

+ print("silhouette_score: ", silhouette)

+ print("calinski_harabasz_score:", calinski_harabaz)

+ scores = {

+ "silhouette_score": silhouette,

+ "calinski_harabasz_score": calinski_harabaz,

+ }

+ return scores

+```

- print("-----* Model Score *-----")

+**3. Define `common_components` Method in class `ClusteringWorkflowBase(WorkflowBase)`**

- scores = score(data, labels)

+```python

+def common_components(self) -> None:

+ """Invoke all common application functions for clustering algorithms."""

+ GEOPI_OUTPUT_METRICS_PATH = os.getenv("GEOPI_OUTPUT_METRICS_PATH")

+ GEOPI_OUTPUT_ARTIFACTS_IMAGE_MODEL_OUTPUT_PATH = os.getenv("GEOPI_OUTPUT_ARTIFACTS_IMAGE_MODEL_OUTPUT_PATH")

+ self._score(

+ data=self.X,

+ labels=self.clustering_result["clustering result"],

+ func_name=ClusteringCommonFunction.MODEL_SCORE.value,

+ algorithm_name=self.naming,

+ store_path=GEOPI_OUTPUT_METRICS_PATH,

+ )

+```

- scores_str = json.dumps(scores, indent=4)

+**4. Apeend The Name of Functionality in Class Attribute `common_function`**

- save_text(scores_str, f"Model Score - {algorithm_name}", store_path)

+Create a class attribute `common_function` in `ClusteringWorkflowBase`.

- mlflow.log_metrics(scores)

+```

+class ClusteringWorkflowBase(WorkflowBase):

+ """The base workflow class of clustering algorithms."""

+ common_function = [func.value for func in ClusteringCommonFunction]

```

+The enum class should be put in the corresponding path `geochemistrypi/data-mining/model/func/algo_clustering/_enum.py`

-(1) Define the function name and add the required parameters.

+

-First, you need to find the parent class to clustering.

+**1. Add `_score` function in base class `ClusteringWorkflowBase(WorkflowBase)`**

-

+```python

+@staticmethod

+def _score(data: pd.DataFrame, labels: pd.DataFrame, func_name: str, algorithm_name: str, store_path: str) -> None:

+ """Calculate the score of the model."""

+ print(f"-----* {func_name} *-----")

+ scores = score(data, labels)

+ scores_str = json.dumps(scores, indent=4)

+ save_text(scores_str, f"{func_name}- {algorithm_name}", store_path)

+ mlflow.log_metrics(scores)

+```

-

+**2. Encapsulte the concrete code of `score` in Layer 1**

-***\*1. Add the clustering score function in class ClusteringWorkflowBase (WorkflowBase).\****

+You need to add the specific function implementation `score` to the corresponding `geochemistrypi/data_mining/model/func/algo_clustering/_common` file.

+

```python

+def score(data: pd.DataFrame, labels: pd.DataFrame) -> Dict:

+ """Calculate the scores of the clustering model.

-@staticmethod

-def _score(data: pd.DataFrame, labels: pd.DataFrame, algorithm_name: str, store_path: str) -> None:

+ Parameters

+ ----------

+ data : pd.DataFrame (n_samples, n_components)

+ The true values.

- """Calculate the score of the model."""

+ labels : pd.DataFrame (n_samples, n_components)

+ Labels of each point.

+

+ Returns

+ -------

+ scores : dict

+ The scores of the clustering model.

+ """

+ silhouette = silhouette_score(data, labels)

+ calinski_harabaz = calinski_harabasz_score(data, labels)

+ print("silhouette_score: ", silhouette)

+ print("calinski_harabasz_score:", calinski_harabaz)

+ scores = {

+ "silhouette_score": silhouette,

+ "calinski_harabasz_score": calinski_harabaz,

+ }

+ return scores

+```

- print("-----* Model Score *-----")

+**3. Define `common_components` Method in class `ClusteringWorkflowBase(WorkflowBase)`**

- scores = score(data, labels)

+```python

+def common_components(self) -> None:

+ """Invoke all common application functions for clustering algorithms."""

+ GEOPI_OUTPUT_METRICS_PATH = os.getenv("GEOPI_OUTPUT_METRICS_PATH")

+ GEOPI_OUTPUT_ARTIFACTS_IMAGE_MODEL_OUTPUT_PATH = os.getenv("GEOPI_OUTPUT_ARTIFACTS_IMAGE_MODEL_OUTPUT_PATH")

+ self._score(

+ data=self.X,

+ labels=self.clustering_result["clustering result"],

+ func_name=ClusteringCommonFunction.MODEL_SCORE.value,

+ algorithm_name=self.naming,

+ store_path=GEOPI_OUTPUT_METRICS_PATH,

+ )

+```

- scores_str = json.dumps(scores, indent=4)

+**4. Apeend The Name of Functionality in Class Attribute `common_function`**

- save_text(scores_str, f"Model Score - {algorithm_name}", store_path)

+Create a class attribute `common_function` in `ClusteringWorkflowBase`.

- mlflow.log_metrics(scores)

+```

+class ClusteringWorkflowBase(WorkflowBase):

+ """The base workflow class of clustering algorithms."""

+ common_function = [func.value for func in ClusteringCommonFunction]

```