
Greetings,

I'm currently trying to compile the following model:

    import brevitas.nn as qnn
    import torch.nn as nn
    from brevitas.quant import (
        Int8ActPerTensorFixedPoint as ActQuant,
        Int8WeightPerTensorFixedPoint as WeightQuant,
    )


    class ExtendedActQuant(ActQuant):
        bit_width = 16


    class ExtendedWeightQuant(WeightQuant):
        bit_width = 16


    class QSPTModel(nn.Module):
        def __init__(self,
                     in_channels: int,
                     image_size: int,
                     convolution_sizes: list,
                     dense_sizes: list,
                     output_size: int) -> None:
            super(QSPTModel, self).__init__()
            self.identity = qnn.QuantIdentity(
                act_quant=ExtendedActQuant,
                return_quant_tensor=True
            )
            self.layers = nn.Sequential()
            in_size = in_channels
            for idx, size in enumerate(convolution_sizes, 1):
                self.layers.add_module(
                    name=f"conv_{idx}",
                    module=qnn.QuantConv2d(
                        in_size,
                        size,
                        kernel_size=(3, 3),
                        padding_type="same",
                        weight_quant=ExtendedWeightQuant,
                        return_quant_tensor=True
                    )
                )
                self.layers.add_module(
                    name=f"conv_{idx}_relu",
                    module=qnn.QuantReLU(
                        act_quant=ExtendedActQuant,
                        return_quant_tensor=True
                    )
                )
                self.layers.add_module(
                    name=f"maxpool_{idx}",
                    module=qnn.QuantMaxPool2d(
                        (2, 2),
                        return_quant_tensor=True
                    )
                )
                in_size = size
            self.layers.add_module(name="flatten", module=nn.Flatten())

            # the convolution layers squeeze the image of a factor 2
            # after len(convolution_sizes) layers, the final size will be
            # convolution_sizes[-1]*((image_size / (2**len(convolution_sizes)))**2)
            maxpool_final_size = image_size // (2**len(convolution_sizes))
            in_size = convolution_sizes[-1]*(maxpool_final_size*maxpool_final_size)
            for idx, size in enumerate(dense_sizes, 1):
                self.layers.add_module(
                    name=f"linear_{idx}",
                    module=qnn.QuantLinear(
                        in_size,
                        size,
                        bias=True,
                        weight_quant=ExtendedWeightQuant,
                        return_quant_tensor=True
                    )
                )
                self.layers.add_module(
                    name=f"linear_{idx}_relu",
                    module=qnn.QuantReLU(
                        act_quant=ExtendedActQuant,
                        return_quant_tensor=True
                    )
                )
                in_size = size
            self.layers.add_module(
                name=f"linear_output",
                module=qnn.QuantLinear(
                    in_size,
                    output_size,
                    bias=True,
                    weight_quant=ExtendedWeightQuant,
                    return_quant_tensor=False
                )
            )

        def forward(self, x):
            x = self.identity(x)
            x = self.layers(x)
            return x
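For reference, the flatten-size arithmetic described in the comment inside the model can be checked in isolation. This is only a minimal sketch: the helper name `flattened_size` and the example values (a 32x32 image with two convolution blocks) are hypothetical and are not the configuration actually used for this model.

```python
def flattened_size(image_size: int, convolution_sizes: list) -> int:
    """Number of features entering the first QuantLinear layer.

    Each convolution block ends in a (2, 2) max-pool, so the spatial side
    is halved once per block (integer division, as in the model code).
    """
    final_side = image_size // (2 ** len(convolution_sizes))
    # channels of the last conv block times the remaining spatial area
    return convolution_sizes[-1] * final_side * final_side


# Hypothetical example: 32x32 input, two conv blocks with 16 and 32 channels.
# 32 // 2**2 = 8, so the flattened size is 32 * 8 * 8 = 2048.
print(flattened_size(32, [16, 32]))
```

Note that the integer division silently drops any remainder, so an `image_size` that is not a multiple of `2**len(convolution_sizes)` gives a smaller feature count than the real-valued formula in the comment suggests.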

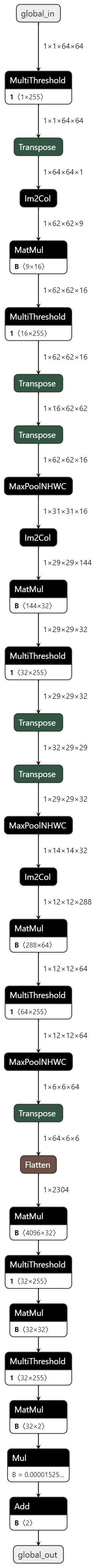

After some tidy-up transformations, this is the output of the streamlining step:

Afterwards, I try to apply the transformation steps for creating a dataflow partition as follows:

    import finn.transformation.fpgadataflow.convert_to_hls_layers as to_hls
    import finn.transformation.streamline.absorb as absorb
    from finn.transformation.fpgadataflow.create_dataflow_partition import (
        CreateDataflowPartition,
    )
    from finn.transformation.move_reshape import RemoveCNVtoFCFlatten
    from qonnx.core.modelwrapper import ModelWrapper
    from qonnx.custom_op.registry import getCustomOp
    from qonnx.transformation.infer_data_layouts import InferDataLayouts

    # build_dir, model_name, streamline and mem_mode are defined earlier in the notebook
    chkpt_name = build_dir + model_name + streamline + ".onnx"
    model = ModelWrapper(chkpt_name)

    model = model.transform(absorb.AbsorbSignBiasIntoMultiThreshold())
    model = model.transform(to_hls.InferBinaryMatrixVectorActivation(mem_mode))
    model = model.transform(to_hls.InferQuantizedMatrixVectorActivation(mem_mode))
    # TopK to LabelSelect
    model = model.transform(to_hls.InferLabelSelectLayer())
    # input quantization (if any) to standalone thresholding
    model = model.transform(to_hls.InferThresholdingLayer())
    model = model.transform(to_hls.InferConvInpGen())
    model = model.transform(to_hls.InferStreamingMaxPool())
    # get rid of Reshape(-1, 1) operation between hlslib nodes
    model = model.transform(RemoveCNVtoFCFlatten())
    # get rid of Transpose -> Transpose identity seq
    model = model.transform(absorb.AbsorbConsecutiveTransposes())
    # infer tensor data layouts
    model = model.transform(InferDataLayouts())
    parent_model = model.transform(CreateDataflowPartition())

This causes the following exception:

    ---------------------------------------------------------------------------
    AssertionError                            Traceback (most recent call last)
    <ipython-input-17-a35fcd046467> in <module>
         12 model = model.transform(absorb.AbsorbSignBiasIntoMultiThreshold())
         13 model = model.transform(to_hls.InferBinaryMatrixVectorActivation(mem_mode))
    ---> 14 model = model.transform(to_hls.InferQuantizedMatrixVectorActivation(mem_mode))
         15 # TopK to LabelSelect
         16 model = model.transform(to_hls.InferLabelSelectLayer())

    /home/jacopo/git/finn/deps/qonnx/src/qonnx/core/modelwrapper.py in transform(self, transformation, make_deepcopy, cleanup)
        138 model_was_changed = True
        139 while model_was_changed:
    --> 140 (transformed_model, model_was_changed) = transformation.apply(transformed_model)
        141 if cleanup:
        142 transformed_model.cleanup()

    /home/jacopo/git/finn/src/finn/transformation/fpgadataflow/convert_to_hls_layers.py in apply(self, model)
        785 + ": out_scale=1 or bipolar output needed for conversion."
        786 )
    --> 787 assert (not odt.signed()) or (actval < 0), (
        788 consumer.name + ": Signed output requres actval < 0"
        789 )

    AssertionError: MultiThreshold_1: Signed output requres actval < 0

I checked the existing issues and this exception is mentioned in issue #337 but for a different transformation step.
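To make the failing condition easier to reason about, the check from the traceback can be restated as a standalone predicate. This is only a sketch that mirrors the boolean expression `(not odt.signed()) or (actval < 0)` shown above; the function name and the `output_is_signed` parameter (standing in for `odt.signed()`) are hypothetical, and it does not reproduce how FINN derives `odt` or `actval` from the graph.

```python
def signed_output_check_passes(output_is_signed: bool, actval: int) -> bool:
    """Mirror of the assertion condition seen in the traceback.

    The check passes when the output datatype is unsigned, or when it is
    signed and actval is strictly negative.
    """
    return (not output_is_signed) or (actval < 0)


# A signed output datatype combined with a non-negative actval is the
# only combination that trips the assertion.
for signed, actval in [(False, 0), (True, -128), (True, 0)]:
    print(signed, actval, signed_output_check_passes(signed, actval))
```

In other words, the message for `MultiThreshold_1` means the conversion saw a signed output datatype together with an `actval` that is zero or positive.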

FINN branch: main (v0.8.1)

OS: Windows 10 (WSL2 Ubuntu 18.04)

Python: 3.8


Hello @jacopoabramo,

Have you solved it? If so, could you please explain how?

Best,


Hi @Ba1tu3han, I haven't been working on this for a long time now so I really couldn't say.
